Category: Prosthetics

-

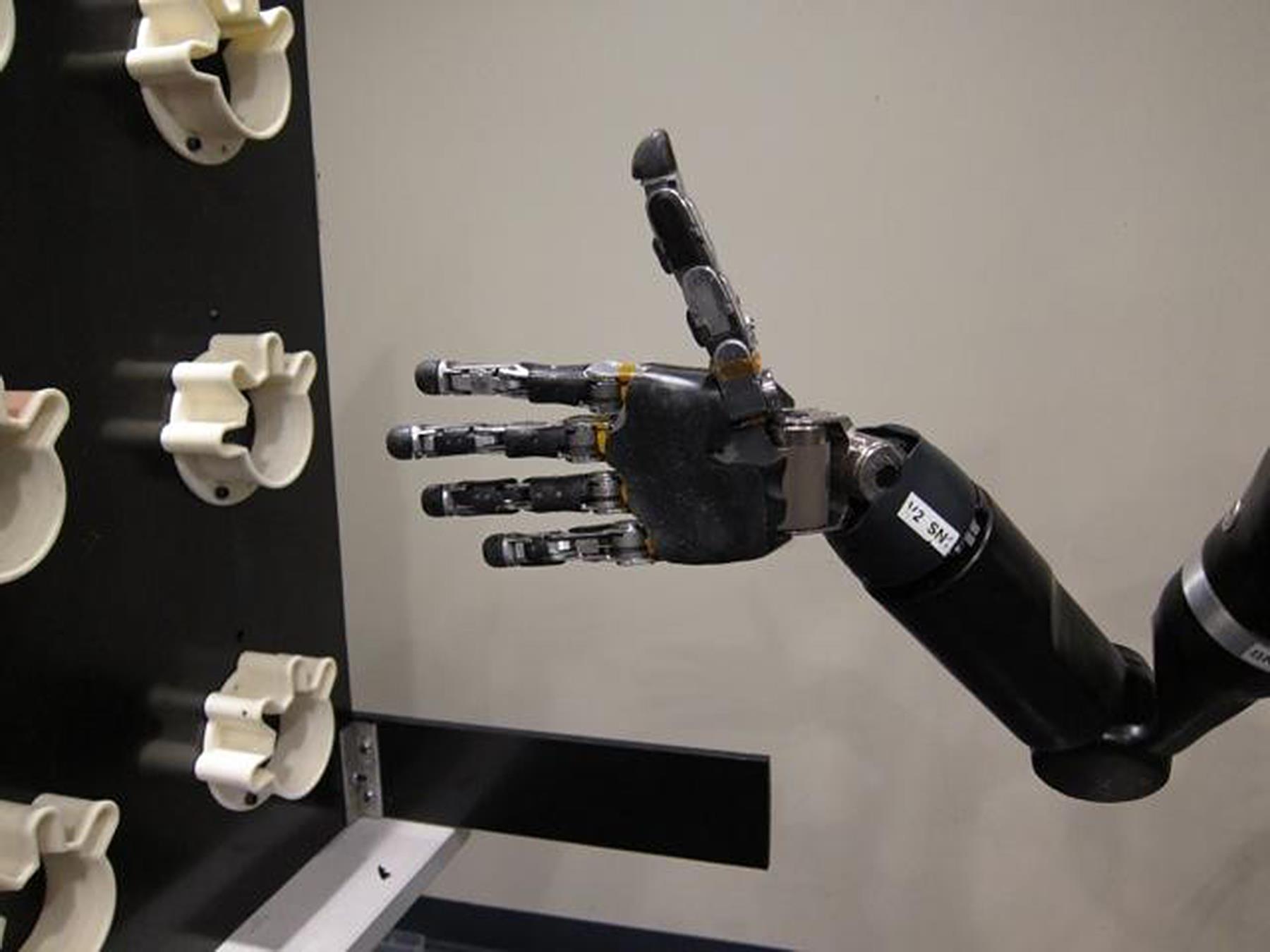

Mind controlled prosthetic fingers

Johns Hopkins researchers have developed a proof of concept for a prosthetic arm whose fingers can, for the first time, be controlled by the wearer’s thoughts. The technology was tested on a patient with epilepsy who was not missing any limbs. The researchers used brain mapping technology to bypass control of his arms and hands. (The patient was…

-

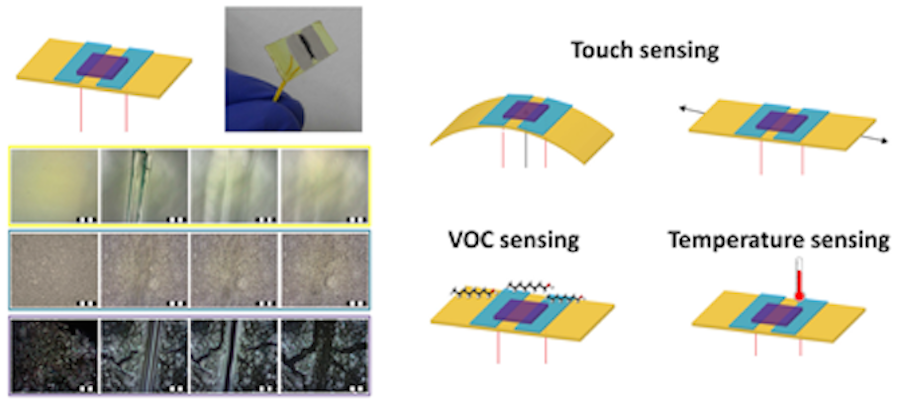

Self-healing sensor improves “electronic skin”

Hossam Haick and colleagues at the Technion are developing materials for flexible electronics that mimic the healing properties of human skin. The goal is to quickly repair incidental scratches or damaging cuts that might compromise device functionality. The synthetic polymer can “heal” electronic skin within one day, which can improve the materials used to…

-

Sensors allow more natural sense of touch in prosthetics

Stanford’s Zhenan Bao is developing technology that could restore a more natural sense of touch in prosthetics. Her thin, flexible plastic sensors send signals to the brain that more closely resemble the nerve messages produced by the touch sensors in human skin. The technology has not yet been tested on humans, and researchers still need to find a safe way to…

-

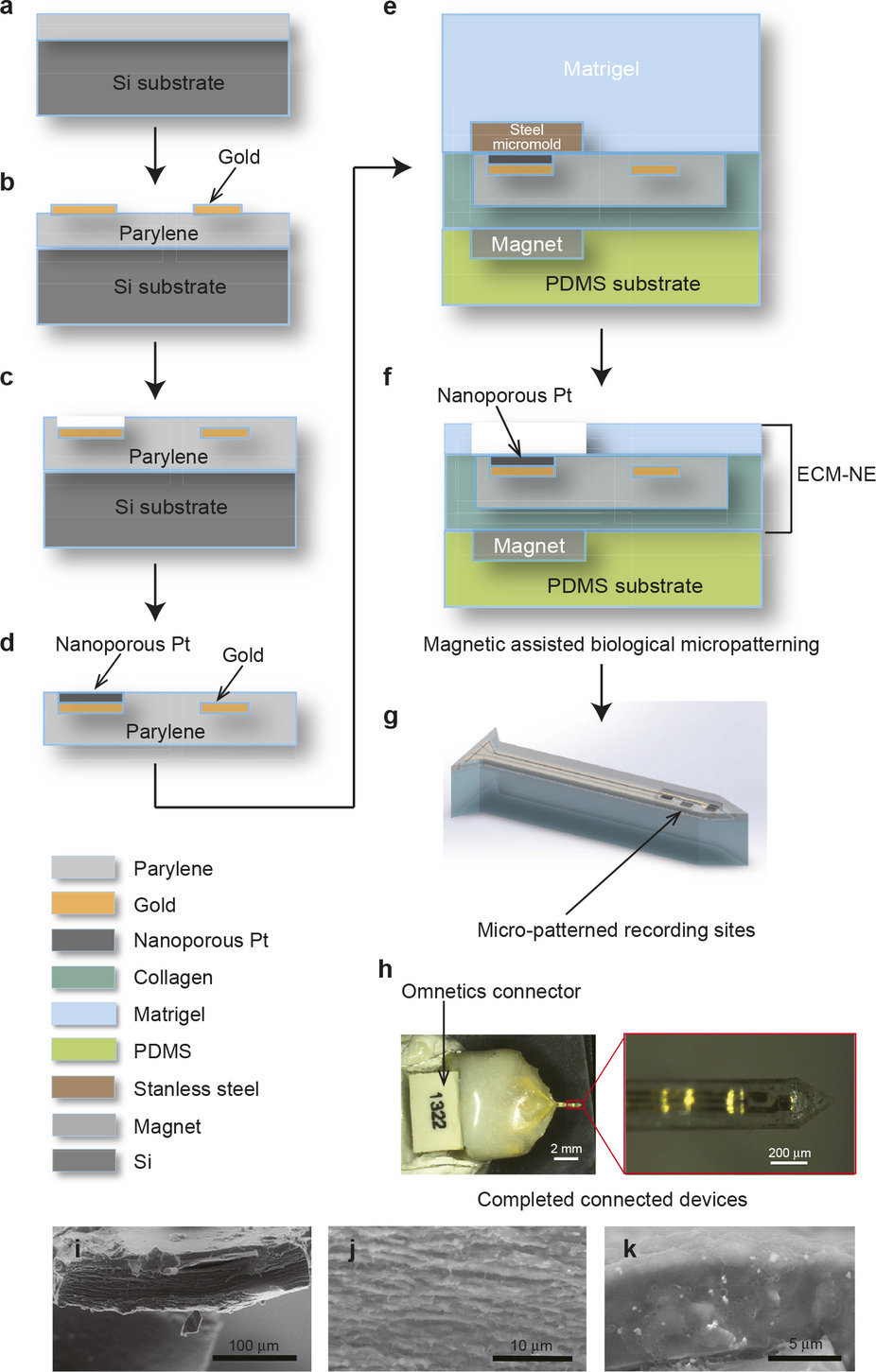

Biocompatible neural prosthetics

Spinal injury patients and people who have lost limbs sometimes have neural prosthetic devices implanted in an attempt to regain independence. These devices are used for deep brain stimulation and for brain-controlled external prosthetics. However, neural prosthetics are often rejected by the immune system, and can fail because of a mismatch between soft brain tissue and rigid…

-

“Lifelike” bionic hand for women and teenagers

Steeper’s bebionic is a small, “lifelike” bionic hand created for women and teenagers. It is designed around an accurate skeletal structure with 337 mechanical parts, and its 14 grip patterns and hand positions mimic real hand functions. Its first user, Nicky Ashwell, was born without a right hand. After being fitted with the prosthetic,…

-

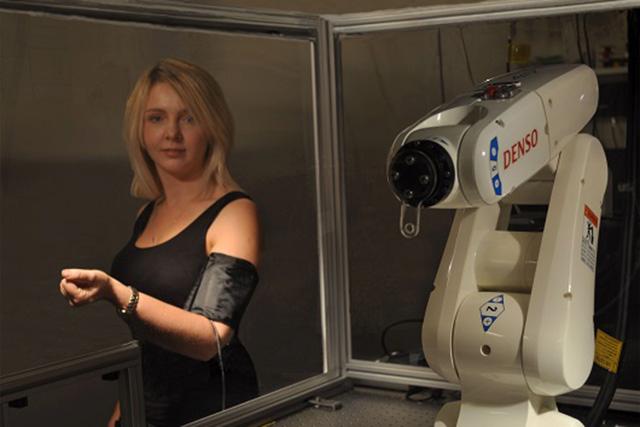

Intent controlled robotic arm with neuroprosthetic implant

Caltech and Keck researchers implanted neuroprosthetics in a part of the brain that controls the intent to move, with the goal of producing more natural and fluid motions. The study, published in Science, was led by Richard Andersen. A quadriplegic patient implanted with the device was able to perform a fluid handshaking gesture and play “rock,…

-

Intent controlled prosthetic foot using myoelectric sensors

Ossur’s sensor implant allows amputees to control bionic prosthetic limbs with their minds. Myoelectric sensors are surgically placed in residual muscle tissue, and prosthetic movement is triggered via a receiver. Ossur’s existing “smart limbs” are capable of real-time learning and automatically adjust to a user’s gait, speed and terrain. However, conscious thought is still required.…
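The blurb does not describe how Ossur’s receiver interprets the muscle signals. As a generic illustration only, assuming a simple amplitude-threshold scheme of the kind used in basic on/off myoelectric control (not confirmed as Ossur’s method), muscle activation can be detected by computing the root-mean-square (RMS) amplitude of short windows of EMG samples and flagging windows above a threshold. The function names, window size, threshold and sample values below are hypothetical.

```python
import math
from typing import List, Sequence


def rms(window: Sequence[float]) -> float:
    """Root-mean-square amplitude of one window of EMG samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def detect_activation(samples: Sequence[float], window_size: int, threshold: float) -> List[bool]:
    """Hypothetical trigger: split the signal into non-overlapping windows and
    flag each window whose RMS amplitude exceeds the threshold."""
    flags = []
    for start in range(0, len(samples) - window_size + 1, window_size):
        flags.append(rms(samples[start:start + window_size]) > threshold)
    return flags


if __name__ == "__main__":
    # Made-up samples: resting muscle, a contraction burst, then rest again.
    quiet = [0.01, -0.02, 0.015, -0.01]
    burst = [0.5, -0.6, 0.55, -0.45]
    signal = quiet + burst + quiet
    print(detect_activation(signal, window_size=4, threshold=0.2))  # [False, True, False]
```

A real controller would typically add filtering and hysteresis so that noise near the threshold does not make the prosthetic chatter; this sketch leaves those out for brevity.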

-

Implant to enable prosthetic sensations

Washington University’s Daniel Moran has received a DARPA grant to test a device that would stimulate nerves in the upper arm and forearm of prosthetic users. The goal is for the wearer to be able to feel hot, cold, and a sense of touch. In a related development last year, MC10’s Roozbeh Ghaffari developed artificial skin…

-

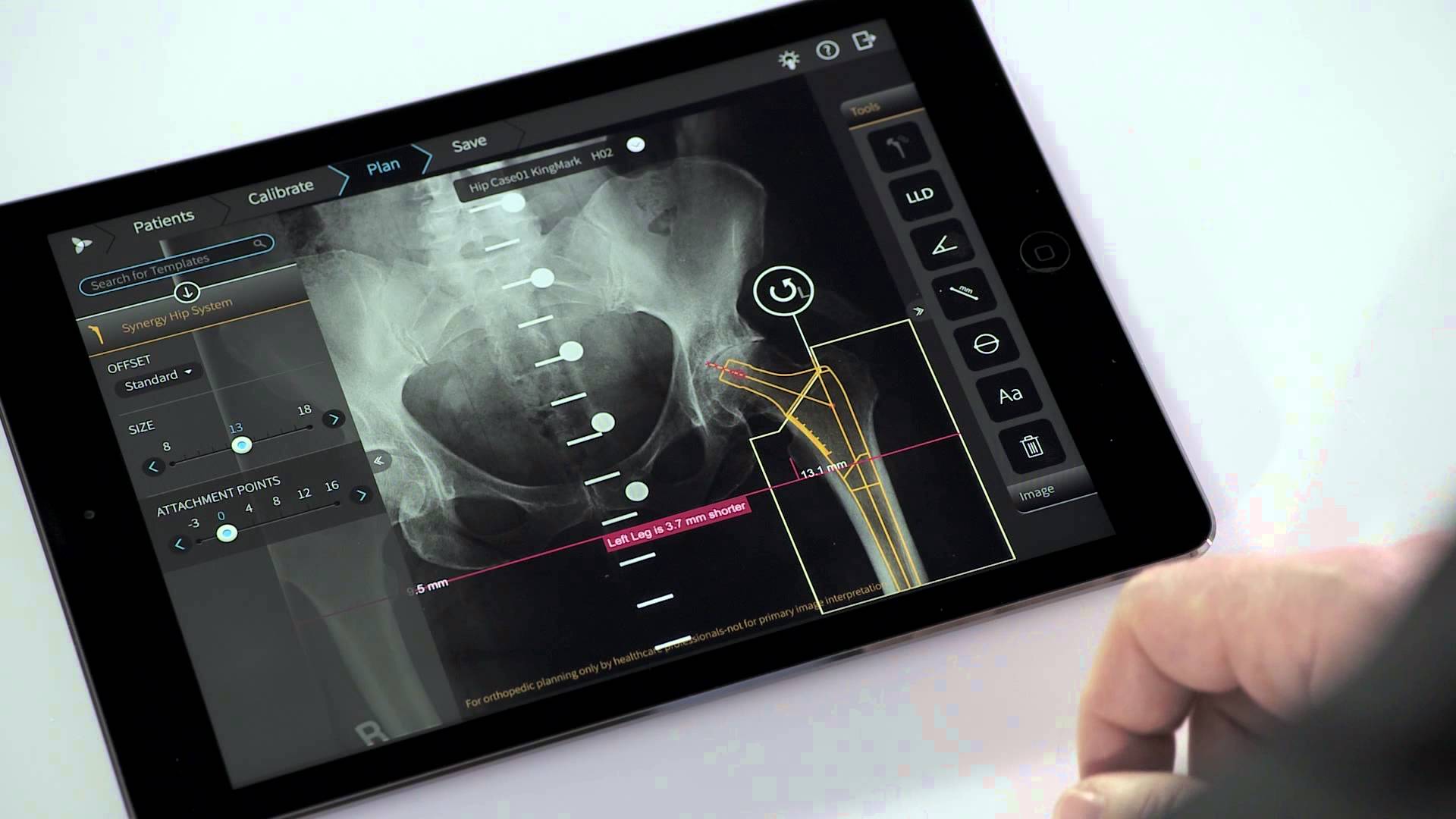

App helps orthopedic surgeons plan procedures

Tel Aviv-based Voyant Health’s TraumaCad Mobile app helps orthopedic surgeons plan operations and create result simulations. The system offers modules for hip, knee, deformity, pediatric, upper limb, spine, foot and ankle, and trauma surgery. The mobile iPad version of this decade-old system was recently approved by the FDA. Surgeons can securely import medical images from the…

-

Artificial skin detects pressure, moisture, heat, cold

MC10’s Roozbeh Ghaffari and a team of researchers from the US and Korea have developed artificial skin for prosthetics that mimics the sensitivity of real skin. Its silicon and gold sensors detect pressure, moisture, heat and cold. It is elastic enough for users to stretch and move a bionic hand’s fingers as they would…

-

BCI enabled 10-D prosthetic arm control

Jennifer Collinger and colleagues at the University of Pittsburgh have, for the first time, enabled a prosthetic arm user to reach, grasp, and place a variety of objects with 10-D control. The trial participant had electrode grids with 96 contact points surgically implanted in her brain in 2012, which allowed 3-D control of her arm. Each electrode point picked up signals…

-

Wearable optical sensor controls prosthetic limbs

Ifor Samuel and Ashu Bansal at the University of St. Andrews have developed a wearable optical sensor that can be used to control the movement of artificial limbs. Sensors based on plastic semiconductors detect muscle contraction: light is shone into the fibrous muscle, and the scattering of the light is observed. When muscle is contracted, the light scatters…