Category: Cancer

-

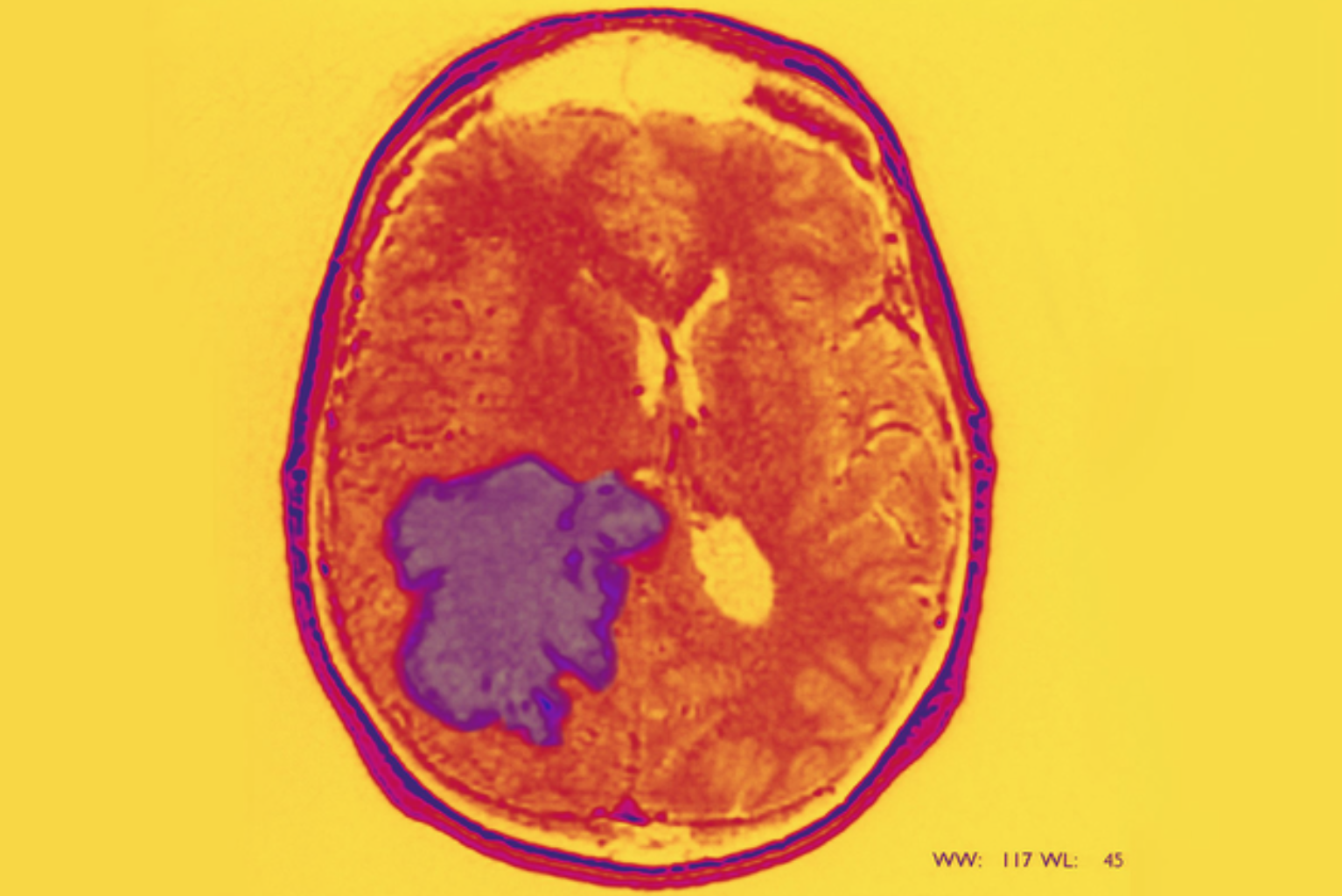

Starving cancer stem cells as a new approach to glioblastoma

Luis Parada and Sloan Kettering colleagues are focusing on cancer stem cells as a new approach to glioblastoma. Like normal stem cells, cancer stem cells have the ability to rebuild a tumor, even after most of it has been removed, leading to cancer relapse and metastasis. According to Parada: “The pharmaceutical industry has traditionally used…

-

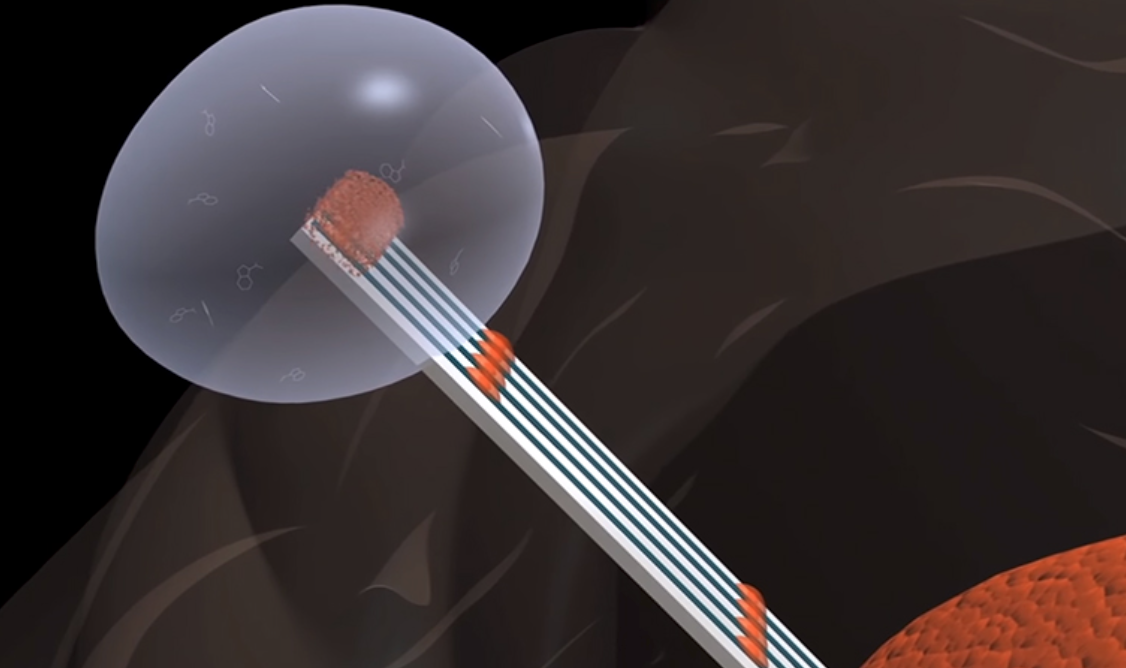

“Monorail” could halt spread of brain tumors

Duke’s Ravi Bellamkonda has developed a “Tumor Monorail” that tricks aggressive brain tumors such as glioblastoma into migrating into an external container rather than spreading through the brain. The U.S. Food and Drug Administration (FDA) has designated it a “Breakthrough Device.” The device mimics the physical properties of the brain’s white matter to entice aggressive tumors to…

-

Wireless system could track tumors, dispense medicine

Dina Katabi and MIT CSAIL colleagues have developed ReMix, which uses low-power wireless signals to pinpoint the location of implants in the body. The tiny implants could be used as tracking devices on shifting tumors to monitor their movements and, in the future, to deliver drugs to specific regions. The technology showed centimeter-level accuracy in animal…

-

AI-optimized glioblastoma chemotherapy

Pratik Shah, Gregory Yauney, and MIT Media Lab researchers have developed an AI model that could make glioblastoma chemotherapy regimens less toxic but still effective. It analyzes current regimens and iteratively adjusts doses to find the lowest possible potency and frequency that still reduce tumor size. In simulated trials of 50 patients, the machine-learning model…

-

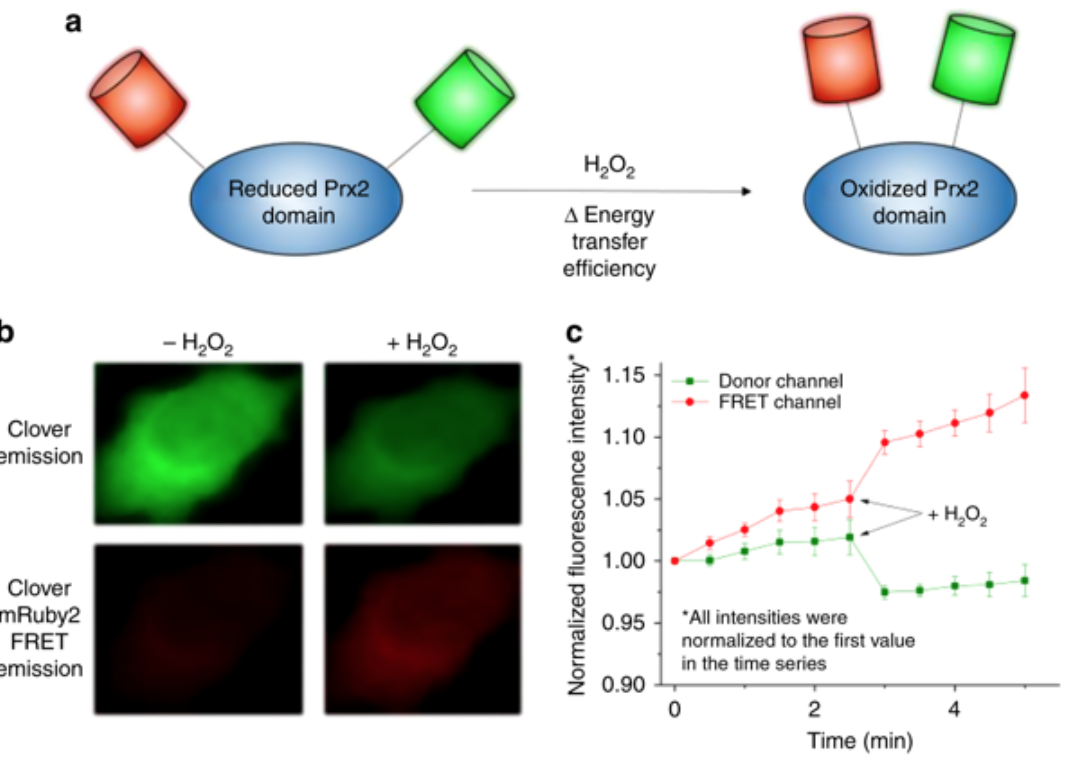

Hydrogen peroxide sensor to determine effective chemotherapy

MIT’s Hadley Sikes has developed a sensor that determines whether cancer cells respond to a particular type of chemotherapy by detecting hydrogen peroxide inside human cells. The technology could help identify new cancer drugs that boost levels of hydrogen peroxide, which induces programmed cell death. The sensors could also be adapted to screen individual patients’…

-

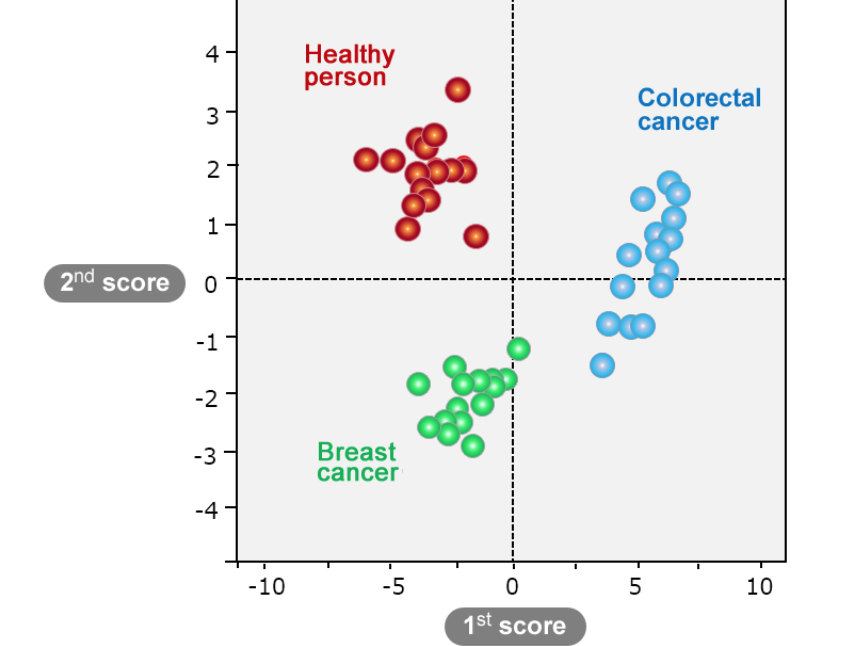

Urine test for cancer biomarkers

Minoru Sakairi and Hitachi scientists have developed a urine test for early cancer detection that analyzes 5,000 types of metabolites in urine for cancer biomarkers. The team began a study three years ago, which identified 30 metabolites that can be used to discriminate between healthy people and cancer patients. Further validation studies…

-

Mary Lou Jepsen on wearable MRI + telepathy | ApplySci @ Stanford

Mary Lou Jepsen discusses wearable MRI and holography-based telepathy at ApplySci’s Wearable Tech + Digital Health + Neurotech Silicon Valley conference, February 26-27, 2018, at Stanford University. Join ApplySci at the 9th Wearable Tech + Digital Health + Neurotech Boston conference, September 25, 2018, at the MIT Media Lab.

-

Remote photodynamic therapy targets inner-organ tumors

NUS researchers Zhang Yong and John Ho have developed a tumor-targeting method that remotely delivers light for photodynamic therapy (PDT). The tiny, wireless, implanted device delivers doses of light over a long period in a programmable and repeatable manner. PDT is usually limited to surface diseases because light penetrates poorly through biological tissue. This remote…

-

AI detects bowel cancer in less than 1 second in small study

Yuichi Mori and Showa University colleagues have used AI to identify bowel cancer in less than a second by analyzing polyps found during colonoscopy. The system compares a magnified view of a colorectal polyp with 30,000 endocytoscopic images. The researchers claimed 86% accuracy, based on a study of 300 polyps. While further testing the technology, Mori said that…

-

Machine learning improves breast cancer detection

MIT’s Regina Barzilay has used AI to improve breast cancer detection and diagnosis. Machine learning tools predict if a high-risk lesion identified on needle biopsy after a mammogram will upgrade to cancer at surgery, potentially eliminating unnecessary procedures. In current practice, when a mammogram detects a suspicious lesion, a needle biopsy is performed to determine if…

-

CRISPR platform targets RNA and DNA to detect cancer, Zika

Broad and Wyss scientists have used an RNA-targeting CRISPR enzyme to detect the presence of as little as a single target molecule. SHERLOCK (Specific High Sensitivity Enzymatic Reporter UnLOCKing) could one day be used to respond to viral and bacterial outbreaks, monitor antibiotic resistance, and detect cancer. Demonstrated applications included: Detecting the presence of Zika virus in…

-

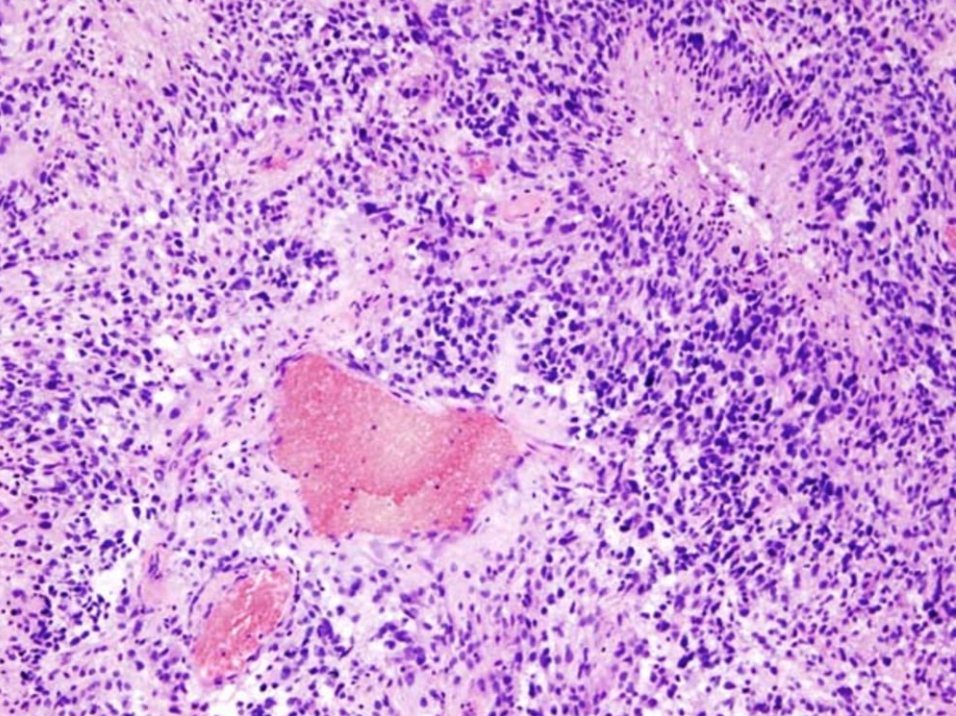

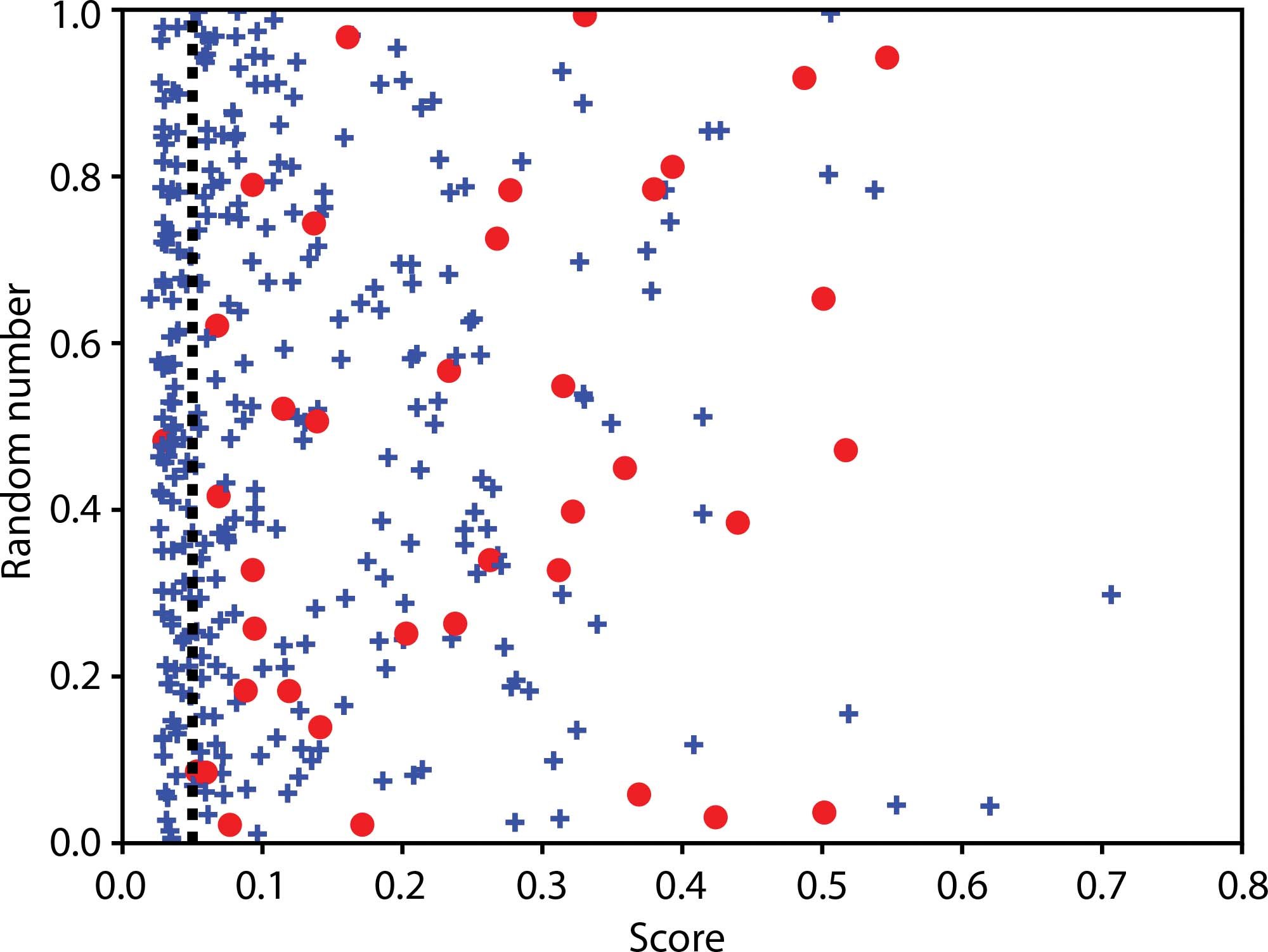

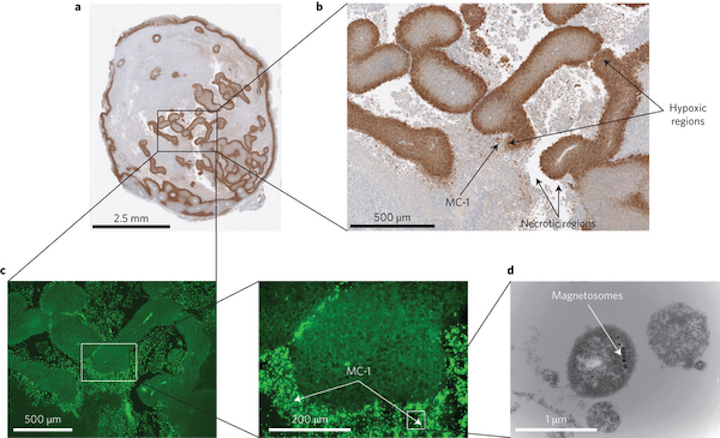

Nanorobots optimize cancer drug delivery, preserve surrounding tissues

Sylvain Martel and colleagues at Polytechnique Montréal and McGill University have developed nanorobotic agents that can specifically target the active cancerous cells of tumors. Optimal targeting could help preserve surrounding organs and healthy tissues, and allow reduced dosage. The nanorobotic agents can autonomously detect oxygen-depleted tumor areas and deliver the drug to them. These “hypoxic” zones are…