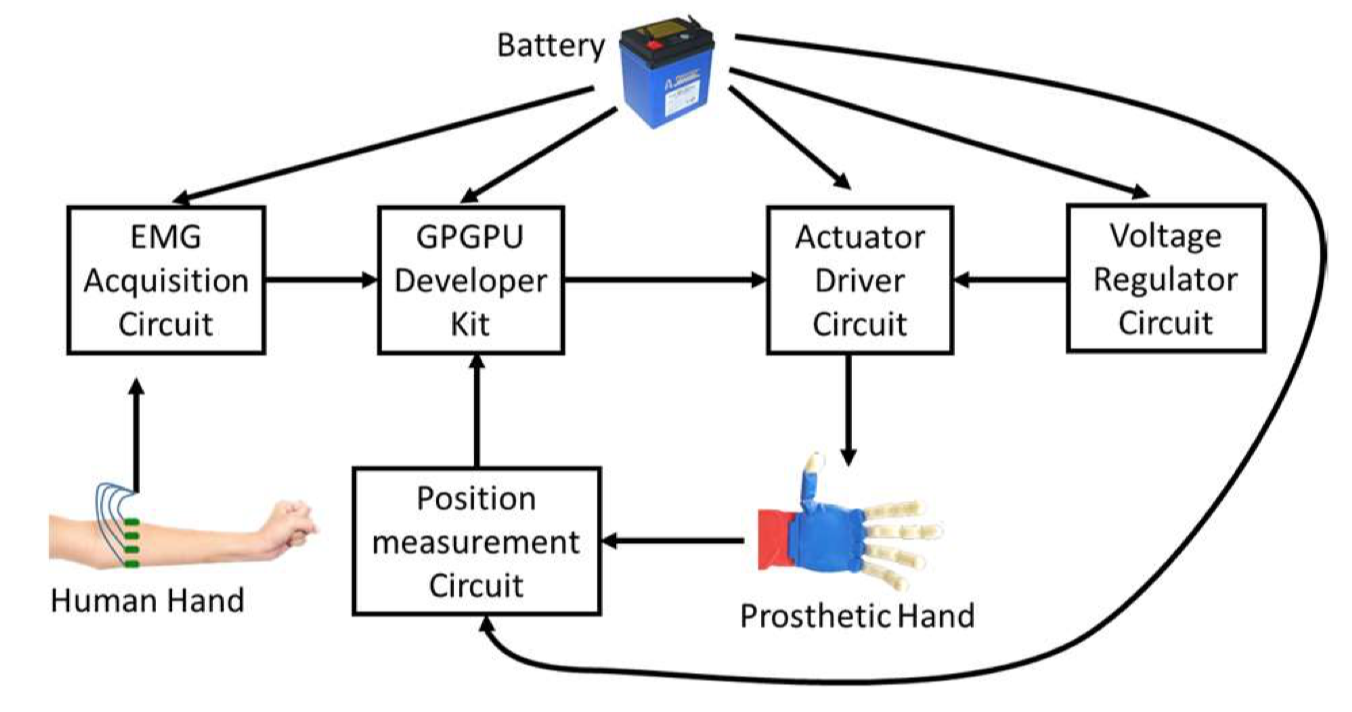

UT Dallas researchers Mohsen Jafarzadeh, Yonas Tadesse, and colleagues are using AI to control prosthetic hands directly from raw EMG (electromyography) signals. Their real-time convolutional neural network requires no preprocessing and delivers faster, more accurate classification of the signals, as well as faster hand movements. The system can also be retrained on an individual user's data to personalize its actions.
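To make "no preprocessing" concrete, here is a minimal sketch of how a 1D convolutional network can take a raw, multi-channel EMG window straight into its first layer, with no filtering or hand-crafted features. The architecture (one convolution with ReLU, global average pooling, a linear layer, softmax), the helper names `conv1d_relu`, `global_avg_pool`, and `classify`, and all sizes are hypothetical choices for illustration, not the network the UT Dallas team built. The weights are random and untrained; personalizing the model would mean updating them on a user's own labeled recordings, which this sketch omits.

```python
import math
import random
from typing import List

Matrix = List[List[float]]  # [channels][samples]


def conv1d_relu(x: Matrix, weights: List[Matrix], bias: List[float]) -> Matrix:
    """Valid 1D convolution (cross-correlation) followed by ReLU.

    x: raw EMG window, one row per electrode channel, no filtering or features.
    weights: one [in_channels][kernel] matrix per output filter (hypothetical sizes).
    """
    n_samples = len(x[0])
    k = len(weights[0][0])
    out = []
    for w, b in zip(weights, bias):
        row = []
        for t in range(n_samples - k + 1):
            s = b
            for c, channel in enumerate(x):
                for j in range(k):
                    s += w[c][j] * channel[t + j]
            row.append(max(0.0, s))  # ReLU
        out.append(row)
    return out


def global_avg_pool(x: Matrix) -> List[float]:
    """Average each filter's activations over time: one feature per filter."""
    return [sum(row) / len(row) for row in x]


def softmax(z: List[float]) -> List[float]:
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]


def classify(window: Matrix, conv_w, conv_b, fc_w, fc_b) -> List[float]:
    """Forward pass: raw EMG -> conv + ReLU -> pool -> linear -> class probabilities."""
    feats = global_avg_pool(conv1d_relu(window, conv_w, conv_b))
    logits = [b + sum(wi * f for wi, f in zip(row, feats)) for row, b in zip(fc_w, fc_b)]
    return softmax(logits)


# Hypothetical toy sizes with untrained random weights (e.g. 3 grip classes).
rng = random.Random(0)
CHANNELS, SAMPLES, FILTERS, KERNEL, CLASSES = 2, 50, 4, 5, 3
window = [[rng.gauss(0.0, 1.0) for _ in range(SAMPLES)] for _ in range(CHANNELS)]
conv_w = [[[rng.gauss(0.0, 0.3) for _ in range(KERNEL)] for _ in range(CHANNELS)]
          for _ in range(FILTERS)]
conv_b = [0.0] * FILTERS
fc_w = [[rng.gauss(0.0, 0.3) for _ in range(FILTERS)] for _ in range(CLASSES)]
fc_b = [0.0] * CLASSES

probs = classify(window, conv_w, conv_b, fc_w, fc_b)
print([round(p, 3) for p in probs])
```

Because the convolution filters operate on raw samples, the band-pass filtering and feature extraction that classic EMG pipelines do by hand are, in principle, learned as part of training instead.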

Join ApplySci at the 12th Wearable Tech + Digital Health + Neurotech Boston conference on November 14, 2019 at Harvard Medical School featuring talks by Brad Ringeisen, DARPA – Joe Wang, UCSD – Carlos Pena, FDA – George Church, Harvard – Diane Chan, MIT – Giovanni Traverso, Harvard | Brigham & Women's – Anupam Goel, UnitedHealthcare – Nathan Intrator, Tel Aviv University | Neurosteer – Arto Nurmikko, Brown – Constance Lehman, Harvard | MGH – Mikael Eliasson, Roche – Nicola Neretti, Brown

Join ApplySci at the 13th Wearable Tech + Neurotech + Digital Health Silicon Valley conference on February 11-12, 2020 on Sand Hill Road featuring talks by Zhenan Bao, Stanford – Rudy Tanzi, Harvard – Shahin Farshchi, Lux Capital – Sheng Xu, UCSD – Carla Pugh, Stanford – Nathan Intrator, Tel Aviv University | Neurosteer – Wei Gao, Caltech