Scientist-led conferences at Harvard, Stanford and MIT

-

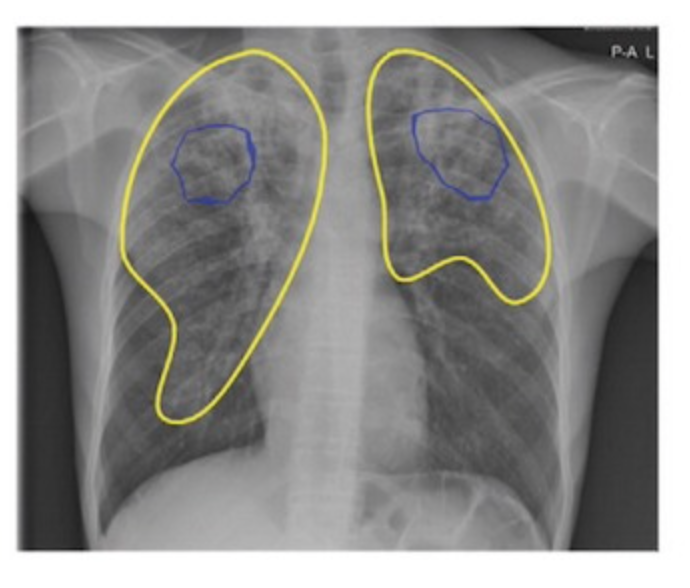

Google AI detects tuberculosis

Google’s deep learning technology detected tuberculosis with accuracy similar to that of radiologists, according to a study published in Radiology. The researchers used 165,174 chest radiographs from 22,284 patients in four countries. In detecting active tuberculosis, the system’s sensitivity was higher than that of nine radiologists (88 percent versus 75 percent), and its specificity was noninferior (79 percent versus 84 percent). Costs were…

-

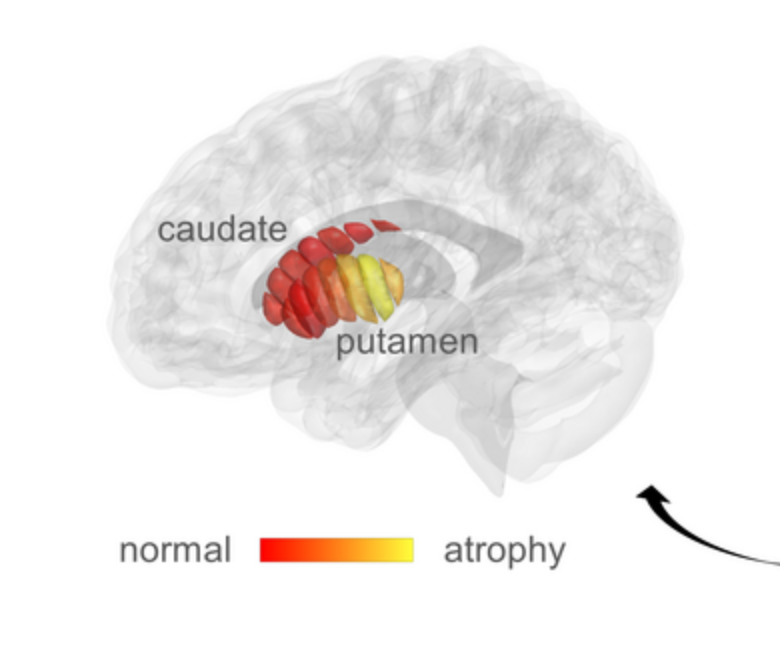

qMRI for early detection of Parkinson’s disease

Aviv Mezer and Hebrew University colleagues used quantitative MRI to identify cellular changes in Parkinson’s disease. Their method let them examine microstructures in the striatum, a region known to deteriorate as the disease progresses. A novel algorithm developed by Elior Drori revealed biological changes in the striatum, which were associated with early stage…

-

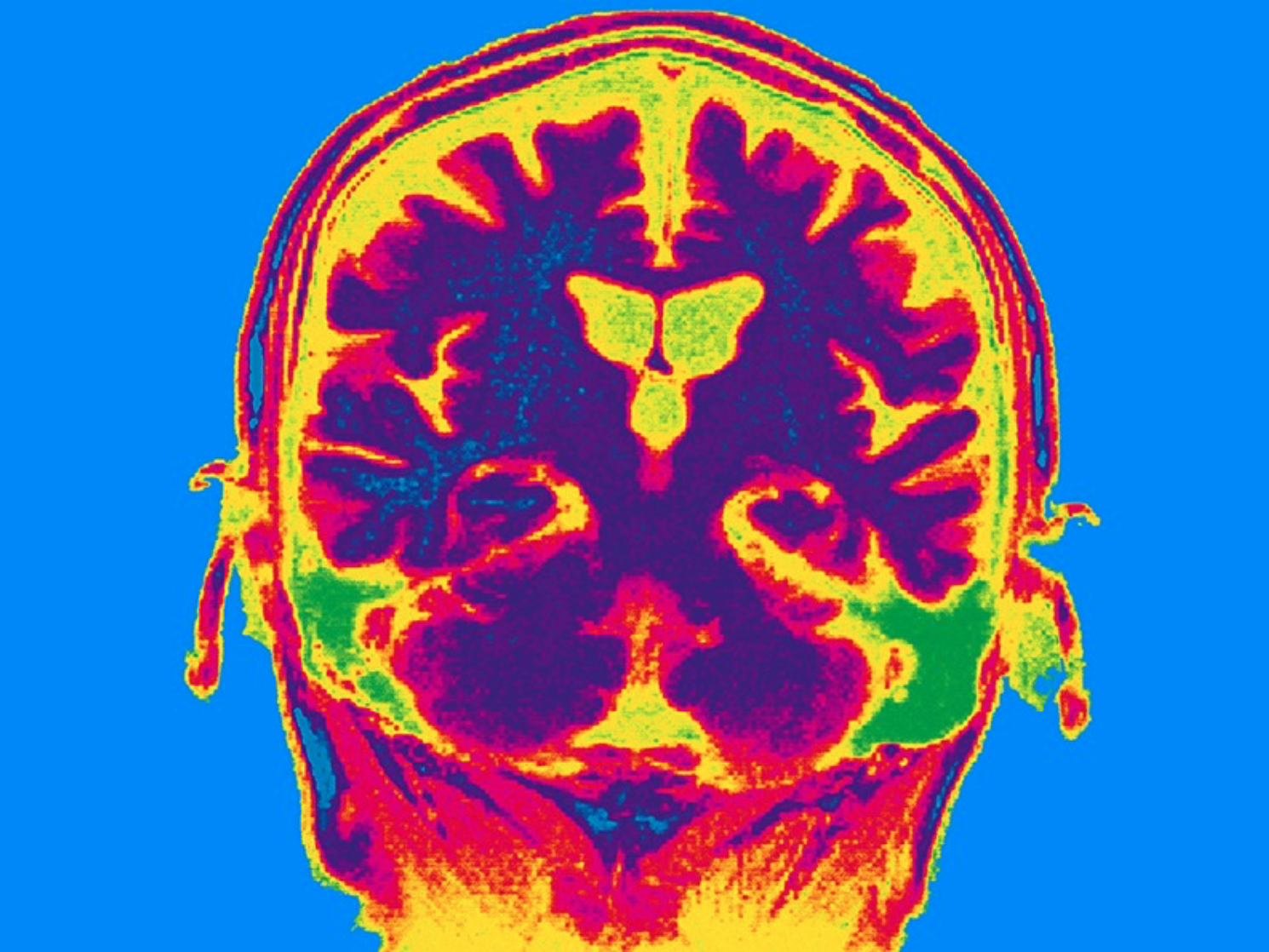

Non-invasive stimulation improves memory in study

In a recent study, Boston University professor Robert Reinhart used transcranial alternating current stimulation (tACS) to stimulate brain activity in 150 people aged 65 to 88, producing memory improvements that lasted one month. Stimulating the dorsolateral prefrontal cortex improved long-term memory, while stimulating the inferior parietal lobe with low-frequency electrical currents boosted working memory. Participants were asked to recall 20 words…

-

Neural network assesses sleep patterns for passive Parkinson’s diagnosis

MIT’s Dina Katabi has developed a non-contact, neural network-based system that detects Parkinson’s disease while a person sleeps. By assessing nocturnal breathing patterns, its algorithms detect the disease and track its progression every night, at home. A device in the bedroom emits radio signals, analyzes their reflections off the surrounding…

-

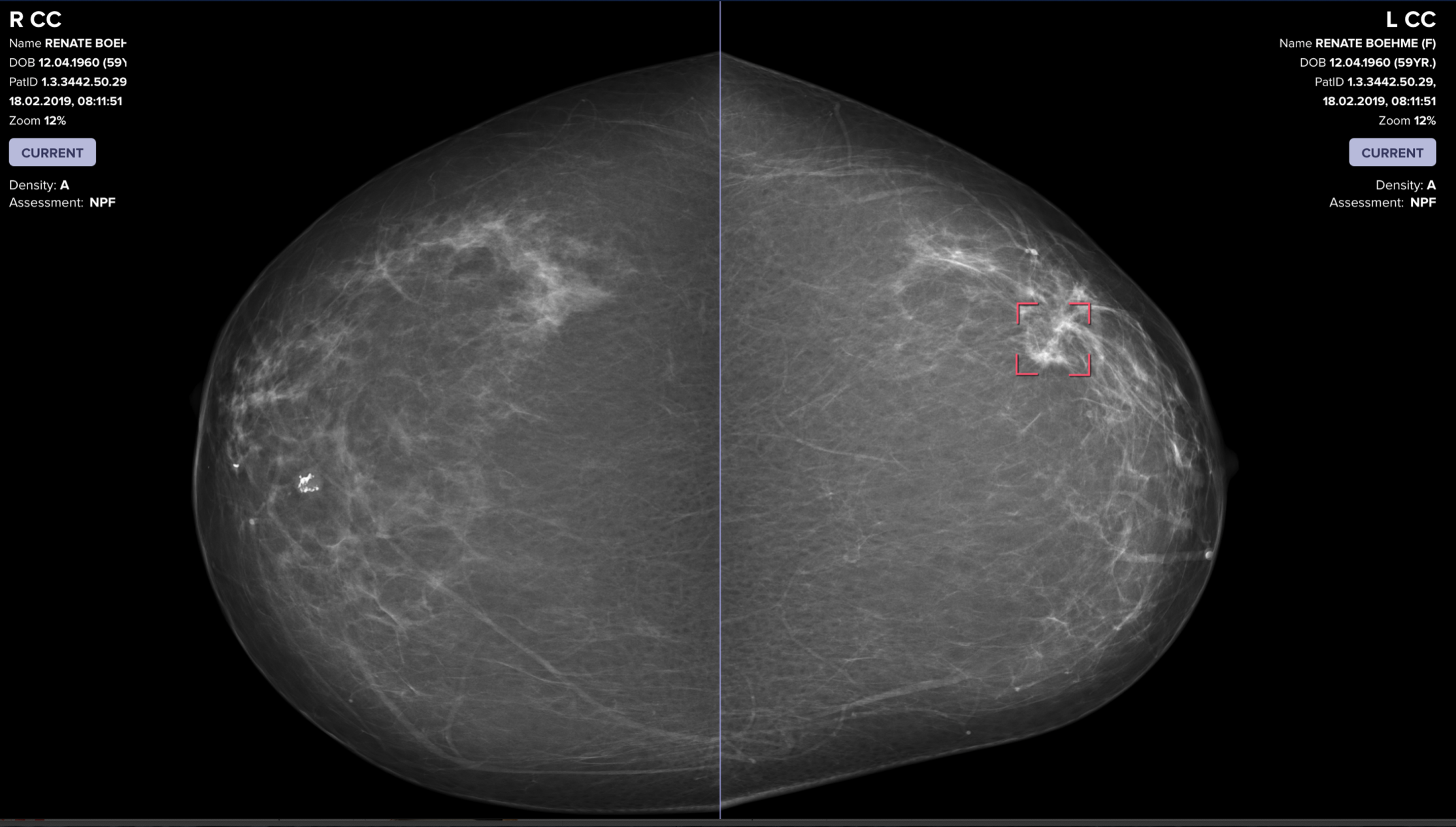

AI catches breast cancer earlier, more often than traditional screening alone

The mammography screening paradigm has not changed since the 1960s. Breast screening AI company Vara, together with Essen University Hospital and Memorial Sloan Kettering, published a study showing that radiologists assisted by AI screen for breast cancer more effectively. The hope is that AI systems could detect cancers that doctors miss, provide better care…

-

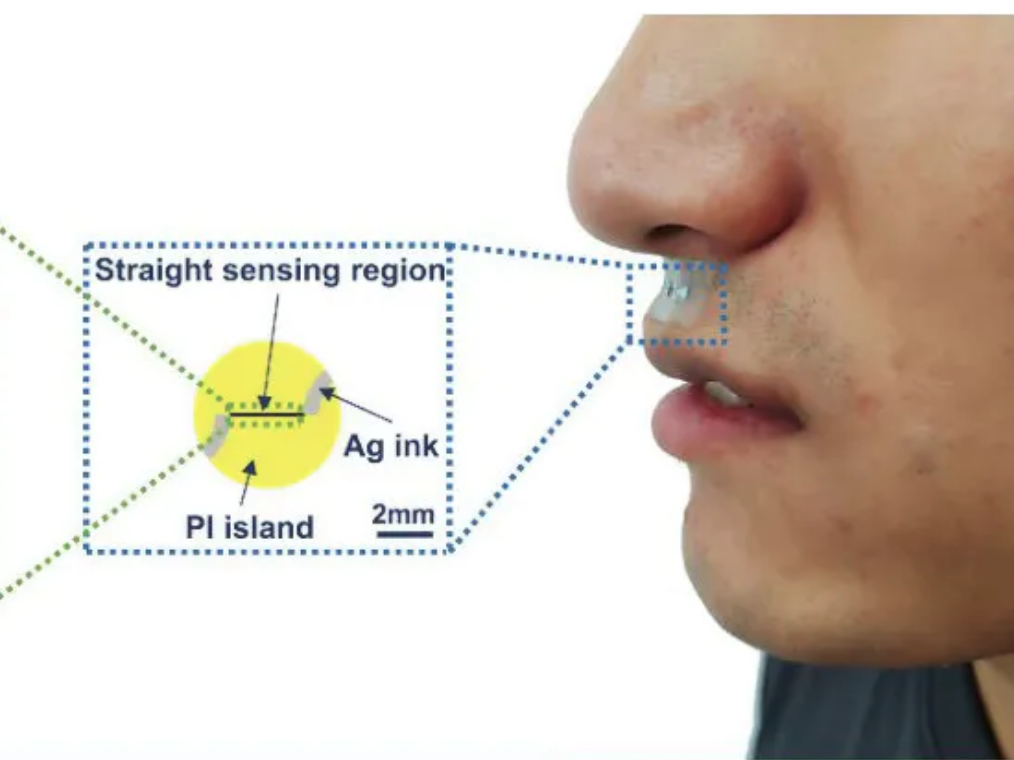

Small sticker-sensor continuously analyzes breath for broad health monitoring

Hebei University, Tianjin Hospital, Beihang University, and Penn State researchers have developed a stretchable, skin-friendly, waterproof sensor, worn under the nose, that analyzes breath for health monitoring. It could be used for multiple-condition screening, asthma and COPD management, or environmental hazard sensing, among other applications. The functional gas sensor, in a moisture-resistant membrane, can operate in humid…

-

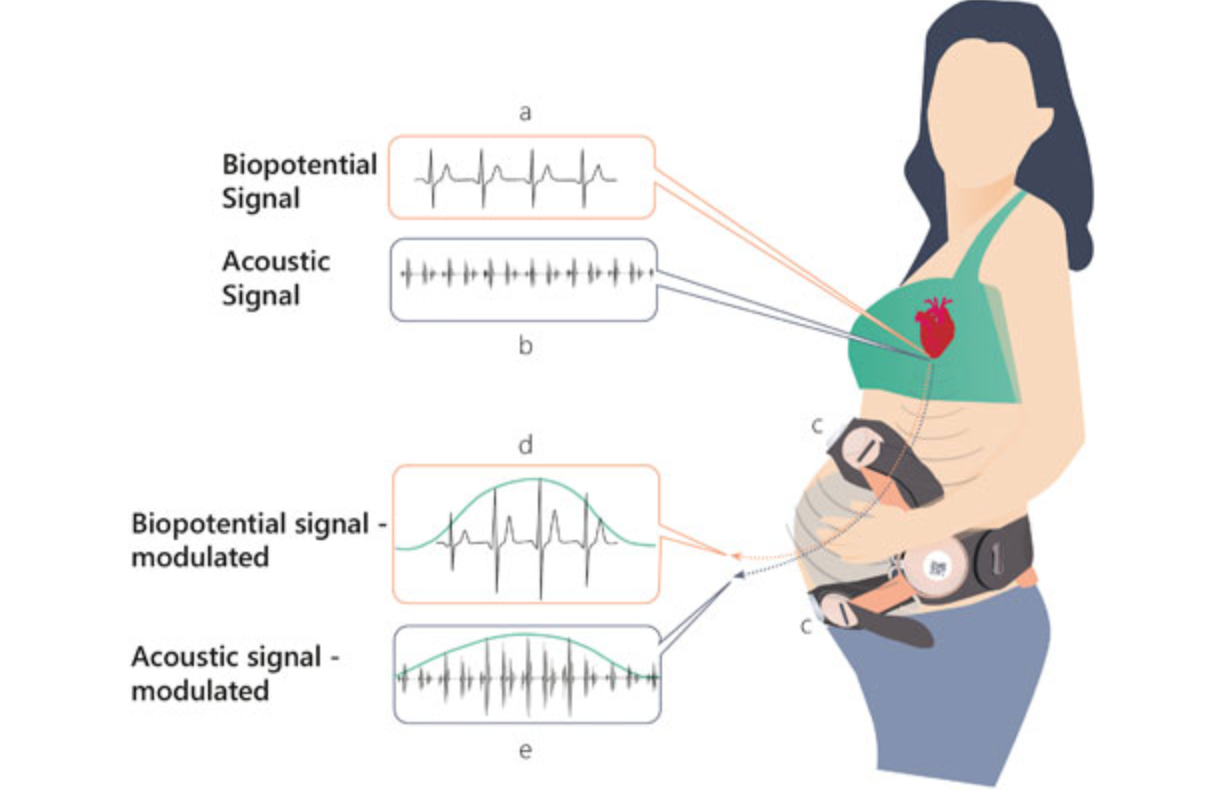

Remote, non-invasive pregnancy monitor tracks uterine activity

Uterine activity monitoring is essential to pregnancy management. Current methods are either invasive or have accuracy that is compromised by obesity, maternal movement, or belt positioning. Pregnancy-monitoring company Nuvo has published a study showing that its cardiac-derived algorithm for uterine monitoring was more accurate than the TOCO (tocodynamometry) standard of care in 150 patients. This remote, non-invasive detection…

-

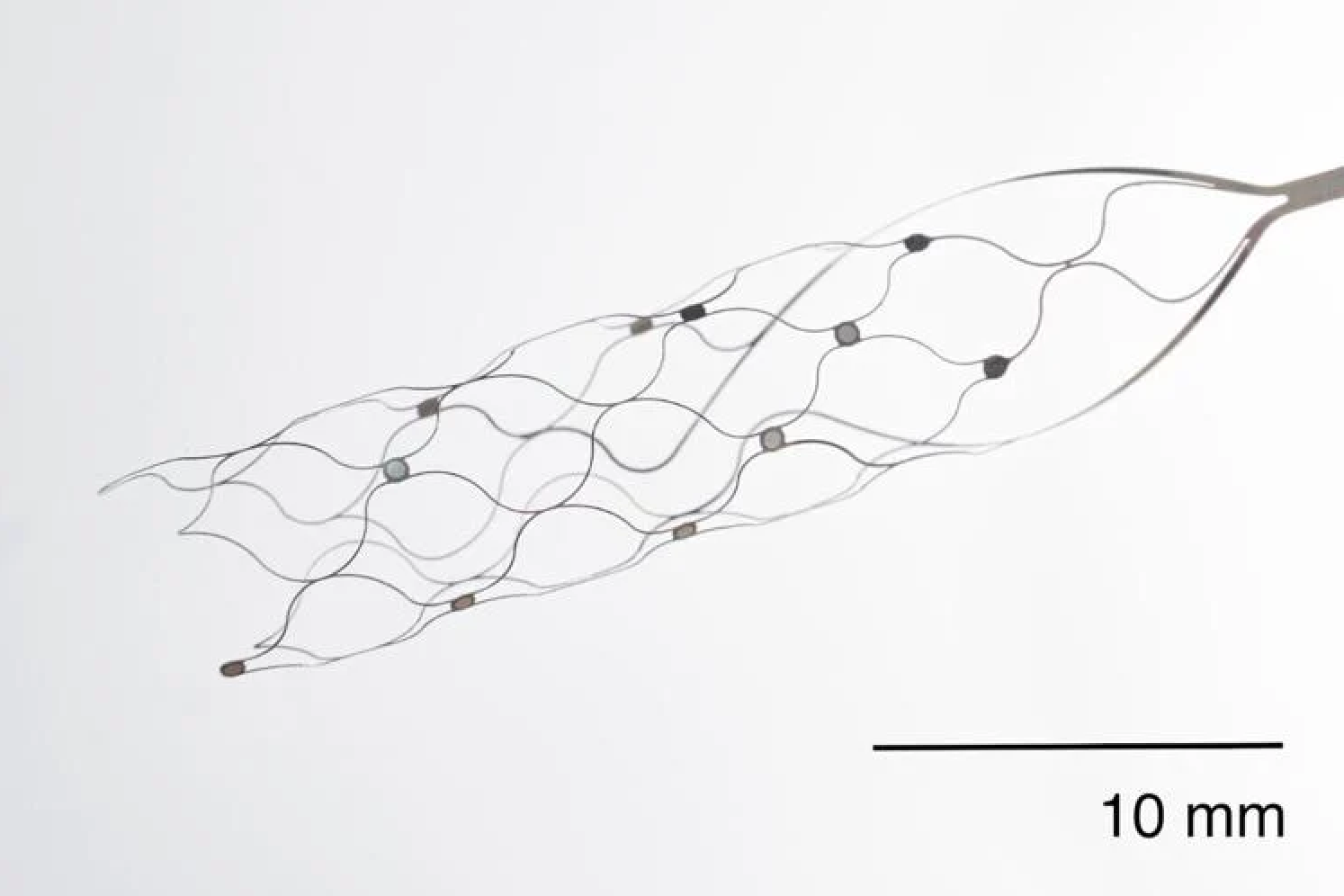

First US patient receives Synchron endovascular BCI implant

On July 6, 2022, Mount Sinai’s Shahram Majidi threaded Synchron’s 1.5-inch-long wire-and-electrode implant into a blood vessel in the brain of a patient with ALS. The goal is for the patient, who cannot speak or move, to surf the web and communicate by email and text using only his thoughts. Four…

-

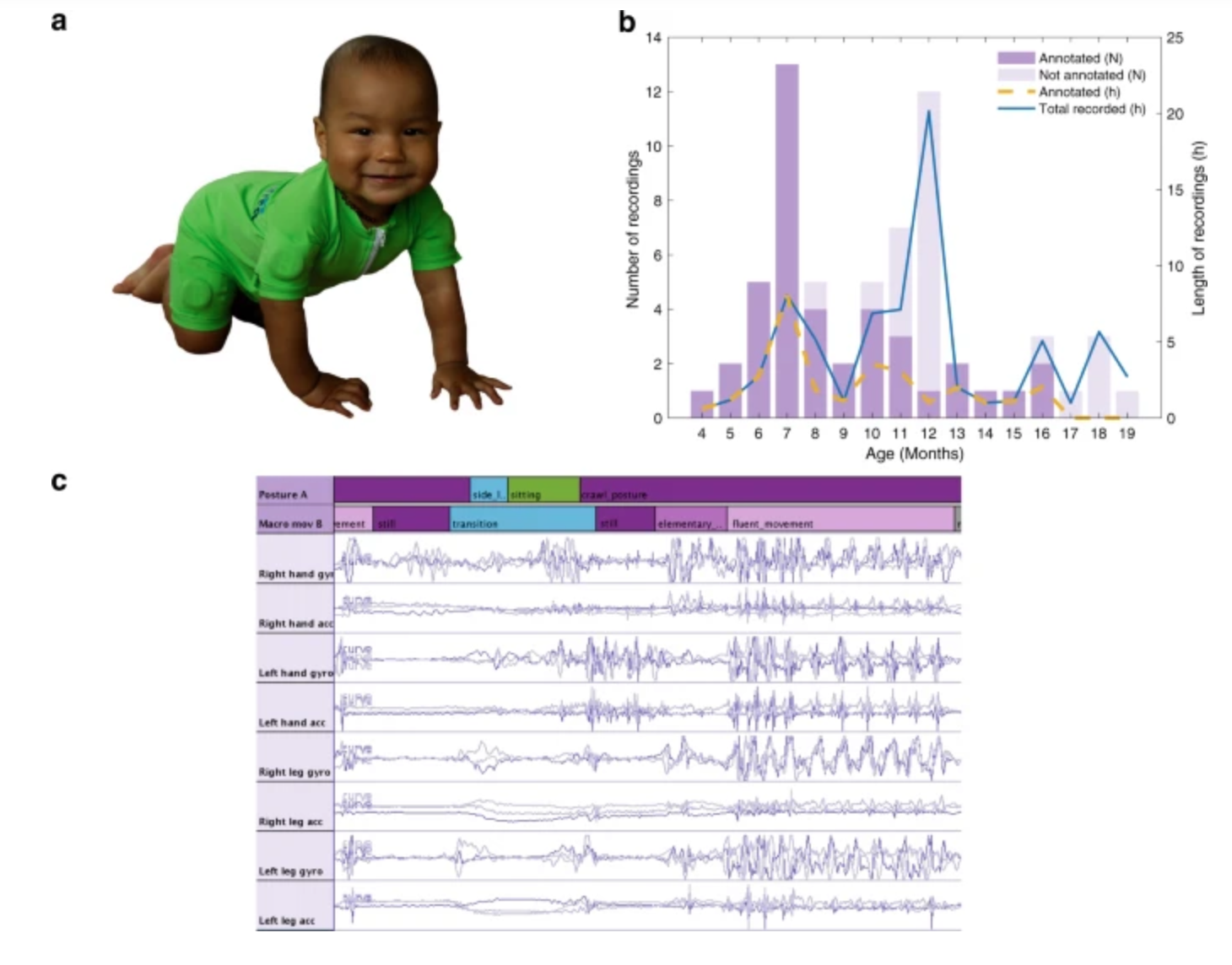

Sensor jumpsuit monitors infant motor abilities

Sampsa Vanhatalo, Manu Airaksinen and University of Helsinki colleagues have developed MAIJU (Motor Assessment of Infants with a Jumpsuit), a wearable onesie with multiple movement sensors that they believe can predict a child’s neurological development. In a recent study, infants aged 5 to 19 months were monitored with MAIJU during spontaneous playtime. Initially, infant…

-

Continuous, cuffless blood pressure monitoring via graphene tattoo

Deji Akinwande, Roozbeh Jafari, and UT Austin colleagues have developed an electronic wrist tattoo that can be worn for hours and delivers highly accurate, continuous blood pressure measurements. This can provide a much clearer picture of a person’s health than occasional cuff-based measurements at a physician’s office or at home. Smart watches are not…

-

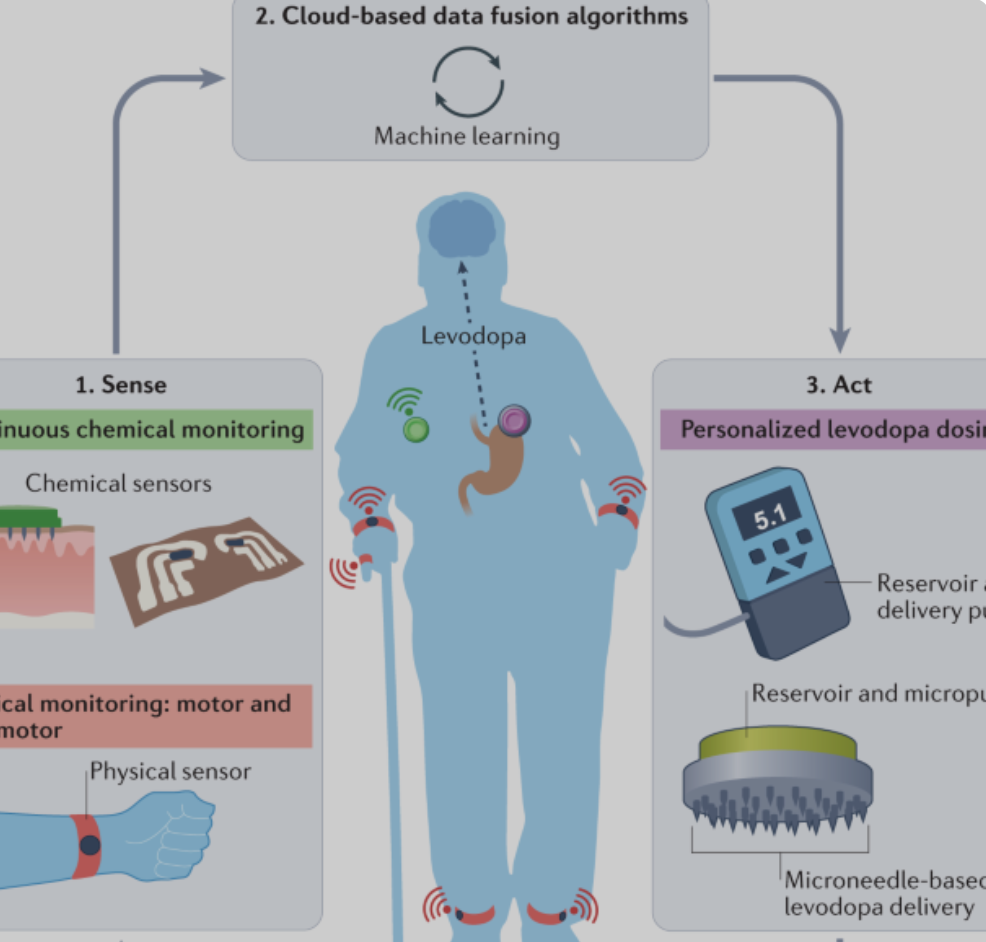

Joe Wang’s closed-loop levodopa delivery and monitoring system for Parkinson’s disease

Patients with early Parkinson’s disease benefit significantly from levodopa, which replaces dopamine and restores normal motor function. As PD progresses, the brain loses more dopamine-producing cells, causing motor complications and unpredictable responses to levodopa. Doses must be increased over time and given at shorter intervals. Regimens are different for each person and may vary from…

-

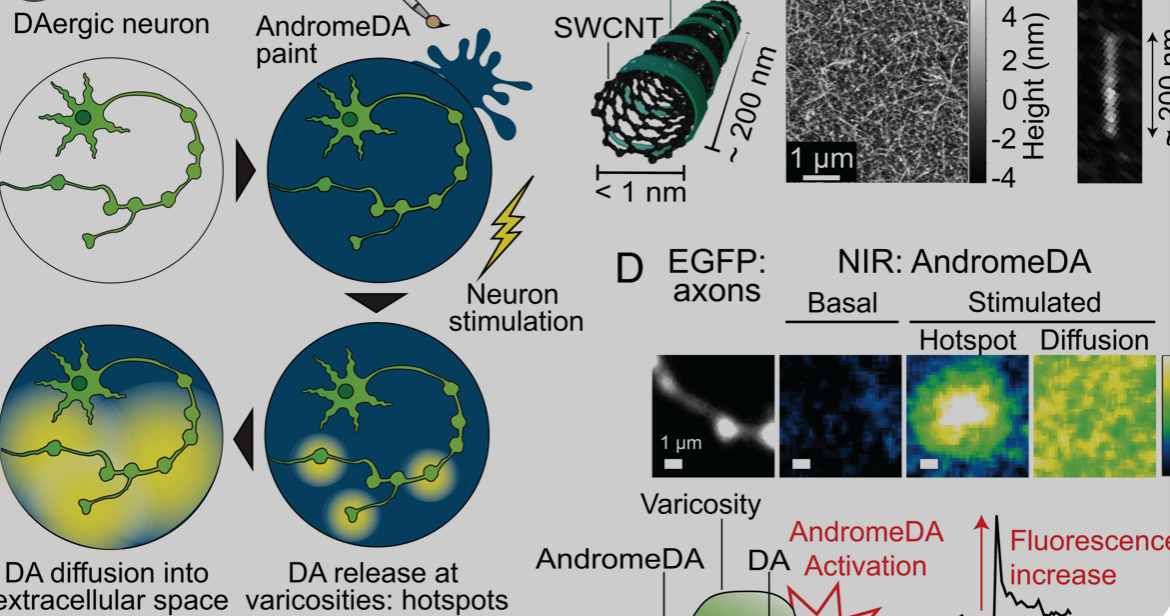

Carbon nanotube sensor precisely measures dopamine

Ruhr University professor Sebastian Kruss, with Max Planck researchers Sofia Elizarova and James Daniel, has developed a sensor that can visualize the release of dopamine from nerve cells with unprecedented resolution. The team used modified carbon nanotubes that glow brighter in the presence of the neurotransmitter dopamine. Elizarova said that the sensor “provides new…