Scientist-led conferences at Harvard, Stanford and MIT

-

NFC-powered ultra-thin health monitoring patch

Powering wearables for efficient, long-term, continuous use remains a challenge. Illinois professor John Rogers has, again, disrupted himself. His new stretchable, ultra-thin health monitoring patches are wirelessly powered via smartphone near field communication (NFC). This enables the devices to be 5 to 10 times thinner than before, increasing comfort and therefore the willingness of people to wear…

-

GSK/Verily “bioelectronic medicine” partnership for disease management

Galvani Bioelectronics is a Verily/GSK company created to accelerate the research, development and commercialization of bioelectronic medicines. The goal is to manage chronic diseases, such as arthritis, diabetes and asthma, using miniaturized electronics. Implanted devices would modify the electrical signals that pass along nerves, including the irregular impulses that occur in illness. Initial work will…

-

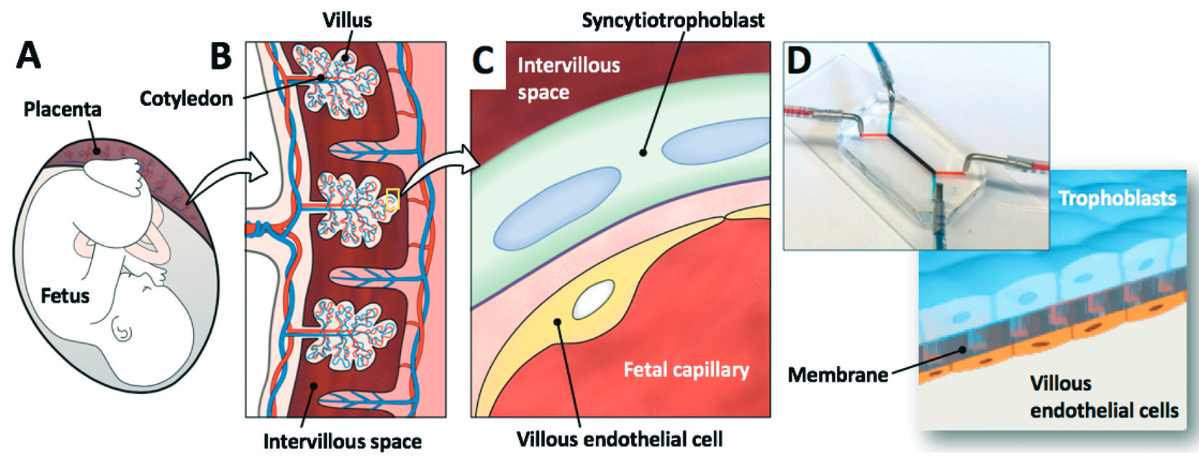

“Placenta on a chip” to study preterm birth

Penn researchers have developed a “placenta-on-a-chip” to model the transport of nutrients across the placental barrier. It will be used in studies to identify the causes of, and ways to prevent, dangerous preterm birth. (Lungs, intestines, and eyes “on chips” are similarly being used for research.) Studies of the underlying mechanisms of preterm birth currently rely on…

-

Robot assesses, assists dementia patients

Ludwig is a University of Toronto-built robot meant to assist seniors with cognitive issues. “He” stands in front of a person, displays a picture on a screen, and asks the viewer to describe what he or she sees. Ludwig then assesses the user’s condition, including engagement, happiness or anxiety, and tracks behavior changes over time.…

-

Keith Black on tumor treatment innovation, early Alzheimer’s detection, predictive medicine

Keith Black, MD, Chairman and Professor, Department of Neurosurgery at Cedars-Sinai, was a keynote speaker at ApplySci’s recent NeuroTech San Francisco conference. In an interview with StartUp Health’s Unity Stoakes at the event, he discussed brain tumor treatment innovation, early Alzheimer’s diagnosis, wearables, and predictive medicine. Dr. Black’s brilliance is equaled only…

-

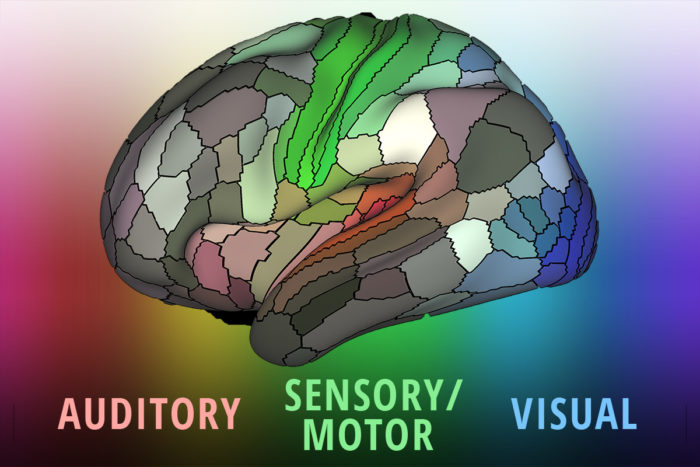

MRI, fMRI, task-based MRI and diffusion imaging combined for highly precise brain map

A newly published brain map more than doubles the number of distinct areas known in the human cortex, from 83 to 180. It combines data from four imaging technologies to bring high definition to brain scanning. Washington University’s Matthew Glasser and David Van Essen led the global research team. The brains of 1,200 young adults were scanned using…

-

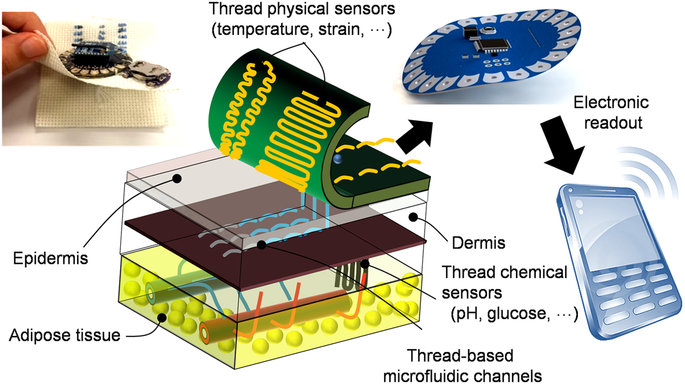

Implanted thread provides real-time diagnostic data

Tufts University researchers have created a thread-based diagnostic platform that provides real-time health data for implanted devices and wearables. Nano-scale sensors, electronics and microfluidics integrated into the thread can be sutured through multiple layers of tissue. The threads collect measures of tissue health (pressure, stress, strain and temperature) as well as pH and glucose levels, and transmit the results wirelessly. The system can be used…

-

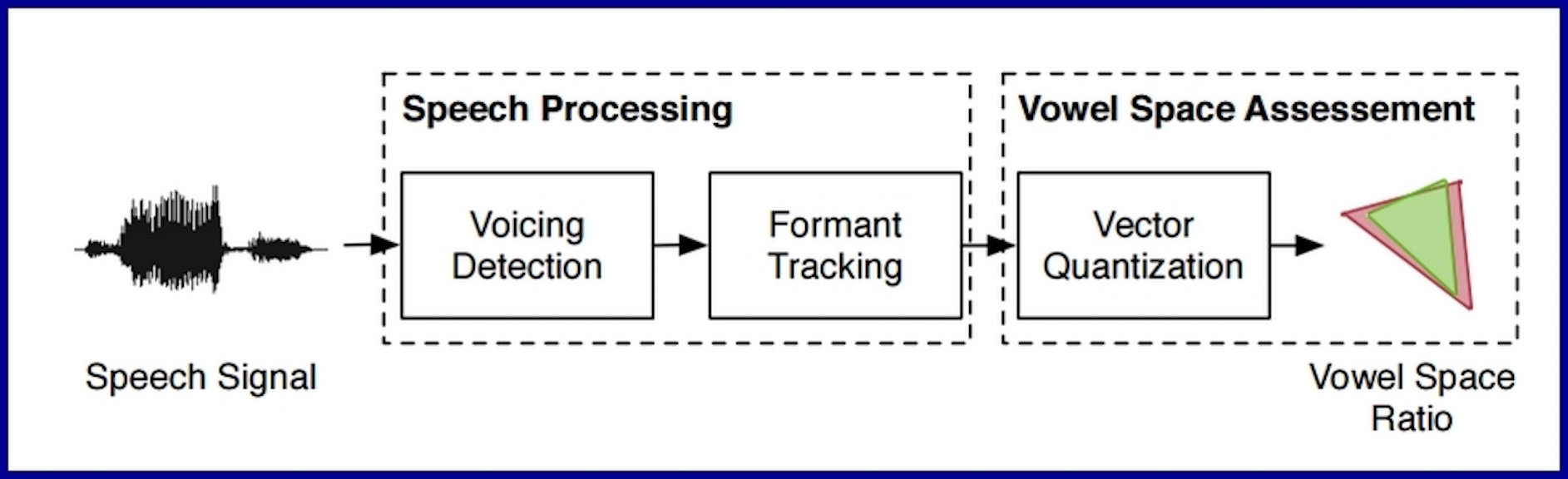

Algorithm detects depression in speech

USC researchers are using machine learning to diagnose depression based on speech patterns. During interviews, SimSensei detected reductions in vowel expression that human interviewers might miss. These depression-associated speech variations have been documented in previous studies. The speech of depressed patients can be flat and monotonous, with reduced variability. Reduced speech, reduced articulation rate, increased pause duration,…

-

Andreas Weigend on Data for the People

Of all the data we create and share, perhaps none is more important, or more sensitive, than data about our health. The wearable tech revolution has given us, as patients and individuals, control, but we must also think about what we, and others, do with the data we collect. Andreas Weigend,…

-

AI robot learns ward procedures, advises nurses

Julie Shah and MIT CSAIL colleagues have developed a robot to assist labor nurses. The AI-driven assistant learns from people how the unit works and can then make care recommendations, including scheduling and patient movement. Labor nurses attempt to predict the arrival and length of labor, and which patients will require a…

-

Immersive media system reduces pre-surgery anxiety

BERT (Bedside Entertainment and Relaxation Theater) is a non-medical technique used at Lucile Packard Children’s Hospital to reduce stress before surgery. It is meant to be a safer, entertaining alternative to anti-anxiety drugs, which are often given before anesthesia and could affect the recovery process or impact developing brains. BERT is an immersive media experience consisting of a mobile projector and…

-

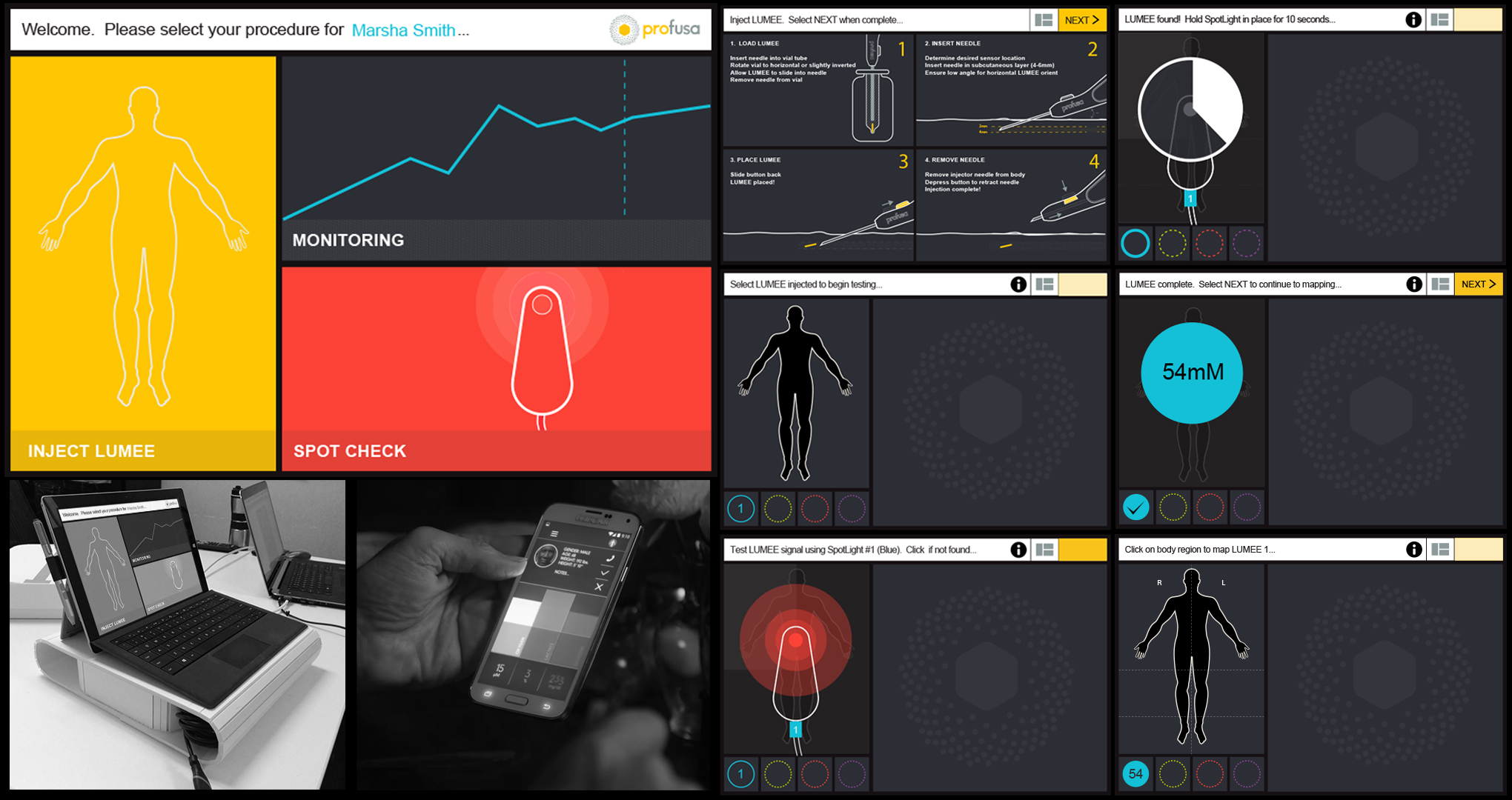

Injectable sensor continuously monitors multiple body chemistries

Profusa’s injectable sensors are designed for the simultaneous, continuous monitoring of multiple body chemistries, including metabolic and dehydration status, ion panels, blood gases, and other biomarkers. The company will initially provide real-time monitoring of soldiers’ health, but its sensors could also be used to manage peripheral artery disease, diabetes or COPD, or to enhance athletic performance. The small,…