Tag: Brain

-

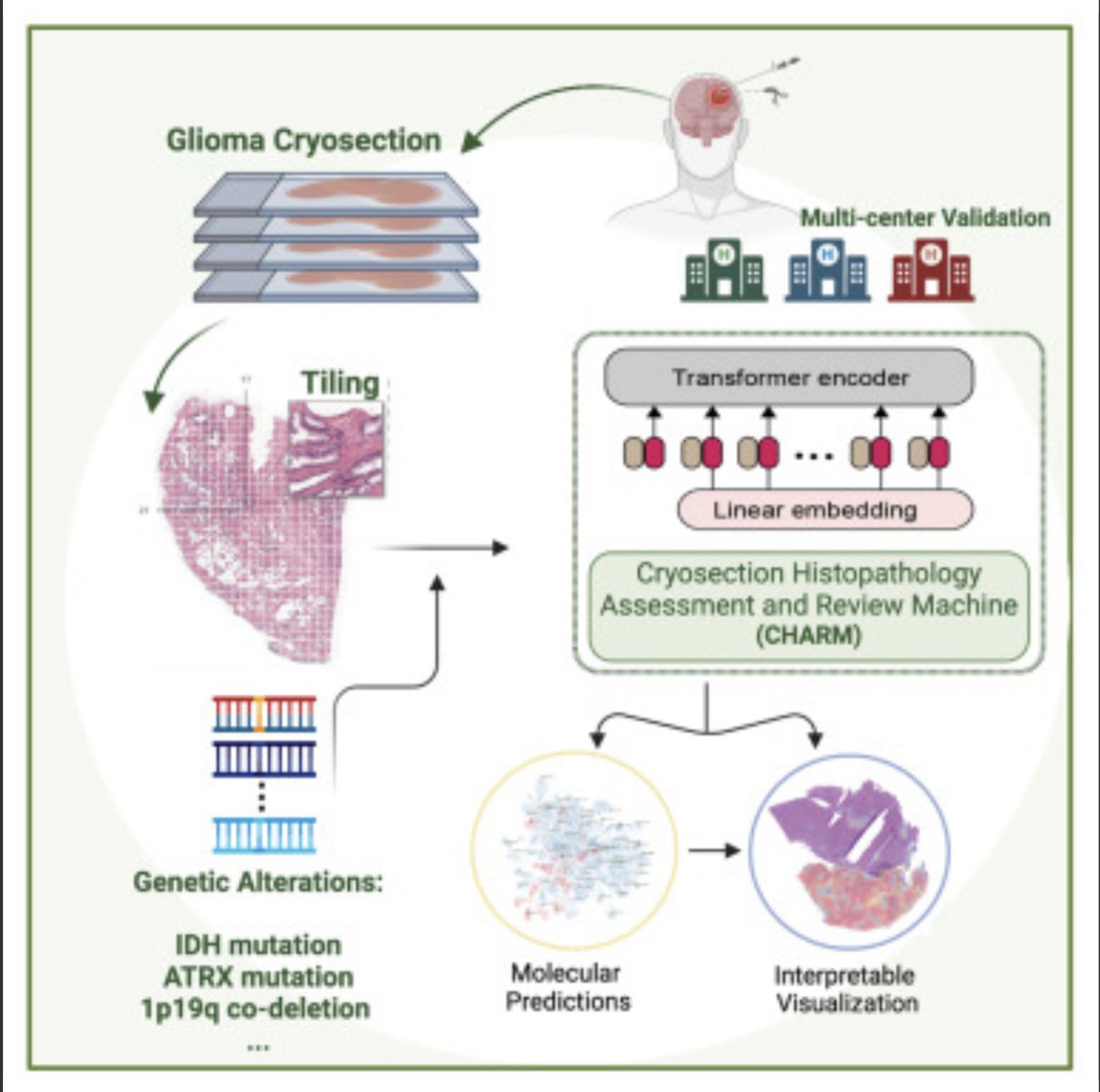

AI decodes brain tumor DNA during surgery

Kun-Hsing Yu and HMS colleagues used AI to rapidly determine a brain tumor’s molecular identity during surgery, propelling the development of precision oncology. The tool is CHARM (Cryosection Histopathology Assessment and Review Machine). Currently, genetic sequencing takes days to weeks. Accurate molecular diagnosis during surgery can help a neurosurgeon decide how much brain tissue…

-

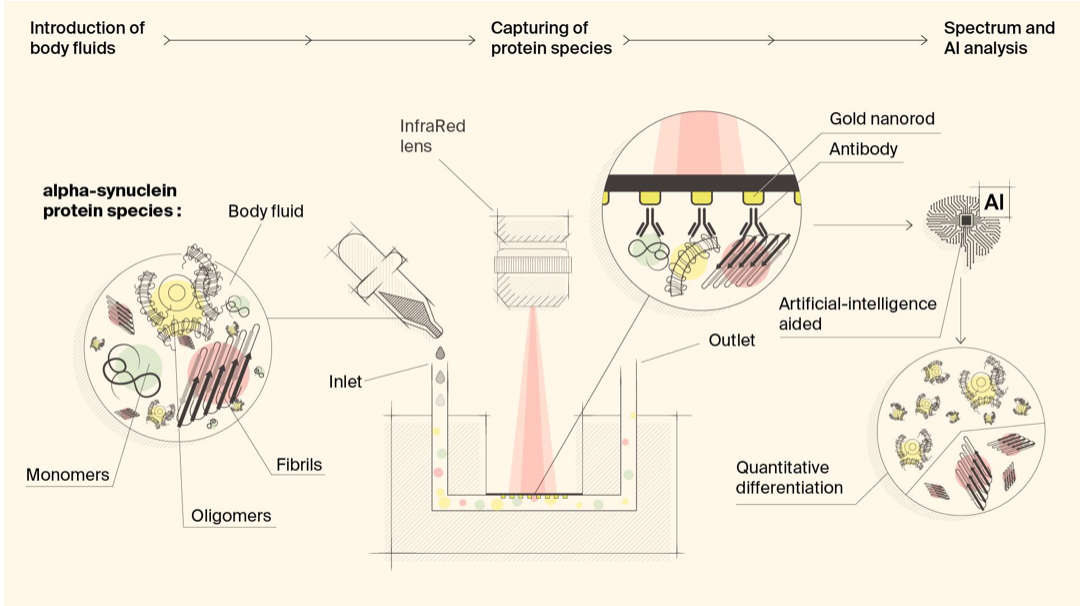

Biosensor detects misfolded proteins in Parkinson’s and Alzheimer’s disease

Hatice Altug, Hilal Lashuel, and EPFL colleagues have developed ImmunoSEIRA, an AI-enhanced biosensing tool for the detection of misfolded proteins linked to Parkinson’s and Alzheimer’s disease. The researchers also claim that neural networks can quantify disease stage and progression. The technology holds promise for early detection, monitoring, and assessing treatment options. Protein misfolding has been…

-

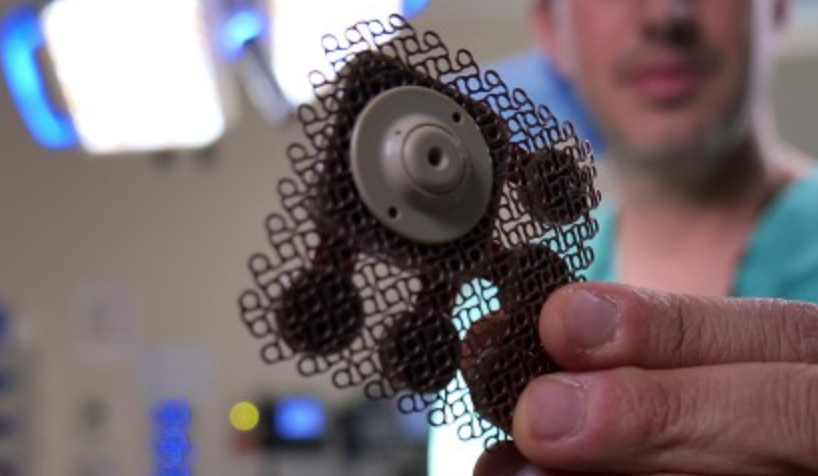

Brain-spine interface allows paraplegic man to walk

EPFL professor Grégoire Courtine has created a “digital bridge” that has allowed a man with paraplegia caused by spinal cord damage to walk. The brain–spine interface builds on previous work, which combined intensive training and a lower spine stimulation implant. Gert-Jan Oskam participated in this trial, but stopped improving after three years. The new…

-

Implanted ultrasound allows powerful chemotherapy drugs to cross the blood-brain barrier

Adam Sonabend and Northwestern colleagues used a skull-implantable ultrasound device to open the blood-brain barrier and repeatedly permeate critical regions of the human brain, to deliver intravenous chemotherapy to glioblastoma patients. This is the first study to successfully quantify the effect of ultrasound-based blood-brain barrier opening on the concentrations of chemotherapy in the human brain.…

-

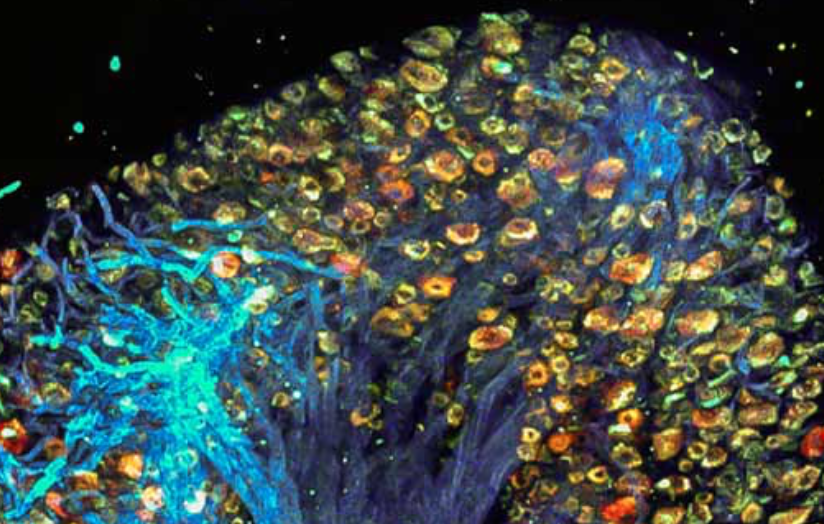

Study: Molecular mechanism of accelerated cognitive decline in women with Alzheimer’s

Hermona Soreq, Yonatan Loewenstein, and Hebrew University of Jerusalem colleagues have uncovered a sex-specific molecular mechanism leading to accelerated cognitive decline in women with Alzheimer’s disease. Current therapeutic protocols are based on structural changes in the brain and aim to delay symptom progression. Women typically experience more severe side effects from these drugs. This research…

-

Music improves working memory in study of seniors

A study led by Damien Marie and UNIGE, HES-SO Geneva, and EPFL colleagues showed the effect of music on working memory decline. 132 healthy retirees from 62 to 78 years of age, who had not taken any music lessons for at least six months, were assigned to two groups: piano practice and active listening. According…

-

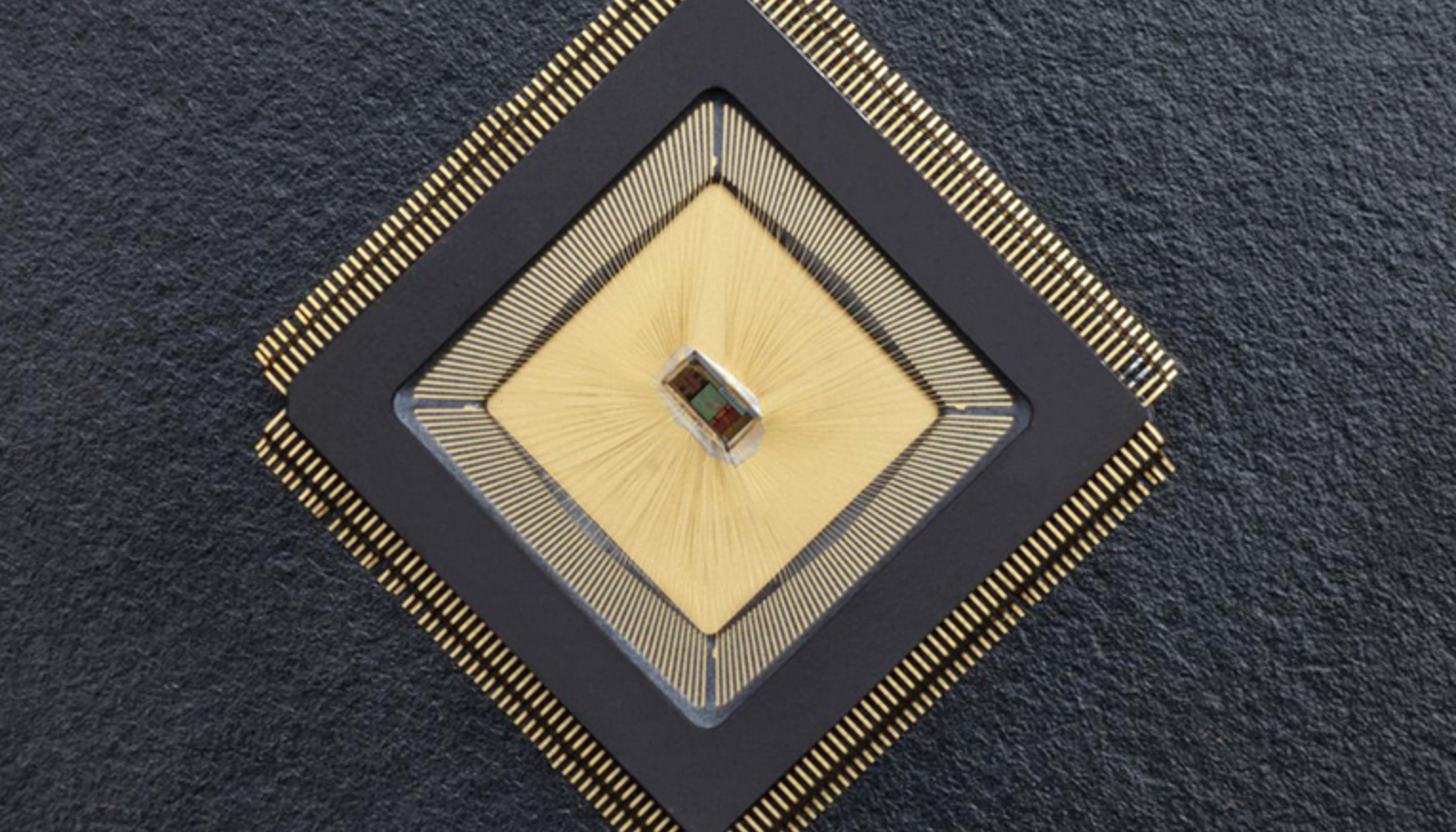

Closed-loop sensor/stimulation system to detect, reduce neurological events

For the first time, there is an autonomous sensing and stimulating unit sitting in a specific brain region and ensuring that neuronal activity in that region is controlled. The closed-loop system is called NeuralTree and was developed by Mahsa Shoaran and EPFL colleagues, to overcome the lack of control caused by epileptic activity or tremor,…

-

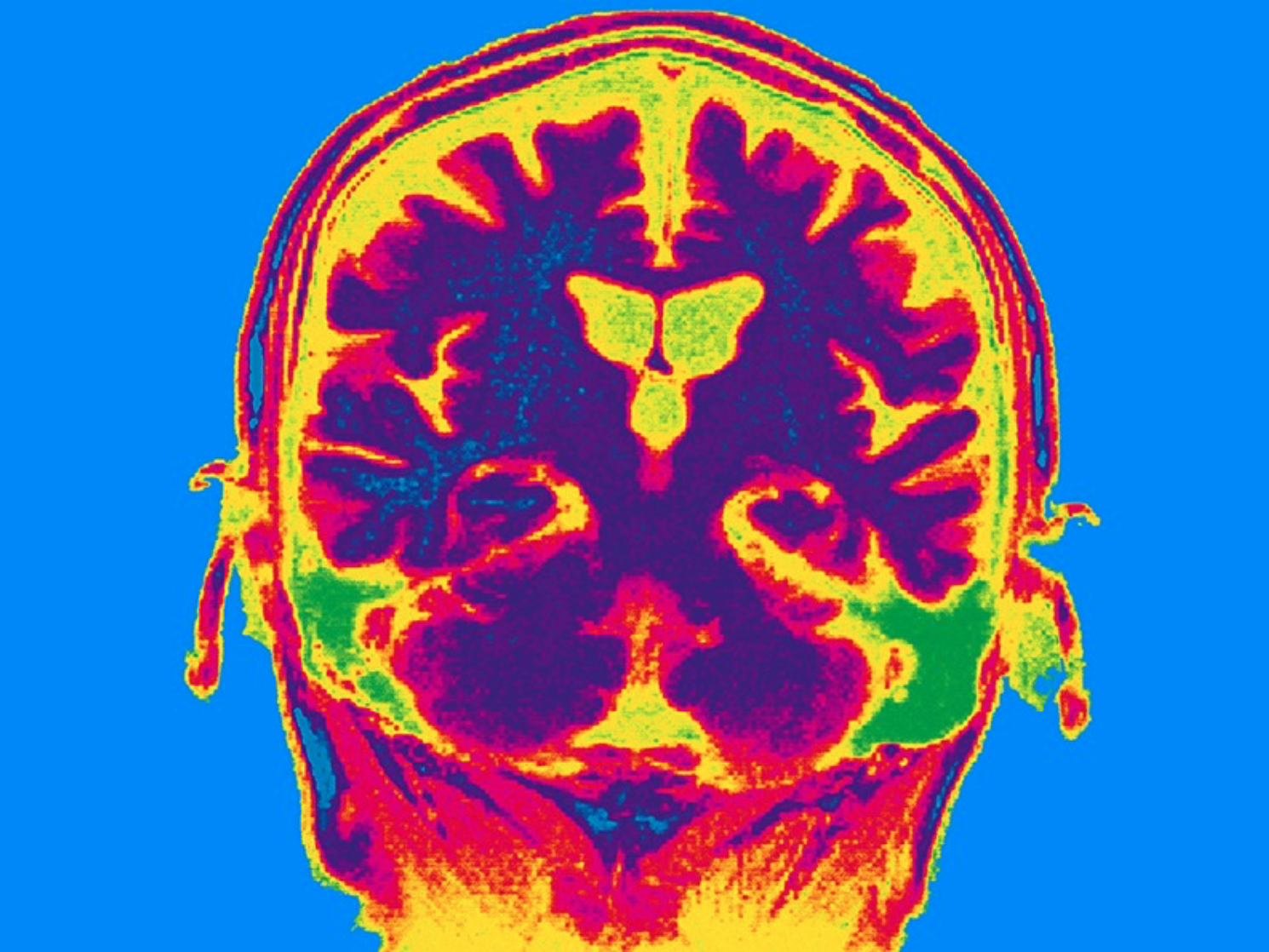

AI reconstructs viewed images

Yu Takagi, Shinji Nishimoto, and Osaka University colleagues have published a study demonstrating that AI can read brain scans and re-create largely realistic versions of images a person has seen. Future applications could include enabling communication for people with paralysis, recording dreams, and understanding animal perception, among others. Additional training was used on the existing text-to-image generative…

-

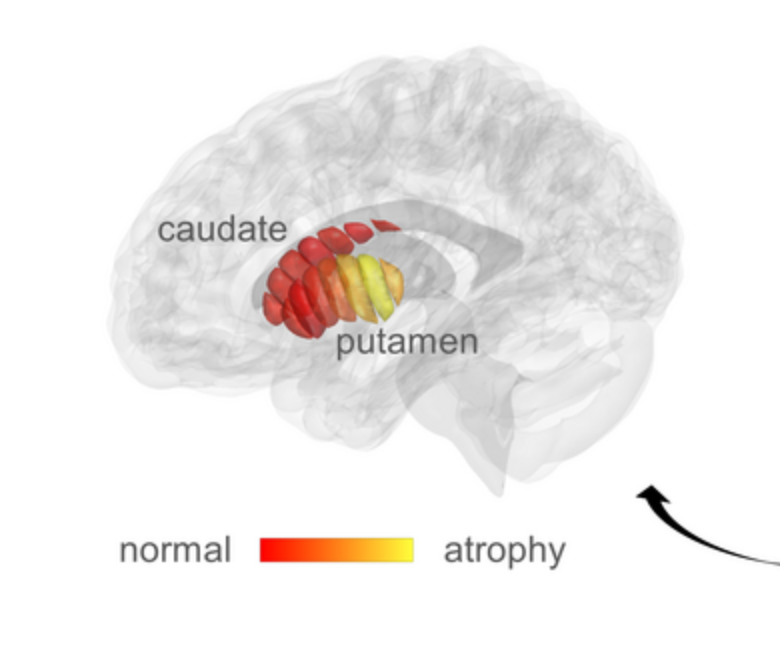

qMRI for early detection of Parkinson’s disease

Aviv Mezer and Hebrew University colleagues used quantitative MRI to identify cellular changes in Parkinson’s disease. Their method enabled them to look at microstructures in the striatum, which is known to deteriorate during disease progression. Using a novel algorithm developed by Elior Drori, biological changes in the striatum were revealed, and associated with early stage…

-

Non-invasive stimulation improves memory in study

In a recent study, Boston University professor Robert Reinhart used tACS to stimulate brain activity in 150 people aged 65-88, resulting in memory improvements for one month. Stimulating the dorsolateral prefrontal cortex improved long-term memory, while stimulating the inferior parietal lobe, with low-frequency electrical currents, boosted working memory. Participants were asked to recall 20 words…

-

Neural network assesses sleep patterns for passive Parkinson’s diagnosis

MIT’s Dina Katabi has developed a non-contact, neural network-based system to detect Parkinson’s disease while a person is sleeping. By assessing nocturnal breathing patterns, the series of algorithms detects, and tracks the progression of, the disease — every night, at home. A device in the bedroom emits radio signals, analyzes their reflections off the surrounding…

-

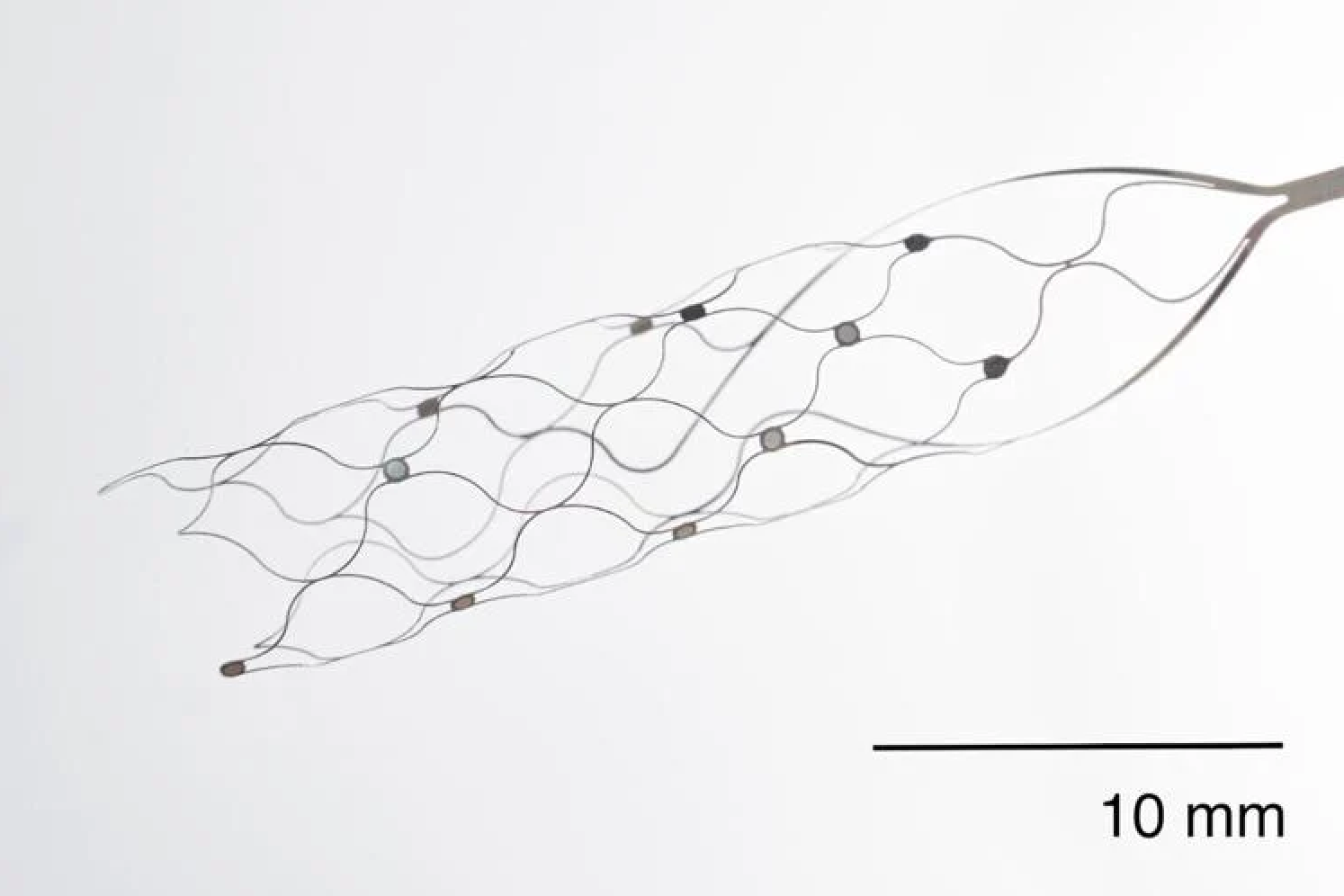

First US patient receives Synchron endovascular BCI implant

On July 6, 2022, Mount Sinai’s Shahram Majidi threaded Synchron’s 1.5-inch-long wire-and-electrode implant into a blood vessel in the brain of a patient with ALS. The goal is for the patient, who cannot speak or move, to be able to surf the web and communicate via email and text, with his thoughts. Four…