Edward Chang at UCSF, Mark Chevillet at Facebook, and colleagues have published a study in which implanted electrodes were used to “read” whole words from brain activity. Previous technology required users to spell out words with a virtual keyboard.

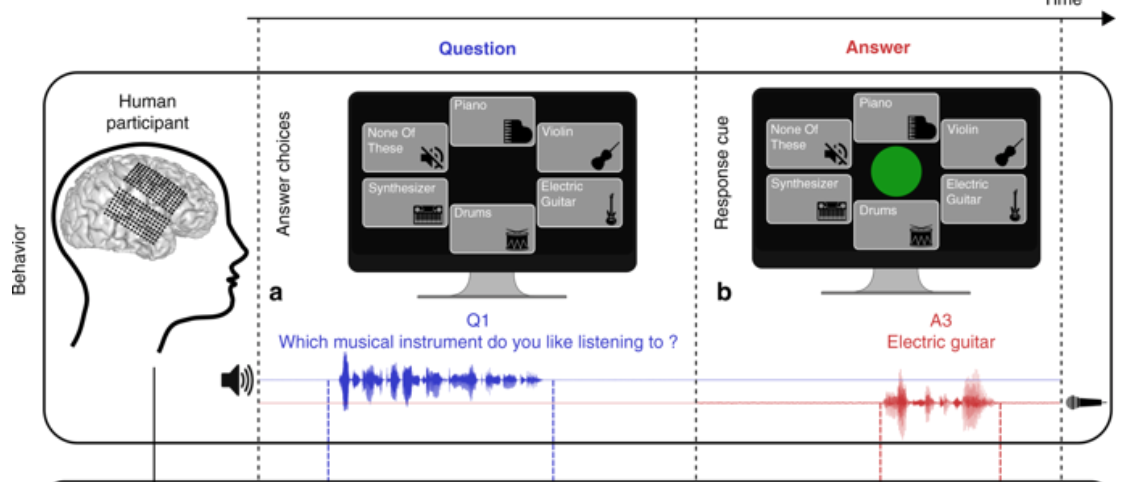

Subjects listened to multiple-choice questions and spoke their answers aloud. An electrode array recorded activity in brain regions associated with understanding and producing speech, and the system searched in real time for patterns matching specific words and phrases.

Participants answered each question by choosing one of several options while their brain activity was recorded. The system detected when they were listening to a question and when they were speaking an answer, then decoded the content of each. Its predictions were shaped by the preceding context. Results were 61 to 76 percent accurate, compared with the 7 to 20 percent accuracy expected by chance.

This builds on Facebook technology that Mark Chevillet described at the ApplySci conference at the MIT Media Lab in September 2017, and could eventually allow people with speech impairments to communicate freely.

Join ApplySci at the 12th Wearable Tech + Digital Health + Neurotech Boston conference on November 14, 2019 at Harvard Medical School featuring talks by Brad Ringeisen, DARPA – Joe Wang, UCSD – Carlos Pena, FDA – George Church, Harvard – Diane Chan, MIT – Giovanni Traverso, Harvard | Brigham & Women's – Anupam Goel, UnitedHealthcare – Nathan Intrator, Tel Aviv University | Neurosteer – Arto Nurmikko, Brown – Constance Lehman, Harvard | MGH – Mikael Eliasson, Roche – David Rhew, Samsung

Join ApplySci at the 13th Wearable Tech + Neurotech + Digital Health Silicon Valley conference on February 11-12, 2020 at Stanford University featuring talks by Zhenan Bao, Stanford – Rudy Tanzi, Harvard – David Rhew, Samsung – Carla Pugh, Stanford – Nathan Intrator, Tel Aviv University | Neurosteer