Vikash Kumar and colleagues at the University of Washington have developed a simulation model that lets robotic hands learn dexterous manipulation from their own experience, without human direction.

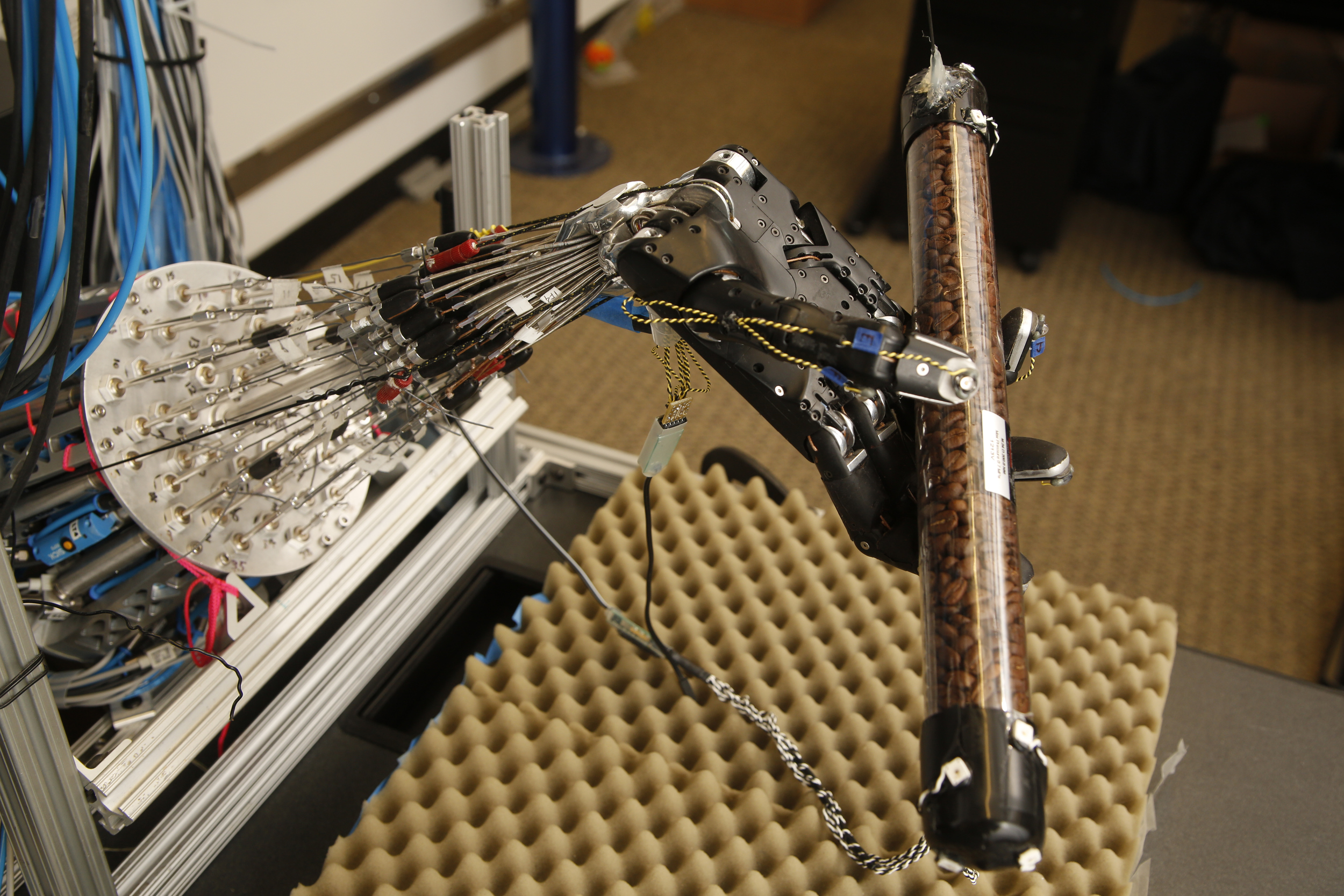

In a recent study, a robotic hand used the model while attempting several tasks, including rotating an elongated object. With each attempt, the hand became better at spinning the tube. Machine learning algorithms helped it model the basic physics involved and plan the actions that would best complete the task.

