Scientist-led conferences at Harvard, Stanford and MIT

-

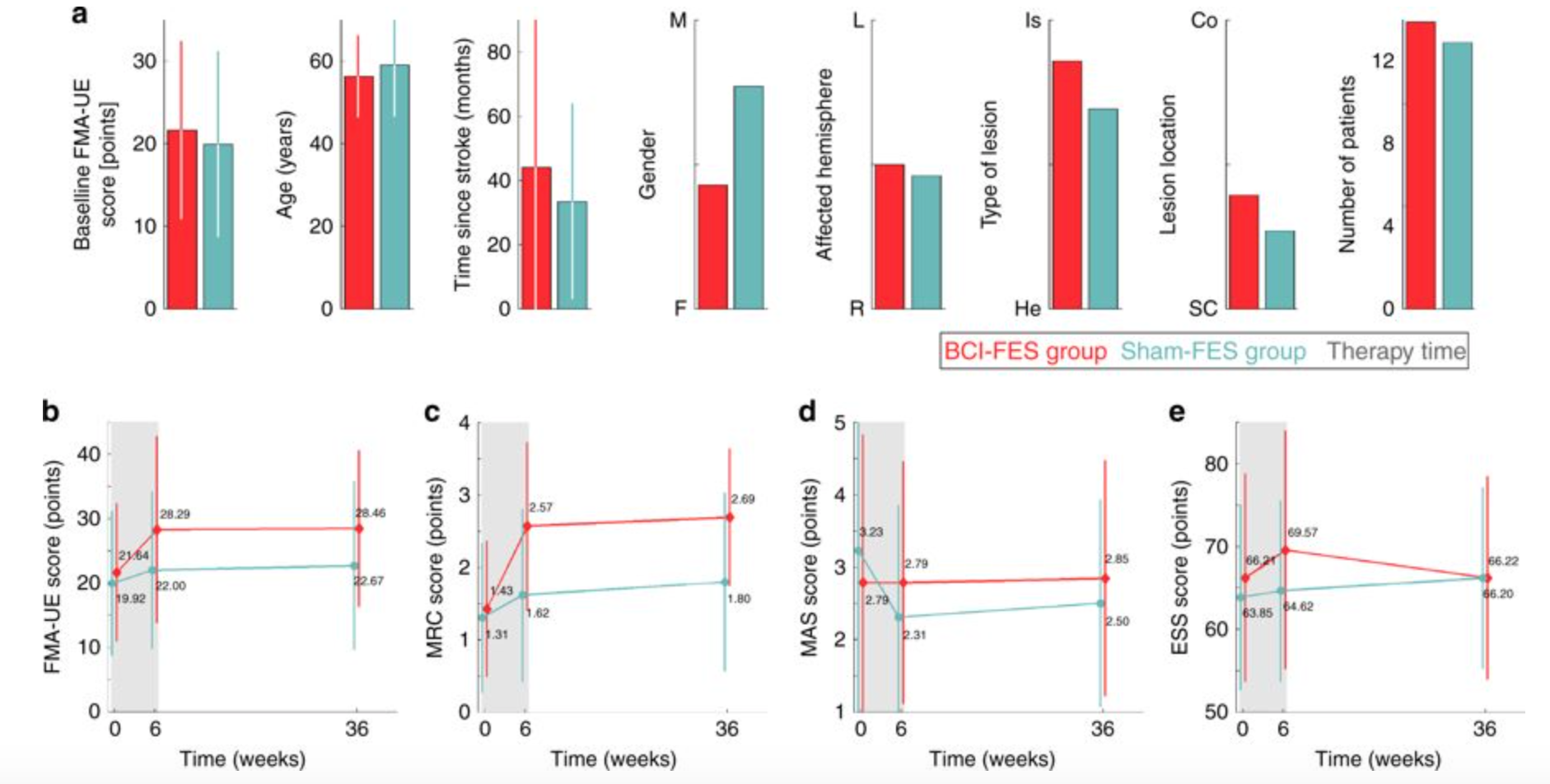

Combined BCI + FES system could improve stroke recovery

Jose Millan and EPFL colleagues have combined a brain-computer interface with functional electrical stimulation in a system that, in a study, was shown to enhance the restoration of limb use after a stroke. According to Millan: “The key is to stimulate the nerves of the paralyzed arm precisely when the stroke-affected part of…
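Combining a BCI with FES means the stimulator is gated by a decoded brain signal instead of running on a fixed schedule. The sketch below illustrates only that gating idea, under stated assumptions. It is not the EPFL team's method. The per-window decoder scores, the `threshold` of 0.7 and the `hold` count are hypothetical values chosen for illustration.

```python
from typing import Iterable, List


def fes_trigger_windows(scores: Iterable[float], threshold: float = 0.7, hold: int = 3) -> List[int]:
    """Return the indices of the windows at which FES would be delivered.

    Hypothetical: `scores` stands in for the output of an unspecified EEG
    decoder, one value per time window, estimating how strongly the patient
    is attempting the movement. Stimulation fires once per sustained episode,
    after the score has stayed at or above `threshold` for `hold`
    consecutive windows.
    """
    fired = []
    run = 0  # length of the current run of above-threshold windows
    for i, s in enumerate(scores):
        run = run + 1 if s >= threshold else 0
        if run == hold:  # fire exactly once when the run reaches `hold`
            fired.append(i)
    return fired
```

For example, `fes_trigger_windows([0.2, 0.8, 0.9, 0.75, 0.95, 0.1, 0.8, 0.8, 0.8])` returns `[3, 8]`, one stimulation for each sustained run of high scores. Requiring a short hold is one simple way to avoid firing on a single noisy window.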

-

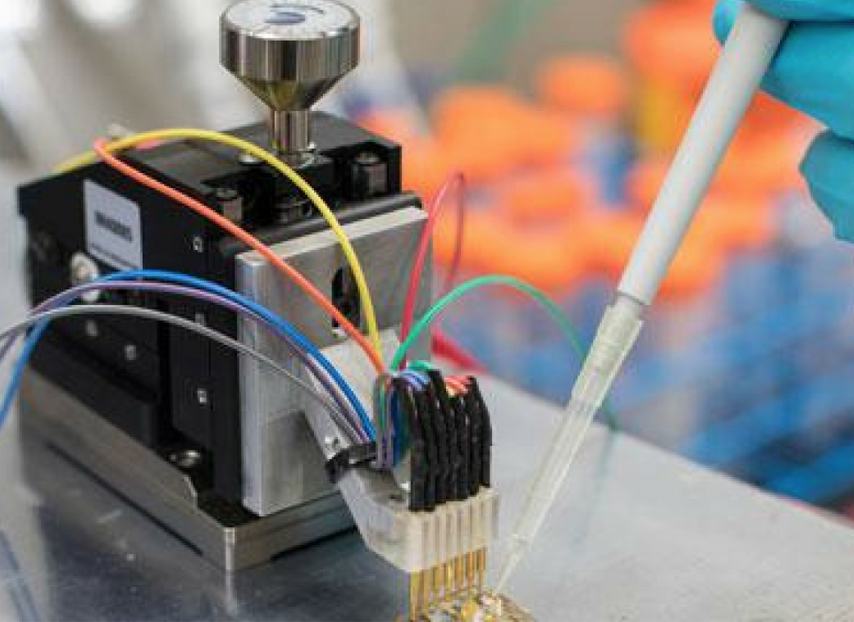

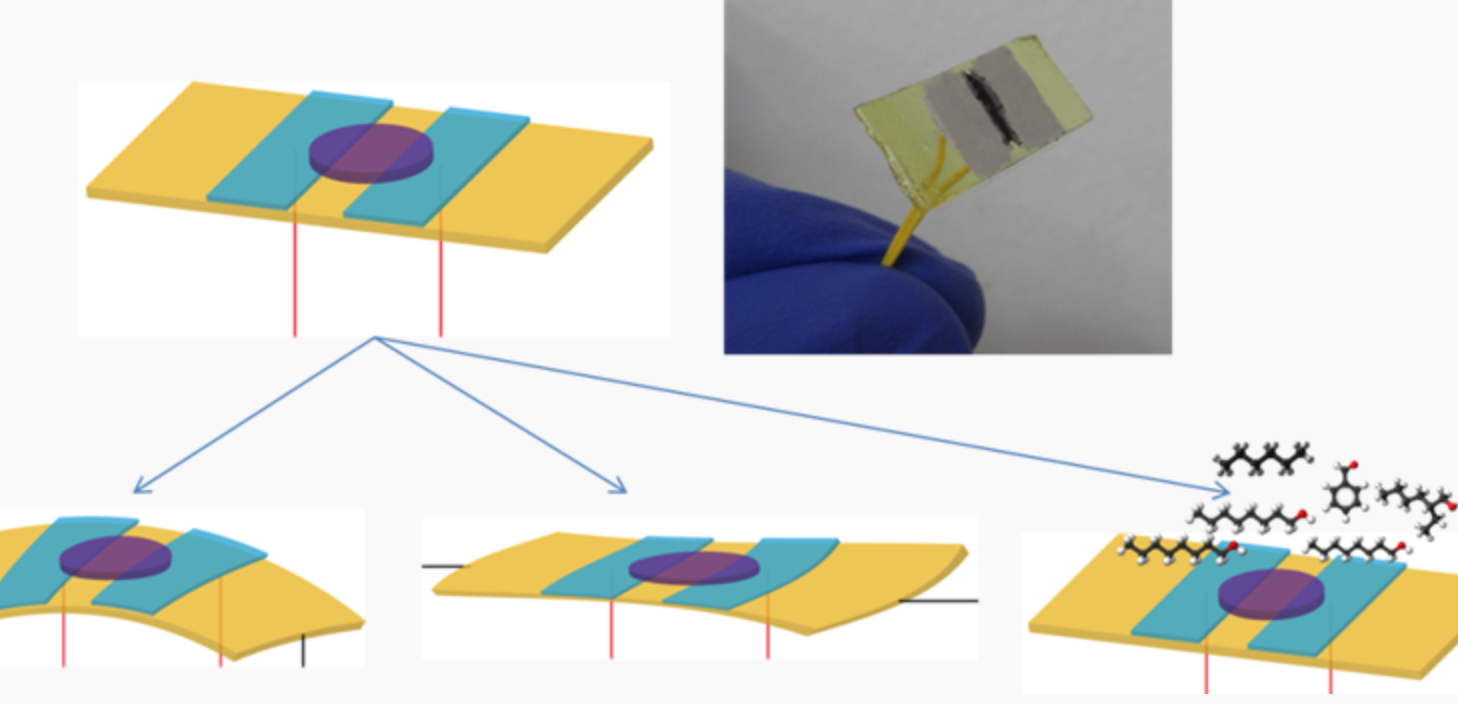

Cheap, molecular-wired, metabolite-measuring sensor

Cambridge’s Anna-Maria Pappa, KAUST’s Sahika Inal, and colleagues have developed a low-cost, molecular-wired sensor that can measure metabolites in sweat, tears, saliva or blood. It can be incorporated into flexible and stretchable substrates for cellular-level health monitoring. A synthesised polymer acts as a molecular wire, accepting electrons produced during electrochemical reactions. It merges…

-

Tony Chahine on human presence, reimagined | ApplySci @ Stanford

Myant’s Tony Chahine reimagined human presence at ApplySci’s recent Wearable Tech + Digital Health + Neurotech conference at Stanford. Join ApplySci at the 9th Wearable Tech + Digital Health + Neurotech Boston conference on September 24, 2018, at the MIT Media Lab. Speakers include: Rudy Tanzi – Mary Lou Jepsen – George Church – Roz Picard –…

-

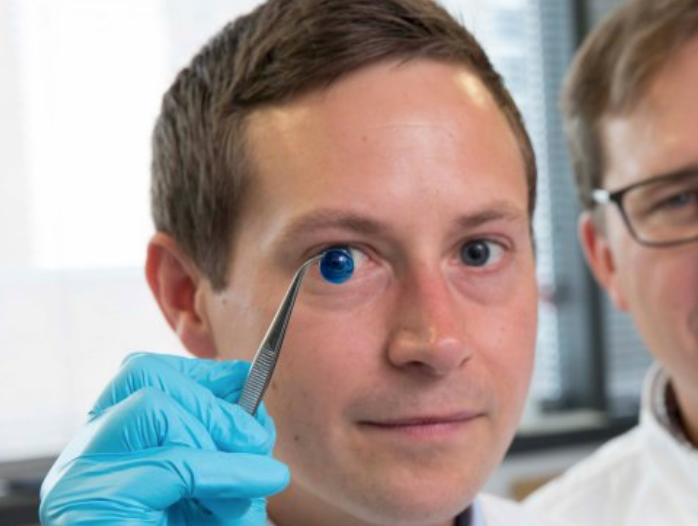

Proof of concept 3D printed cornea

Newcastle University’s Che Connon has conducted proof-of-concept research that could lead to a 3D printed cornea. Stem cells from a healthy donor cornea were mixed with alginate and collagen to create a printable bio-ink. A 3D printer extruded the bio-ink in concentric circles to form the shape of a human cornea in less than 10 minutes. The…

-

Thought, gesture-controlled robots

MIT CSAIL’s Daniela Rus has developed an EEG/EMG robot control system based on brain signals and finger gestures. Building on the team’s previous brain-controlled robot work, the new system detects, in real time, whether a person notices a robot’s error. Measuring muscle activity then lets the person use hand gestures to select the correct option. According to…
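The two-stage logic described above, where brain signals flag an error and muscle signals pick the fix, can be sketched as a small decision function. This is a hypothetical illustration, not CSAIL's code: the boolean `error_detected` stands in for an EEG error classifier, and the `"left"`/`"right"` gesture strings stand in for an EMG gesture classifier. Both, along with the idea of scrolling through numbered targets, are assumptions.

```python
from typing import Sequence


def resolve_target(robot_choice: int, n_targets: int, error_detected: bool,
                   gestures: Sequence[str] = ()) -> int:
    """Return the index of the target the robot should finally act on.

    Hypothetical inputs:
      error_detected: output of an assumed EEG classifier, True if the
          person's brain signals indicate they noticed the robot's error.
      gestures: outputs of an assumed EMG classifier, each "left" or "right",
          used to move the selection only after an error was flagged.
    """
    if not error_detected:
        # No error noticed: keep the robot's own choice and ignore gestures.
        return robot_choice
    target = robot_choice
    for g in gestures:
        if g == "left":
            target = max(0, target - 1)              # clamp at the first target
        elif g == "right":
            target = min(n_targets - 1, target + 1)  # clamp at the last target
        else:
            raise ValueError(f"unknown gesture: {g!r}")
    return target
```

With three candidate targets and the robot heading for target 1, `resolve_target(1, 3, True, ["left"])` returns `0`, while the same call with `error_detected=False` keeps target 1 regardless of any gestures.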

-

Phillip Alvelda: More intelligent; less artificial | ApplySci @ Stanford

Phillip Alvelda discussed AI and the brain at ApplySci’s recent Wearable Tech + Digital Health + Neurotech Silicon Valley conference at Stanford. Dr. Alvelda will join us again at Wearable Tech + Digital Health + Neurotech Boston on September 24, 2018, at the MIT Media Lab. Other speakers include: Rudy Tanzi – Mary Lou Jepsen – George Church…

-

Algorithm predicts low blood pressure during surgery

UCLA’s Maxime Cannesson has developed an algorithm that, in a recent study, predicted an intraoperative hypotensive event 15 minutes before it occurred in 84 percent of cases, 10 minutes before in 84 percent of cases, and five minutes before in 87 percent of cases. The goal is early identification and treatment, to prevent complications, such…

-

Nano-robots remove bacteria, toxins from blood

UCSD’s Joe Wang and Liangfang Zhang have developed tiny ultrasound-powered robots that can swim through blood, removing harmful bacteria and toxins. Gold nanowires were coated with platelet and red blood cell membranes, allowing the nanorobots to perform the tasks of two different cells at once: platelets, which bind pathogens, and red blood cells, which absorb and neutralize toxins. The…

-

Bob Knight on decoding language from direct brain recordings | ApplySci @ Stanford

Berkeley’s Bob Knight discussed (and demonstrated) decoding language from direct brain recordings at ApplySci’s recent Wearable Tech + Digital Health + Neurotech Silicon Valley conference at Stanford. Join ApplySci at the 9th Wearable Tech + Digital Health + Neurotech Boston conference on September 24, 2018, at the MIT Media Lab. Speakers include: Rudy Tanzi – Mary Lou Jepsen – George…

-

“Artificial nerve” system for sensory prosthetics, robots

Stanford’s Zhenan Bao has developed an artificial sensory nerve system that can activate the twitch reflex in a cockroach and identify letters in the Braille alphabet. Bao describes it as “a step toward making skin-like sensory neural networks for all sorts of applications,” which would include artificial skin that creates a sense of touch in prosthetics. The artificial…

-

Body-heat-powered, self-repairing health sensor system

Hossam Haick at Technion-Israel Institute of Technology has developed a body-heat-powered, self-repairing system of sensors for disease detection and monitoring. Unlike other wearables, it draws energy from the wearer and repairs its own tears and scratches, so the device never needs to be turned off for repair or charging, allowing truly continuous tracking.…

-

Nathan Intrator on epilepsy, AI, and digital signal processing | ApplySci @ Stanford

Nathan Intrator discussed epilepsy, AI and digital signal processing at ApplySci’s Wearable Tech + Digital Health + Neurotech Silicon Valley conference on February 26–27, 2018, at Stanford University. Join ApplySci at the 9th Wearable Tech + Digital Health + Neurotech Boston conference on September 24, 2018, at the MIT Media Lab. Speakers include: Mary Lou…