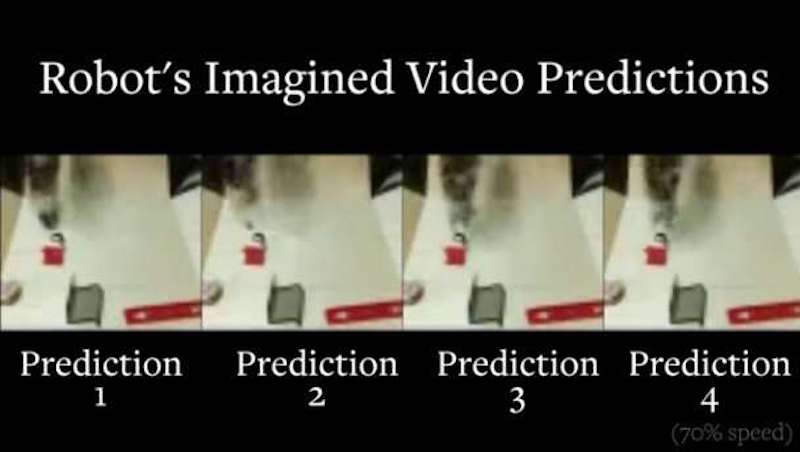

Sergey Levine and UC Berkeley colleagues have developed robotic learning technology that enables robots to visualize how different behaviors will affect the world around them, without human instruction. This ability to plan across varied scenarios could improve self-driving cars and robotic home assistants.

The technology, called visual foresight, allows robots to predict what their cameras will see if they perform a particular sequence of movements. Using these predictions, a robot can learn to perform tasks without human help and without prior knowledge of physics, its environment, or the objects it handles.
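The planning idea can be illustrated with a minimal sketch: sample many candidate movement sequences, ask a predictor what each would lead to, and keep the sequence whose predicted outcome is closest to the goal. Everything in it is an assumption made for illustration. `predict_pixel_position` is a hypothetical toy stand-in for a learned video-prediction model, and the random-sampling planner is a simple substitute, not necessarily the method the Berkeley team uses.

```python
import numpy as np


def predict_pixel_position(start, actions):
    """Hypothetical toy predictor standing in for a learned video model.

    Assumes each action (dx, dy) shifts the tracked pixel by exactly
    that amount; a real model would predict whole camera images.
    """
    return start + actions.sum(axis=0)


def plan_actions(start, goal, horizon=5, num_samples=500, seed=0):
    """Pick the sampled action sequence whose predicted result is nearest the goal."""
    rng = np.random.default_rng(seed)
    # Candidate sequences: num_samples sequences of `horizon` 2-D moves.
    candidates = rng.uniform(-1.0, 1.0, size=(num_samples, horizon, 2))
    # Cost of a sequence: distance between predicted pixel position and goal.
    costs = np.array([
        np.linalg.norm(predict_pixel_position(start, seq) - goal)
        for seq in candidates
    ])
    best = int(np.argmin(costs))
    return candidates[best], float(costs[best])


start = np.array([10.0, 10.0])  # where the tracked pixel is now
goal = np.array([12.0, 9.0])    # where we want it to end up
best_actions, best_cost = plan_actions(start, goal)
print("predicted distance to goal:", best_cost)
```

In this sketch the robot never receives instructions about how to reach the goal; it only compares predicted outcomes, which is the property the article highlights.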

The deep learning technology is based on dynamic neural advection. Models of this kind predict how pixels in an image will move from one frame to the next, based on the robot’s actions. This approach has enabled robotic control based on video prediction to perform increasingly complex tasks.
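To make "predicting how pixels move" concrete, here is a minimal sketch of the advection step alone. It assumes each pixel of the next frame is a weighted average of nearby pixels in the current frame, with one small weight kernel per output pixel. In a learned model a neural network would produce these kernels from the image and the robot's action; here they are written by hand, and the function name `advect` is hypothetical.

```python
import numpy as np


def advect(frame, kernels):
    """Move pixels according to per-pixel weight kernels.

    frame:   (H, W) grayscale image.
    kernels: (H, W, k, k) non-negative weights, summing to 1 for each
             output pixel, over a k x k neighborhood of the input frame.
    """
    h, w = frame.shape
    k = kernels.shape[-1]
    r = k // 2
    # Replicate border pixels so every neighborhood is fully defined.
    padded = np.pad(frame, r, mode="edge")
    out = np.zeros_like(frame, dtype=float)
    for dy in range(k):
        for dx in range(k):
            # Kernel entry (dy, dx) reads the source pixel at offset (dy - r, dx - r).
            out += kernels[:, :, dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


# A single bright pixel at row 1, column 1.
frame = np.zeros((4, 4))
frame[1, 1] = 1.0

# Hand-written kernels: every output pixel copies its left neighbor,
# which shifts the whole image one pixel to the right.
kernels = np.zeros((4, 4, 3, 3))
kernels[:, :, 1, 0] = 1.0

next_frame = advect(frame, kernels)
print(next_frame)
```

Because the output is built only from pixels already present in the input, this kind of model describes motion rather than inventing new image content.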

