In a paper published in Nature, the system identified objects, including a soda can, scissors, a tennis ball, a spoon, a pen, and a mug, with 76 percent accuracy.

The tactile sensors could be combined with traditional computer vision and image-based datasets to give robots a more human-like understanding of how to interact with objects. The dataset also captured how different regions of the hand work together during these interactions, which could help in customizing prosthetics.

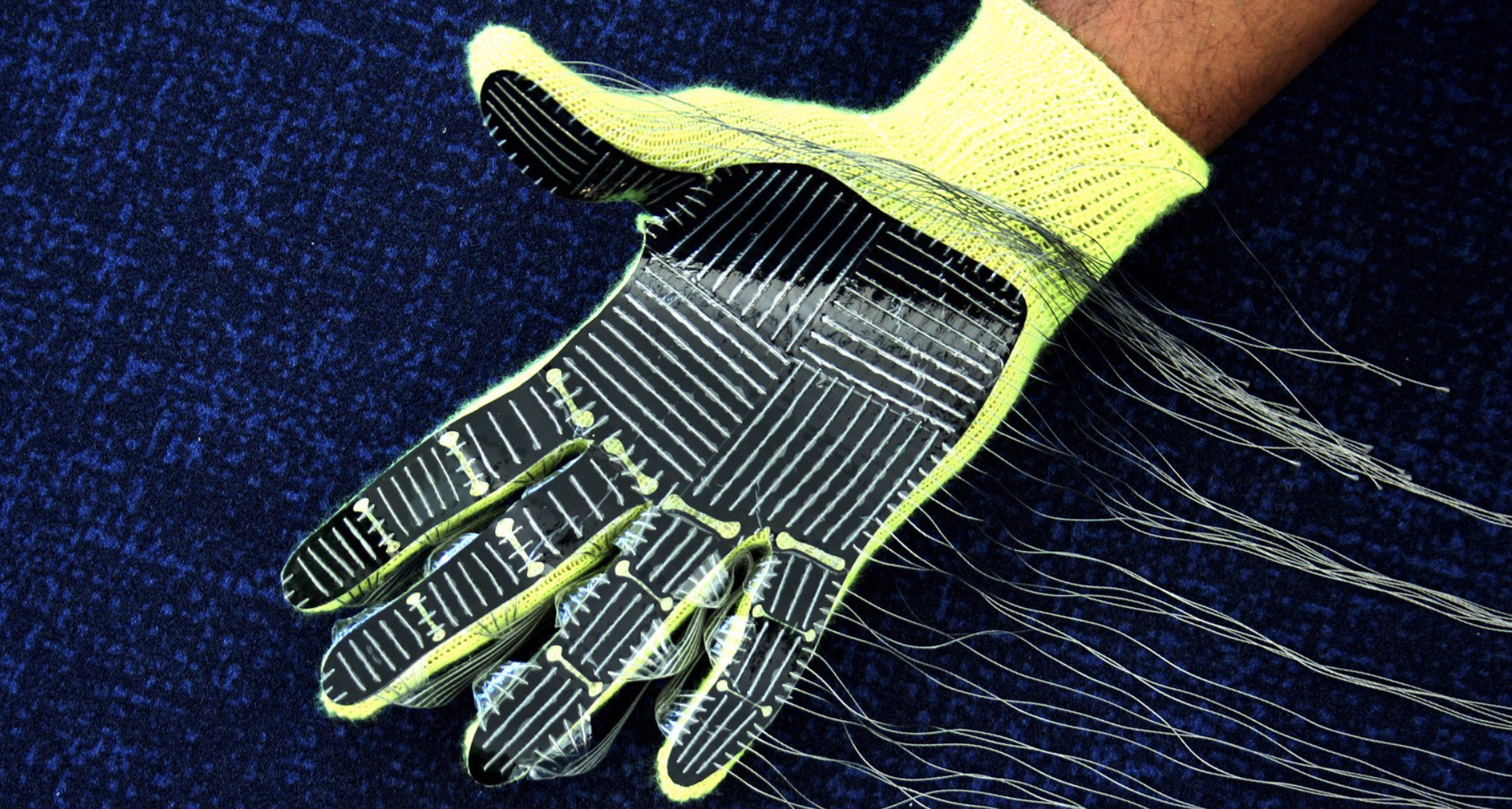

Comparable sensor-based gloves cost thousands of dollars and typically contain only about 50 sensors. The STAG glove costs approximately $10 to produce.


Join ApplySci at the 12th Wearable Tech + Digital Health + Neurotech Boston conference on November 14, 2019 at Harvard Medical School and the 13th Wearable Tech + Neurotech + Digital Health Silicon Valley conference on February 11-12, 2020 at Stanford University.