Category: AI

-

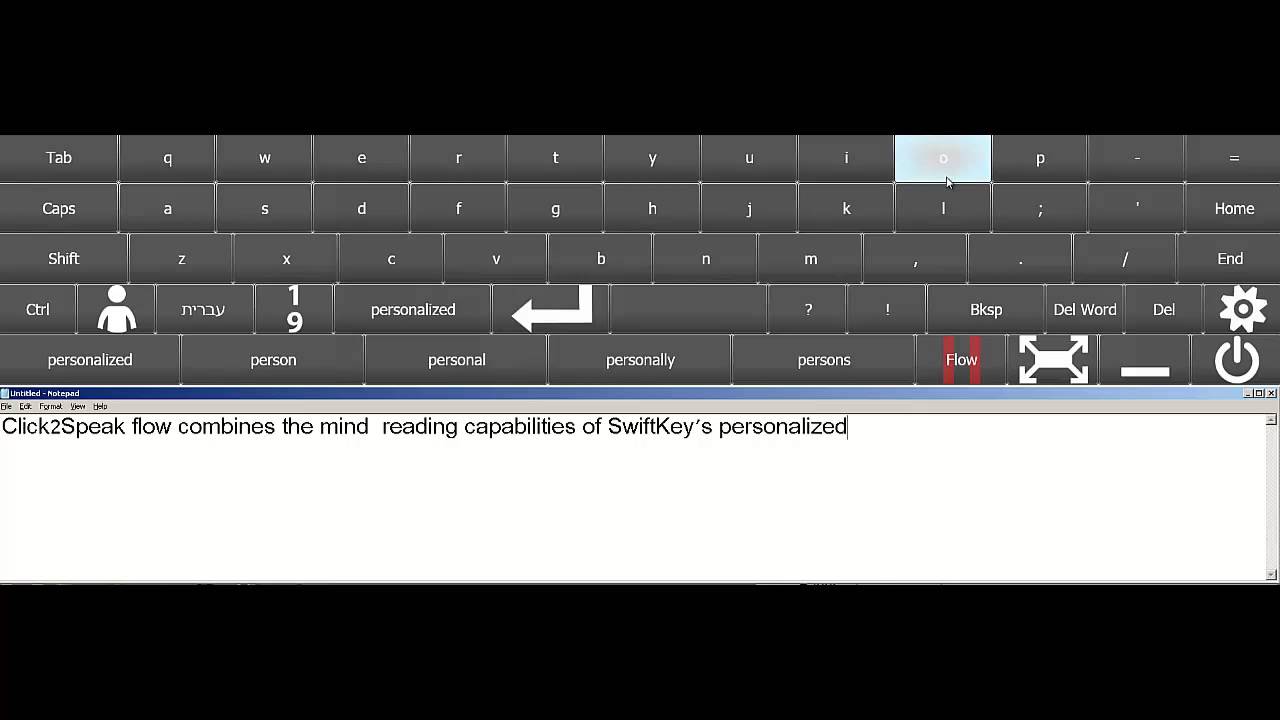

Eye-tracking virtual keyboard

Click2Speak is SwiftKey-based software that lets users type on a virtual keyboard using eye movements. AI technology predicts words based on the user's texts, Facebook, and Twitter. A camera tracks eye movement, and the user can click with a foot mouse or by looking at a button for a few seconds. Founder Gal Sont, who has ALS,…

-

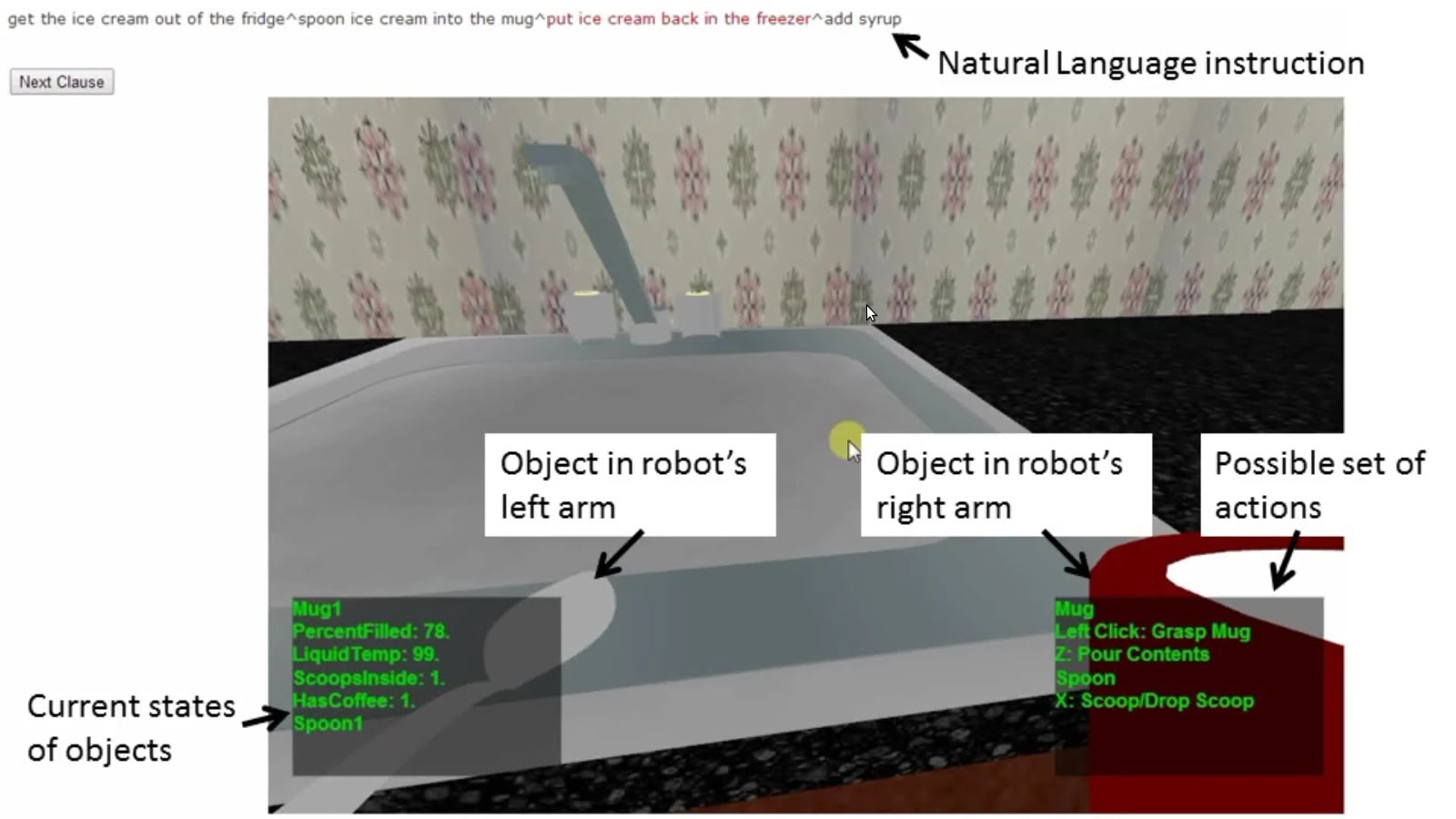

Context-sensitive robot understands casual language

Cornell professor Ashutosh Saxena is developing a context-sensitive robot that can understand natural language commands in colloquial English from different speakers. The goal is to help robots account for missing information when receiving instructions and adapt to their environment. Tell Me Dave is equipped with a 3D camera for viewing its surroundings. Machine learning has enabled…

-

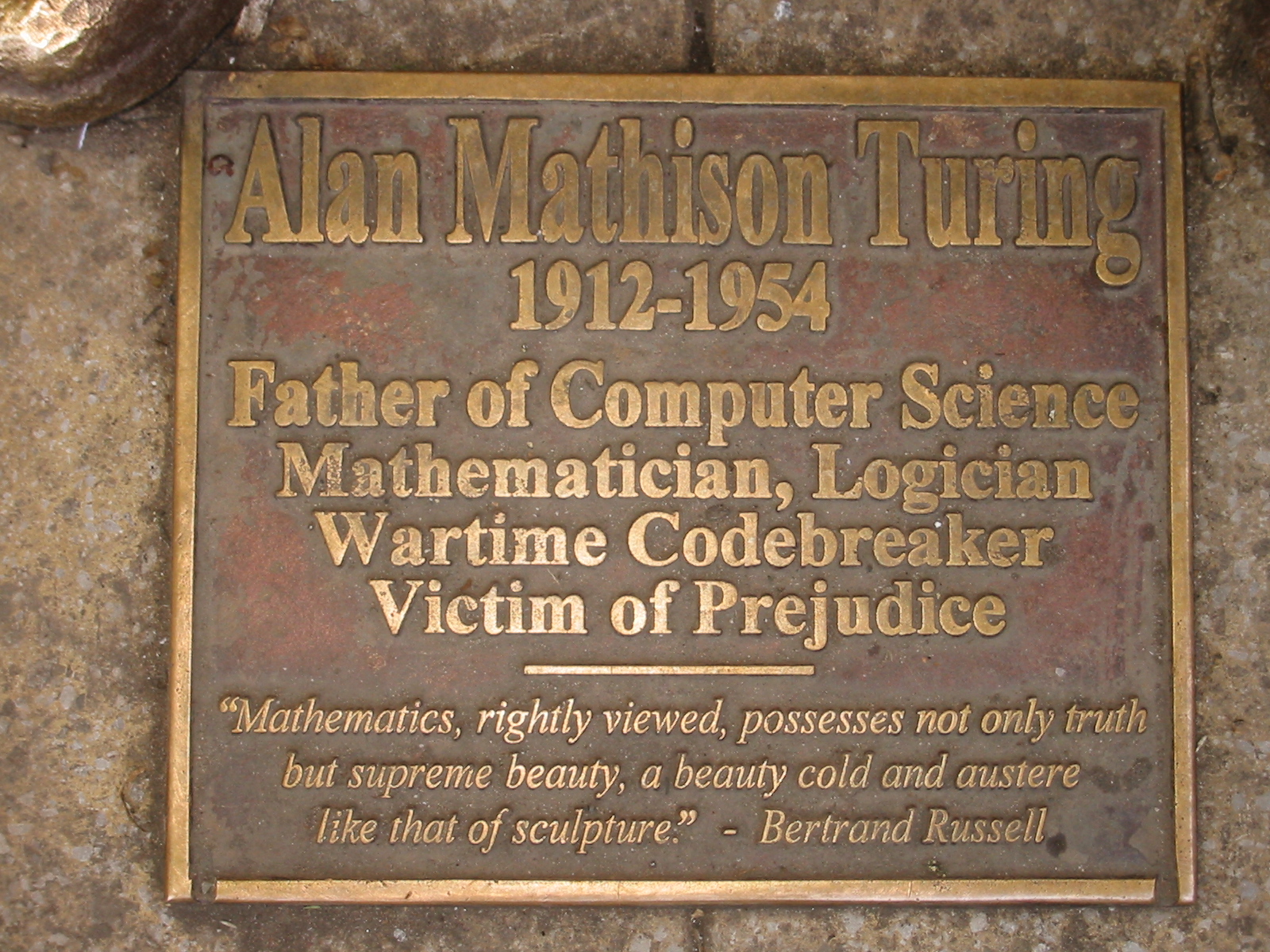

Chatbot passes Turing Test

A chatterbot named Eugene Goostman has become the first to pass the Turing Test. “Eugene” and four other contenders participated in the Turing Test 2014 Competition at the Royal Society in London. Each chatterbot was required to engage in a series of five-minute text-based conversations with a panel of judges. A computer passes the test if…

-

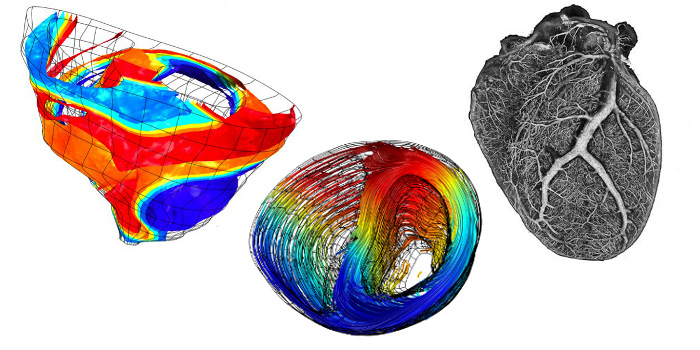

Human body simulation for health research

The Virtual Physiological Human project is building a computer-simulated replica of the human body for testing drugs and treatments. It will allow physicians to model the mechanical, physical, and biochemical functions of the body as a single complex system rather than as a collection of organs. The goal is to offer personalized treatment…

-

Brain-based machine learning software

Vicarious FPC claims to be “building software that thinks and learns like a human.” Its goal is to replicate the neocortex, the part of the brain that sees, controls the body, understands language, and does math. Its first project is a visual perception system that interprets the contents of photographs and videos. The next milestone is to…

-

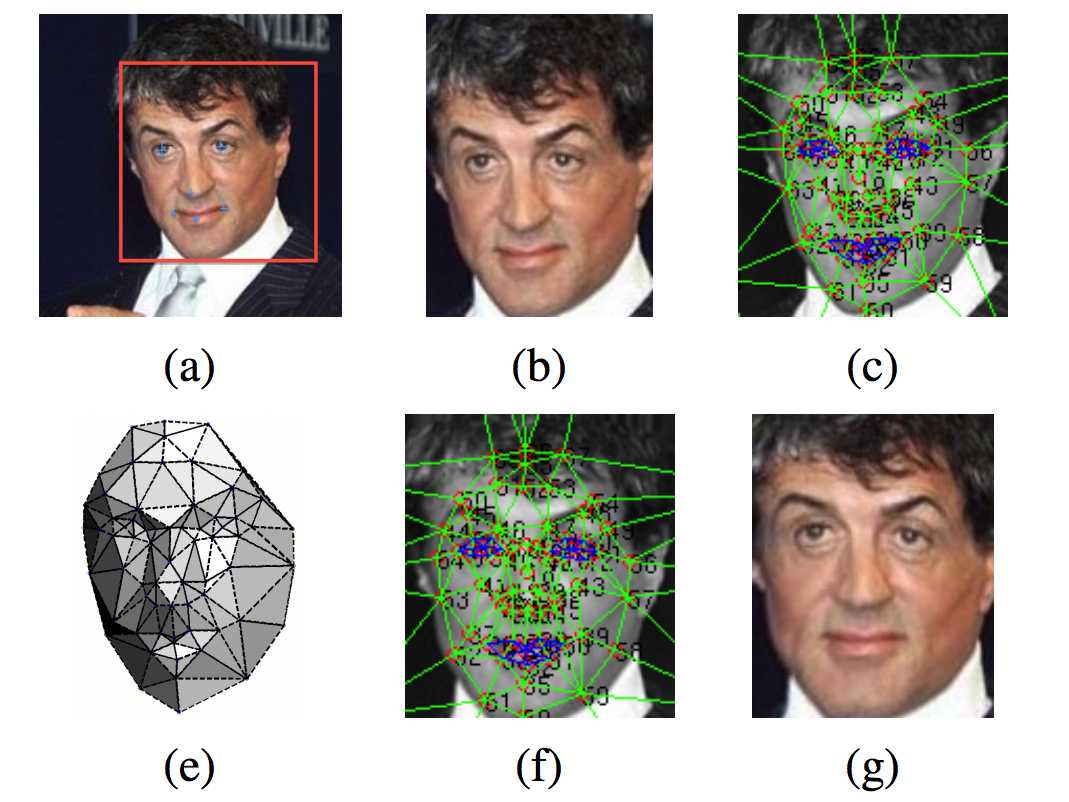

Facebook’s DeepFace achieves near human-level face verification

Following its acquisition of Tel Aviv University Professor Lior Wolf’s face.com, Facebook is developing DeepFace, which has near-human-level face recognition capabilities. The technology processes images of faces in two steps. First, it corrects the angle of a face so that the person in the picture faces forward, using a 3-D model of an “average” forward-looking…

-

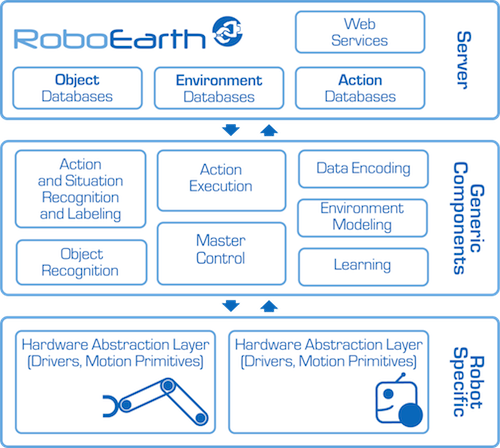

Collaborative cloud system for human-serving robots

http://www.roboearth.org/what-is-roboearth RoboEarth’s goal is to speed the development of human-serving robots. Scientists from five European universities gathered this week for its launch and demonstrated potential applications. These included a robot scanning a room’s physical layout, including the location of a patient’s bed, and then placing a carton of milk on a nearby table. The system is…

-

K supercomputer runs largest neural simulation to date

http://www.telegraph.co.uk/technology/10567942/Supercomputer-models-one-second-of-human-brain-activity.html RIKEN, Okinawa Institute of Science and Technology, and Forschungszentrum Jülich researchers have used Japan’s K computer to run the largest brain simulation to date. With 705,024 processor cores running at speeds of over 10 petaflops, it is ranked the world’s fourth-most powerful computer. Using Neural Simulation Technology software and 82,944 of its processors, the computer replicated one second of…

-

Johns Hopkins develops thought-controlled prosthetic arm and “targeted innervation” technique

http://hub.jhu.edu/2013/01/02/prosthetic-arm-60-minutes The number of researchers developing advanced prosthetics, particularly thought-controlled limbs, is increasing rapidly. This work could significantly improve the lives of many people. At Johns Hopkins University’s Applied Physics Lab, a motorized arm with a five-fingered hand that operates much like a human hand is nearing completion. Professor Michael McLoughlin and trauma surgeon Albert Chi have developed a…

-

Crowdfunded 3-D augmented reality glasses aim to compete with Google Glass

https://www.spaceglasses.com/ In the months since its successful Kickstarter campaign, Meta has been developing augmented reality glasses “that combine the power of a laptop and smartphone in a pair of thick Ray-Bans and a small pocket computer.” The Meta Pro will have an i5 CPU, 4 GB of RAM, 128 GB of storage, Wi-Fi 802.11n and Bluetooth 4.0 connectivity.…

-

Year-end review of “neuromorphic” chip prototypes

http://www.technologyreview.com/featuredstory/522476/thinking-in-silicon/ MIT Technology Review today features an overview of processors that they claim are “about to narrow the gulf between artificial and natural computation—between circuits that crunch through logical operations at blistering speed and a mechanism honed by evolution to process and act on sensory input from the real world.” Caltech’s Carver Mead pioneered “brain…

-

Nerve interface simulates touch in prosthetic hand

http://www.technologyreview.com/news/522086/an-artificial-hand-with-real-feelings/ Cleveland Veterans Affairs Medical Center and Case Western Reserve University researchers have developed an interface that can convey a sense of touch from 20 spots on a prosthetic hand. It directly stimulates bundles of peripheral nerves in the patient’s arm. Two people have been fitted with the interface to date.…