USC researchers are using machine learning to diagnose depression based on speech patterns. During interviews, their SimSensei system detected reductions in vowel expression that human interviewers might miss.

Speech variations associated with depression have been documented in past studies. The speech of depressed patients can be flat and monotonous, with reduced variability. Reduced speech output, a slower articulation rate, longer pauses, and more variable switching pauses (the silences between conversational turns) have also been observed.

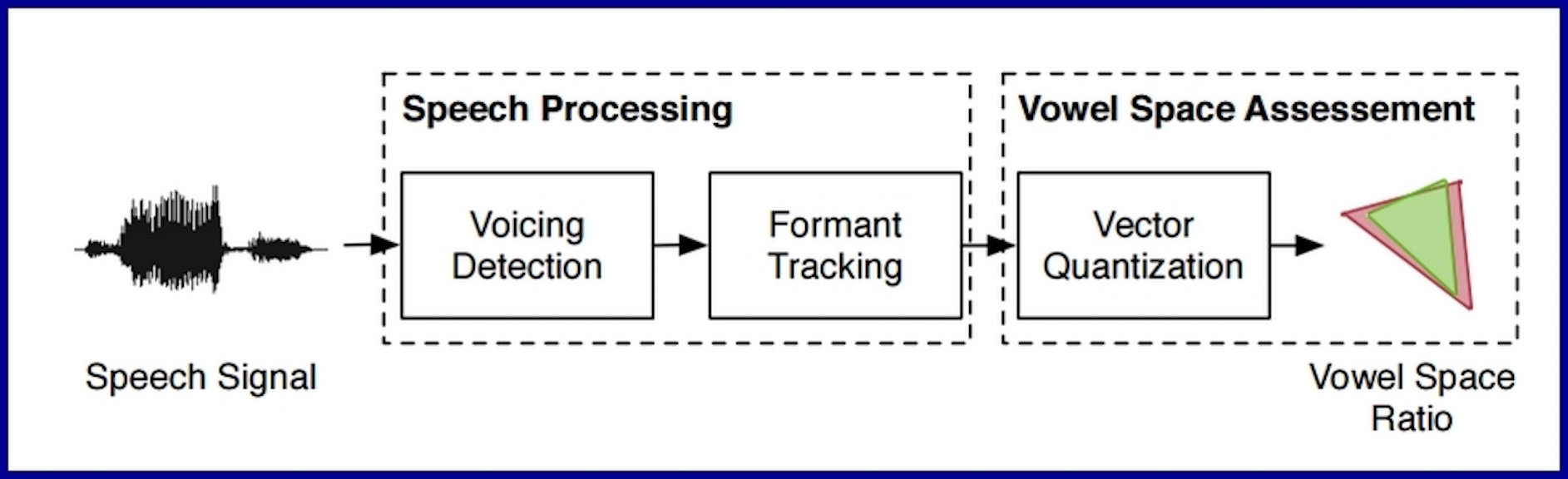

The method was used to measure the “vowel space” of 253 adults. Vowel space was significantly reduced in subjects who reported symptoms of depression and PTSD. The researchers believe that vowel space reduction could also be linked to schizophrenia and Parkinson’s disease, and they are investigating those links.
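For readers unfamiliar with the term, vowel space is commonly described as the area spanned by a speaker's vowels when plotted by their first two formant frequencies (F1 and F2). The sketch below is only an illustration of that general idea, not the USC team's method, which the article does not describe. It computes the area of the triangle formed by three corner vowels and compares it with a reference triangle. The function name and every formant value are hypothetical and chosen purely for demonstration.

```python
def vowel_space_area(corners):
    """Return the area of the polygon whose vertices are (F1, F2) pairs in Hz.

    Uses the shoelace formula; vertices must be listed in order around
    the polygon (clockwise or counterclockwise).
    """
    n = len(corners)
    twice_area = 0.0
    for i in range(n):
        x1, y1 = corners[i]
        x2, y2 = corners[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


# Hypothetical (F1, F2) values in Hz for the corner vowels /i/, /a/, /u/.
# These numbers are made up for illustration and do not come from the study.
reference = [(300, 2300), (750, 1200), (350, 800)]
speaker = [(350, 2000), (650, 1250), (400, 1000)]

# A ratio below 1 means the speaker's vowels occupy a smaller area
# than the reference triangle.
ratio = vowel_space_area(speaker) / vowel_space_area(reference)
print(f"vowel space ratio: {ratio:.2f}")
```

In this toy example the speaker's corner vowels sit closer together than the reference vowels, so the ratio comes out below 1, which is the kind of reduction the article refers to.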
