Category: Machine Learning

-

Mobile hyperspectral “tri-corder”

Tel Aviv University’s David Menlovic and Ariel Raz are turning smartphones into hyperspectral sensors capable of identifying the chemical components of objects from a distance. The technology, being commercialized by Unispectral and Ramot, improves camera resolution and noise filtering, and is compatible with smartphone lenses. The new lens and software let in much more light than current smartphone camera…

-

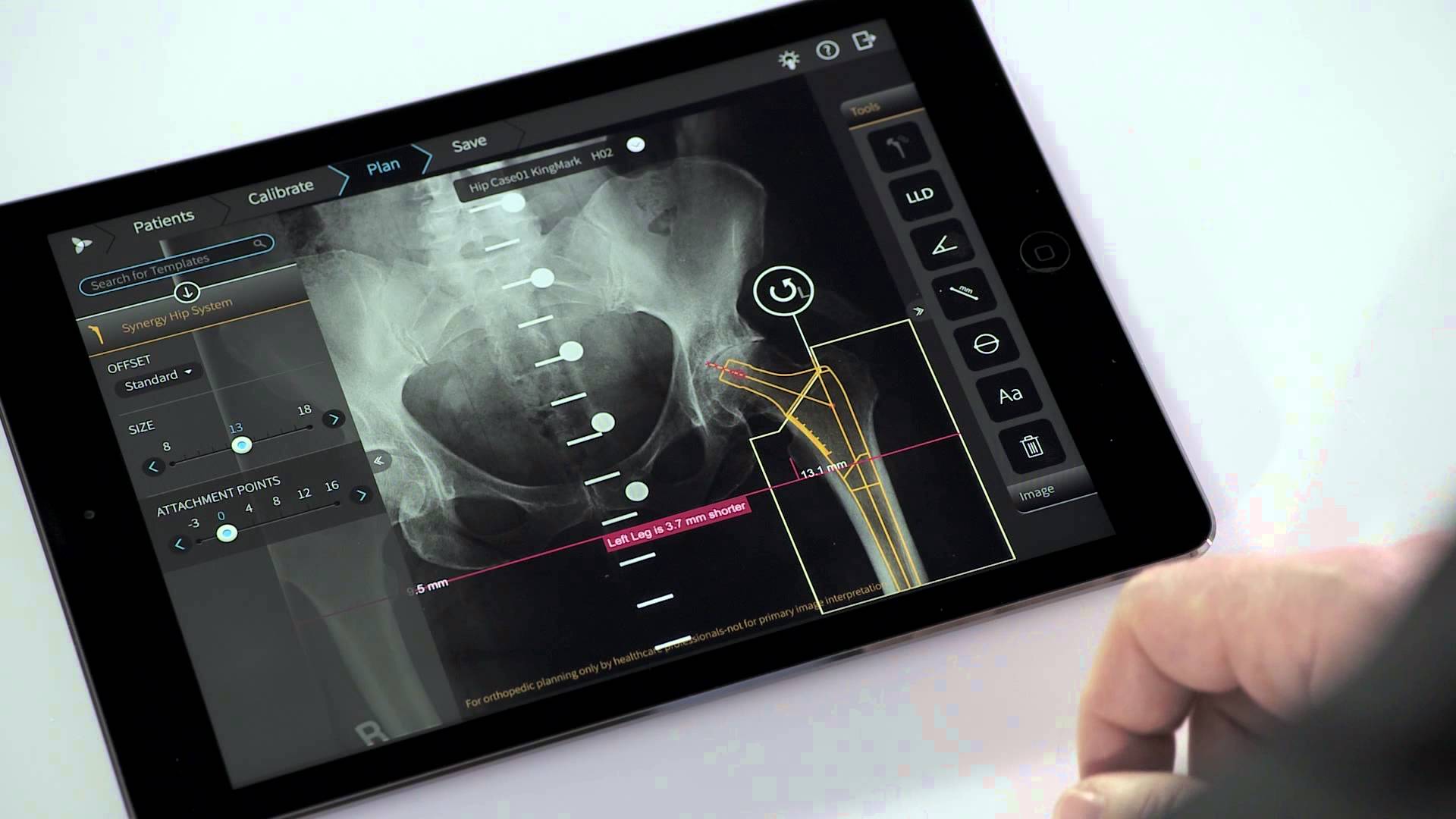

App helps orthopedic surgeons plan procedures

Tel Aviv-based Voyant Health’s TraumaCad Mobile app helps orthopedic surgeons plan operations and simulate outcomes. The system offers modules for hip, knee, deformity, pediatric, upper limb, spine, foot and ankle, and trauma surgery. The mobile iPad version of this decade-old system was recently approved by the FDA. Surgeons can securely import medical images from the…

-

Physiological and mathematical models simulate body systems

Another CES standout was LifeQ, a company that combines physiological and bio-mathematical modeling to provide data for health decisions. LifeQ Lens is a multi-wavelength optical sensor that can be integrated into wearable devices. Using a proprietary algorithm, it monitors key metrics with what the company claims is laboratory-level accuracy. Raw data is translated through…

-

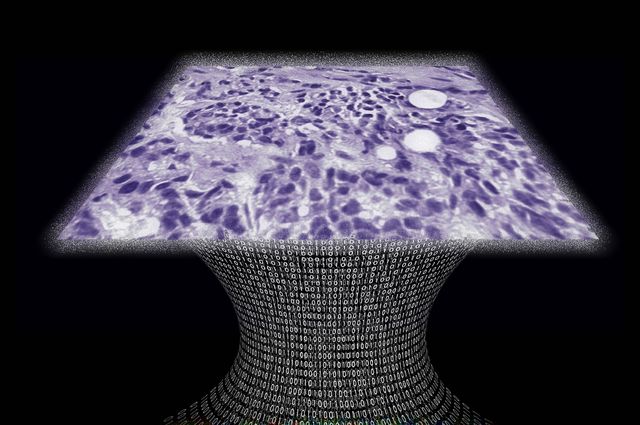

Portable, lens-free, on-chip microscope for 3-D imaging

UCLA professor Aydogan Ozcan has developed a lens-free microscope for high-throughput 3-D tissue imaging to detect cancer or other cell-level abnormalities. Lasers or light-emitting diodes illuminate a tissue or blood sample on a slide inserted into the device. A sensor array on a microchip captures and records the pattern of shadows created by the sample. The…

-

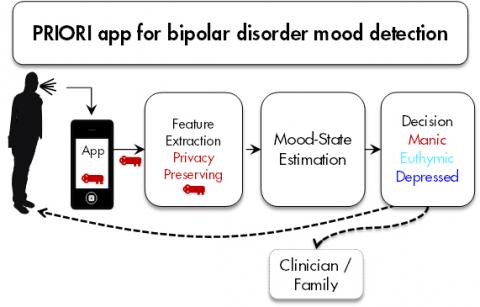

Speech app detects bipolar mood swings early

PRIORI is an Android app that monitors subtle changes in a user’s voice to detect bipolar mood swings. It was developed by Zahi Karam, Emily Mower Provost and Melvin McInnis at the University of Michigan. The goal is to anticipate mood swings and intervene before they happen. PRIORI was inspired by the families of bipolar patients, who often were first…

-

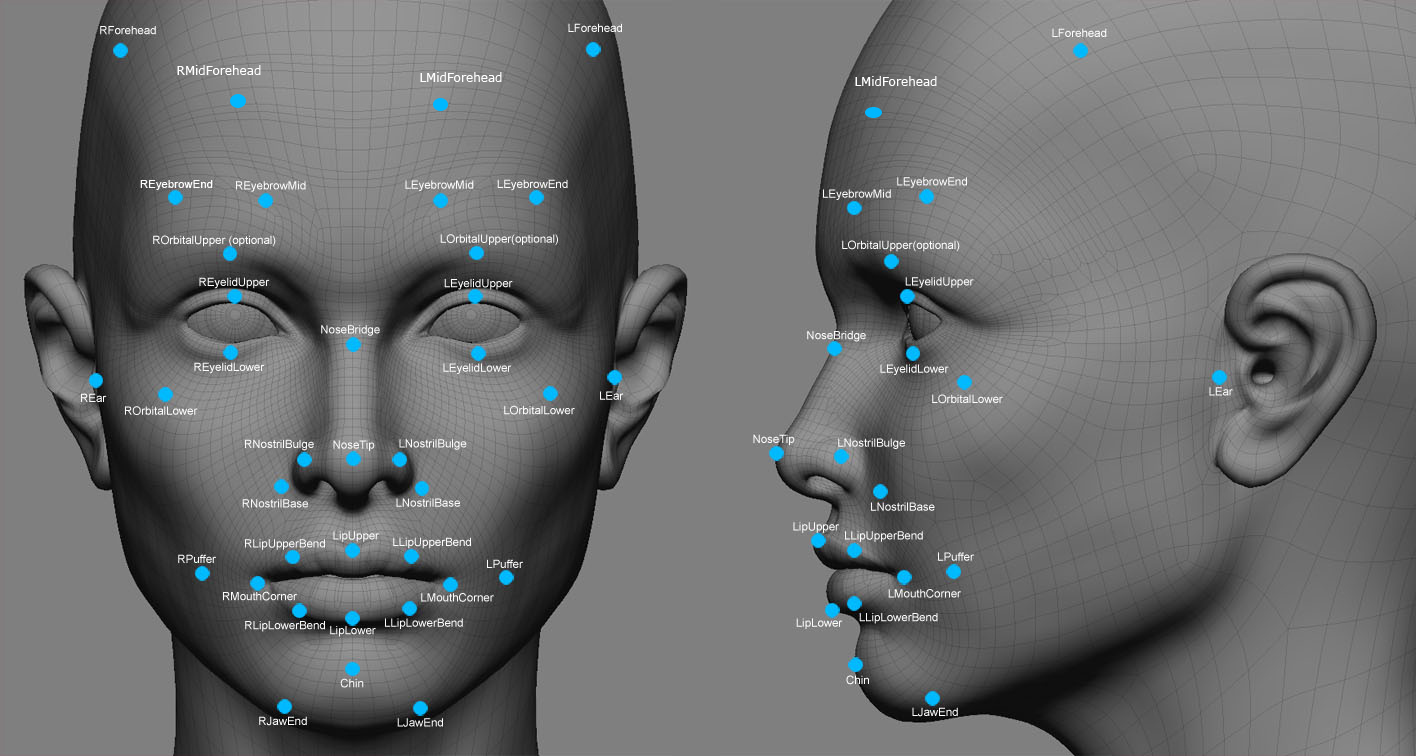

Face video scan detects heart disease

According to University of Rochester professor Jean-Philippe Couderc, cardiac disease can be identified and diagnosed using contactless video monitoring of the face. A recent study describes technology and an algorithm that scan the face and detect skin color changes imperceptible to the naked eye. Sensors in digital cameras record the colors red, green, and blue. Hemoglobin “absorbs”…
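The excerpt describes the general principle behind remote photoplethysmography (rPPG): blood volume in facial skin changes slightly with each heartbeat, and because hemoglobin absorbs light, the camera’s color values fluctuate at the pulse rate. The sketch below is a minimal illustration of that principle, not the Rochester team’s algorithm. It assumes the green channel has already been averaged over a face region in each video frame (green is a common choice in the rPPG literature), and `estimate_heart_rate_bpm` is a hypothetical helper that picks the strongest frequency in an assumed plausible heart-rate band of 42 to 240 beats per minute.

```python
import math


def estimate_heart_rate_bpm(green_means, fps, min_bpm=42.0, max_bpm=240.0, step_bpm=0.5):
    """Return the dominant pulse rate (beats/min) in a per-frame color trace.

    green_means: mean green value of the face region in each frame (assumed input).
    fps: video frame rate. The band limits and step size are illustrative assumptions.
    """
    n = len(green_means)
    if n < 2 or fps <= 0:
        raise ValueError("need at least two frames and a positive frame rate")
    avg = sum(green_means) / n
    centered = [v - avg for v in green_means]  # drop the constant skin-tone component

    best_bpm, best_power = min_bpm, -1.0
    bpm = min_bpm
    while bpm <= max_bpm:
        freq_hz = bpm / 60.0
        # Discrete Fourier transform evaluated at this single candidate frequency
        re = im = 0.0
        for i, v in enumerate(centered):
            angle = 2 * math.pi * freq_hz * i / fps
            re += v * math.cos(angle)
            im -= v * math.sin(angle)
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
        bpm += step_bpm
    return best_bpm
```

For example, a synthetic trace oscillating at 1.2 Hz, sampled at 30 frames per second for 10 seconds, should yield a value close to 72 beats per minute. A practical system would also need face tracking, motion compensation, and detrending before this step.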

-

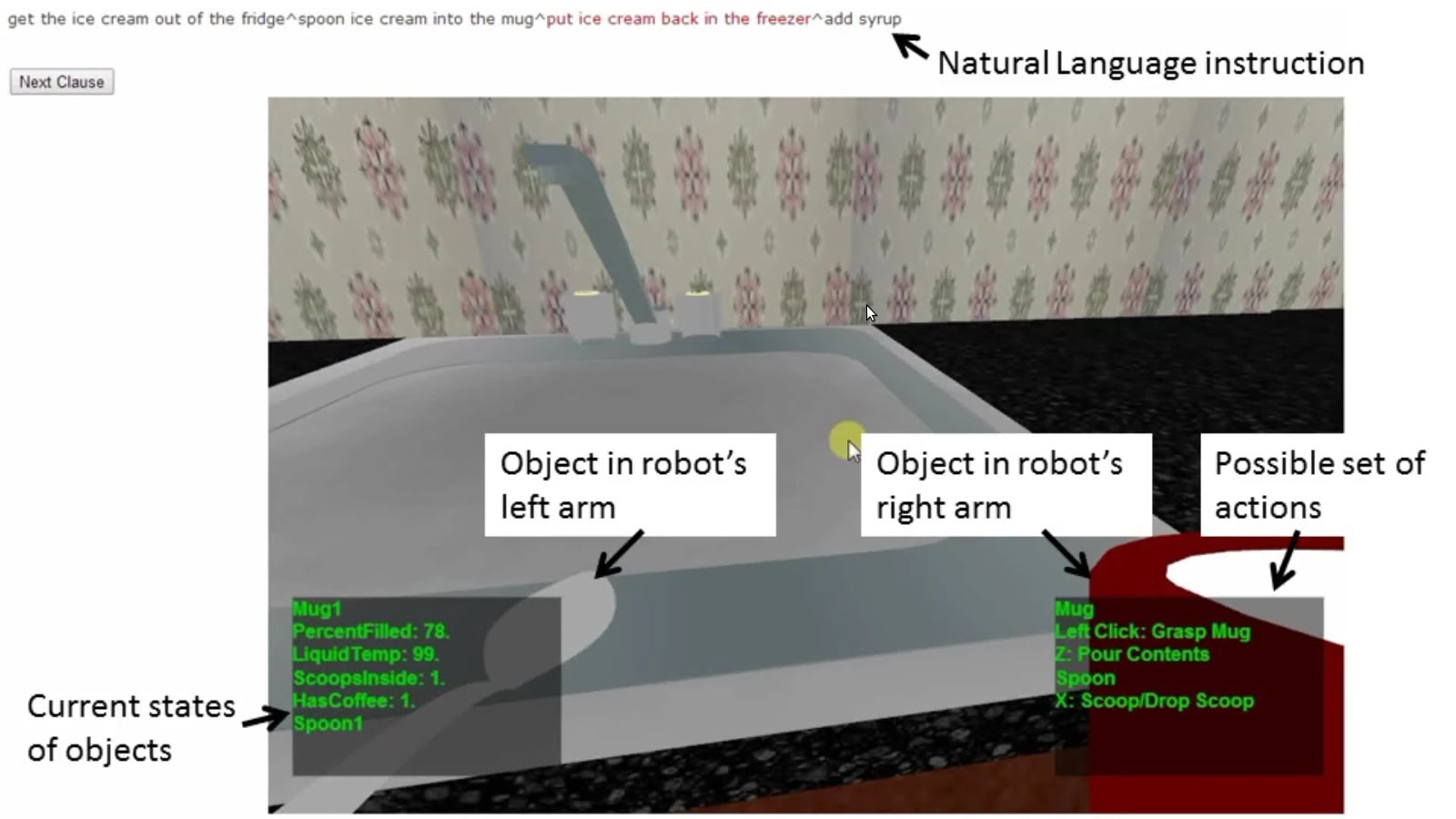

Context-sensitive robot understands casual language

Cornell professor Ashutosh Saxena is developing a context-sensitive robot that can understand natural-language commands from different speakers in colloquial English. The goal is to help robots account for missing information in instructions and adapt to their environment. The robot, Tell Me Dave, is equipped with a 3D camera for viewing its surroundings. Machine learning has enabled…

-

MD Anderson uses IBM’s Watson supercomputer to accelerate cancer-fighting knowledge

http://www-03.ibm.com/press/us/en/pressrelease/42214.wss

Houston’s MD Anderson Cancer Center is feeding IBM’s Watson “cognitive computer” case histories on more than 1 million leukemia patients, along with information about the disease, research and treatment options. Hospital staff and doctors hope it will help guide care and reduce the death rate. They also hope the supercomputer will be able to…

-

Tooth sensor monitors health

http://mll.csie.ntu.edu.tw/papers/TeethProbeISWC.pdf

National Taiwan University researchers have created a tooth-based sensor, an accelerometer, and associated machine learning software to detect and distinguish between chewing, smoking, coughing, and speaking. The ability to monitor mouth motions may help physicians track patient progress or help patients better understand their own health habits. Working prototypes of the tooth…
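A common way to turn raw accelerometer readings into labels such as “chewing” or “speaking” is to slice the stream into windows, compute a few summary features per window, and train a classifier on labeled examples. The sketch below illustrates that pipeline under stated assumptions: the three features (mean and spread of acceleration magnitude, plus a mean-crossing rate) and the nearest-centroid classifier are illustrative choices, not necessarily what the National Taiwan University prototype uses, and the function names are hypothetical.

```python
import math
from statistics import mean, pstdev


def window_features(samples):
    """Summarize one window of (x, y, z) accelerometer readings (at least two samples)."""
    mags = [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]
    m = mean(mags)
    # How often the magnitude crosses its window mean: a rough proxy for motion tempo
    crossings = sum(1 for a, b in zip(mags, mags[1:]) if (a - m) * (b - m) < 0)
    return (m, pstdev(mags), crossings / (len(mags) - 1))


def train_centroids(labeled_windows):
    """Average the feature vectors of each labeled activity (hypothetical labels)."""
    sums, counts = {}, {}
    for label, samples in labeled_windows:
        features = window_features(samples)
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}


def classify(centroids, samples):
    """Assign a window to the activity whose centroid is nearest in feature space."""
    features = window_features(samples)
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))
```

Nearest-centroid keeps the example dependency-free; because the features sit on different scales, a real system would normalize them and would likely use a stronger classifier trained on many labeled windows per activity.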

-

Brain-Computer Interface – a timeline

http://www.livescience.com/37944-how-the-human-computer-interface-works-infographics.html

From Charles Babbage’s Difference Engine of 1822 through thought control – a brief history of the intersection of mind and machine.

-

Eye tracking data helps diagnose autism, ADHD, Parkinson’s

http://www.scientificamerican.com/article.cfm?id=eye-tracking-software-may-reveal-autism-and-other-brain-disorders

USC’s Laurent Itti and researchers from Queen’s University in Ontario have created a data-heavy, low-cost method of identifying brain disorders through eye tracking. Subjects watch a video for 15 minutes while their eye movements are recorded. An enormous amount of data is generated as the average person makes three to five saccadic…
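Saccades are the rapid jumps the eye makes between fixations, and a standard way to find them in raw gaze data is velocity thresholding (often called I-VT): any run of samples whose point-to-point angular velocity exceeds a threshold is labeled a saccade. The sketch below is a generic illustration, not the USC/Queen’s pipeline. It assumes gaze positions are already converted to degrees of visual angle; the 30 degrees-per-second default threshold and the name `detect_saccades` are assumptions for illustration.

```python
import math


def detect_saccades(gaze, sample_rate_hz, velocity_threshold_deg_s=30.0):
    """Velocity-threshold saccade detection.

    gaze: list of (x_deg, y_deg) gaze positions sampled at sample_rate_hz.
    Returns inclusive (start_index, end_index) pairs for runs of consecutive
    inter-sample velocities above the threshold (threshold is an assumed default).
    """
    dt = 1.0 / sample_rate_hz
    saccades = []
    start = None
    for i in range(1, len(gaze)):
        (x0, y0), (x1, y1) = gaze[i - 1], gaze[i]
        velocity = math.hypot(x1 - x0, y1 - y0) / dt  # deg/s between samples i-1 and i
        if velocity > velocity_threshold_deg_s:
            if start is None:
                start = i - 1  # saccade begins at the last sample before the jump
        elif start is not None:
            saccades.append((start, i - 1))
            start = None
    if start is not None:  # recording ended mid-saccade
        saccades.append((start, len(gaze) - 1))
    return saccades
```

Counting the detected runs and dividing by the recording length gives a saccade rate, one of many features such a screening method could feed into a downstream classifier.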

-

fMRI and machine learning identify emotions

http://www.plosone.org/article/info%3Adoi%2F10.1371%2Fjournal.pone.0066032

Carnegie Mellon researchers have developed “a new method with the potential to identify emotions without relying on people’s ability to self-report” using a combination of fMRI and machine learning. They recruited 10 actors from the university’s drama school to act out emotions including anger, happiness, pride and shame, while inside an fMRI scanner, multiple times in…