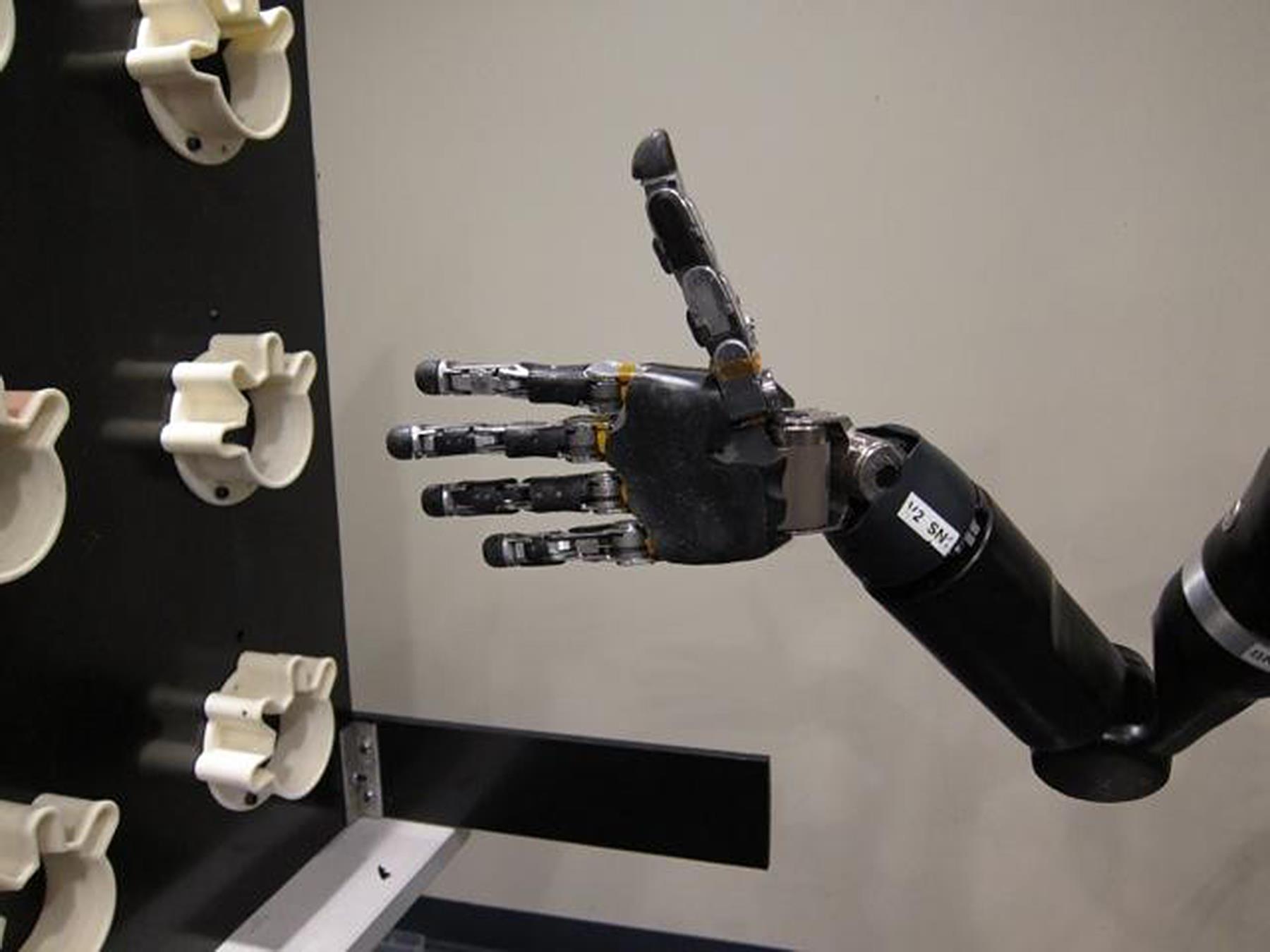

Jennifer Collinger and her University of Pittsburgh colleagues have, for the first time, enabled a trial participant to reach, grasp, and place a variety of objects using a brain-controlled prosthetic arm with 10-D control.

In 2012, the participant had electrode grids with 96 contact points surgically implanted in her brain, which initially gave her 3-D control of the prosthetic arm. Each electrode point picked up signals from an individual neuron. These signals were relayed to a computer, which identified the firing patterns associated with observed or imagined movements, such as raising or lowering the arm or turning the wrist. The decoded patterns were then used to direct the movements of a prosthetic arm developed by the Johns Hopkins Applied Physics Laboratory. Three months later, she could also flex the wrist back and forth, move it from side to side, rotate it clockwise and counter-clockwise, and grip objects, adding up to 7-D control.

The new study, published yesterday, gave the participant 10-D control: the ability to move the prosthetic hand into different positions while also controlling the arm and wrist.

To bring the total number of arm and hand movements to 10, the team replaced the pincer grip with four hand shapes: finger abduction, in which the fingers are spread out; scoop, in which the last fingers curl in; thumb opposition, in which the thumb moves outward from the palm; and a pinch of the thumb, index, and middle fingers. As before, the participant watched animations and imagined the movements while the team recorded her brain signals. The researchers used these recordings to decode her intended movements, allowing her to move the hand into various positions.