Tag: Featured

-

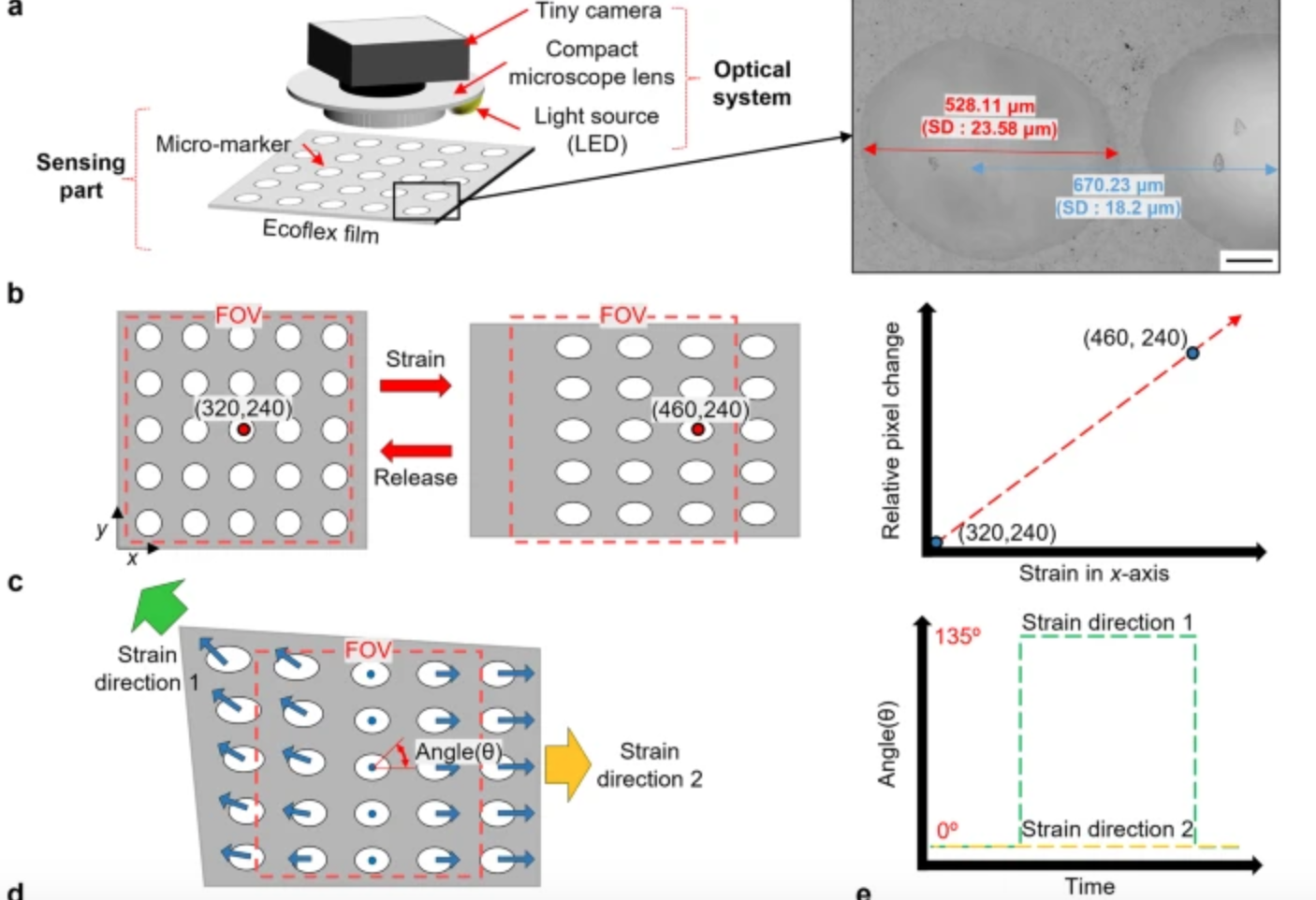

Computer vision enhanced sensors improve rehabilitation

POSTECH professor Sung-Min Park and researcher Sunguk Hong have developed optical sensor technology, integrated with computer vision, to track muscle movements in rehab patients with limited mobility. The sensors are flexible, lightweight, and able to gauge subtle body changes, while overcoming the conventional challenges of soft strain sensors – inadequate durability due to temperature and…

-

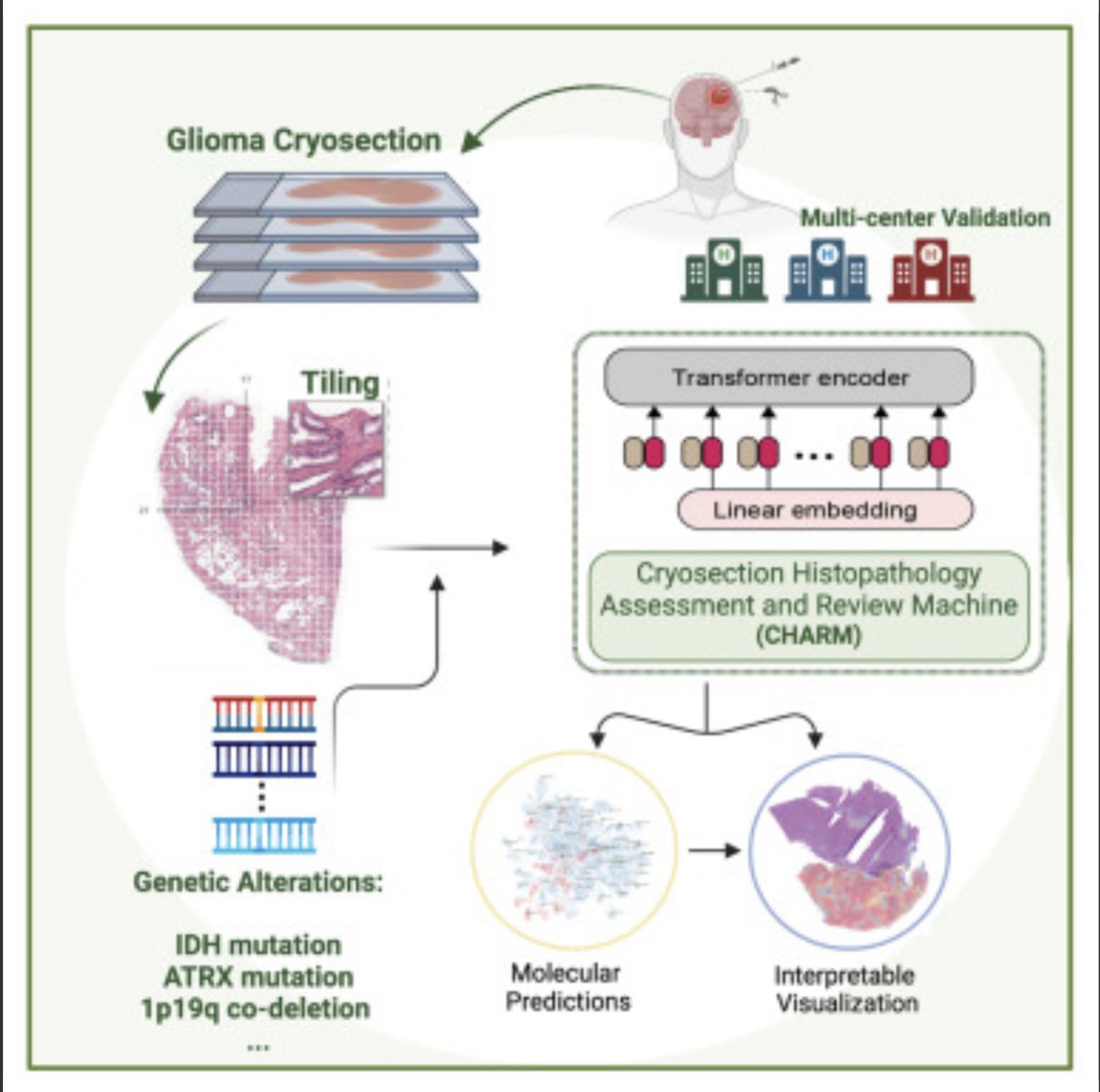

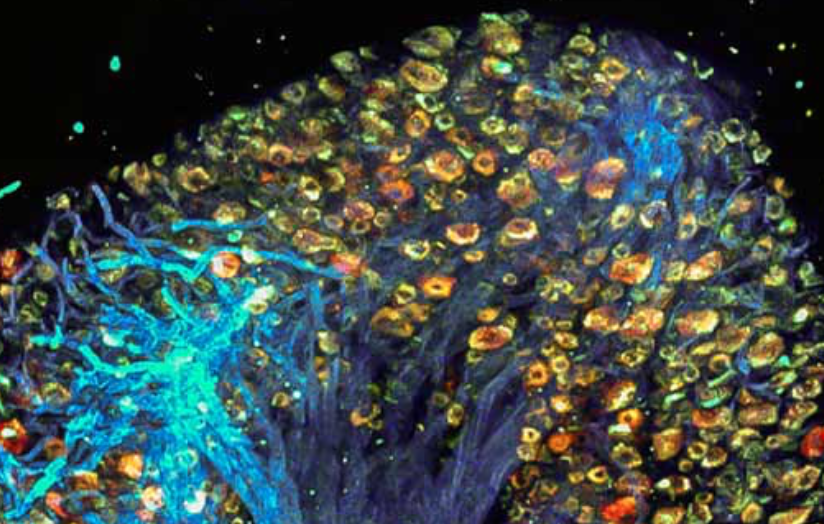

AI decodes brain tumor DNA during surgery

Kun-Hsing Yu and HMS colleagues used AI to rapidly determine a brain tumor’s molecular identity during surgery, propelling the development of precision oncology. The tool is CHARM (Cryosection Histopathology Assessment and Review Machine). Currently, genetic sequencing takes days to weeks. Accurate molecular diagnosis during surgery can help a neurosurgeon decide how much brain tissue…

-

Wearable ultrasound to detect early breast cancer

MIT’s Canan Dagdeviren has developed a flexible, reusable ultrasound patch that can be attached to a bra and obtains ultrasound images with resolution comparable to that of medical imaging centers. Interval cancers, which develop between regularly scheduled mammograms, account for 20 to 30 percent of all breast cancers and tend to be more aggressive. The goal…

-

AI detects diabetes from fatty tissue in chest x-rays

Judy Wawira Gichoya and Emory colleagues have developed an AI model that detects warning signs for diabetes in x-rays collected during routine exams. The signs were also detected in patients who do not meet elevated risk guidelines. Applying deep learning to images and electronic health record data, the model successfully flagged elevated diabetes risk in a retrospective analysis, often years before patients were…

-

AI-based device increases adenoma detection during colonoscopy

MAGENTIQ-COLO is an AI-based, FDA-cleared colonoscopy device that offers a significant increase in adenoma detection rate. Current high rates of missed and undetected adenomas during colonoscopy mean that even regularly screened patients are still at risk of developing colon cancer. A missed polyp can lead to interval cancer, which accounts for approximately 8%…

-

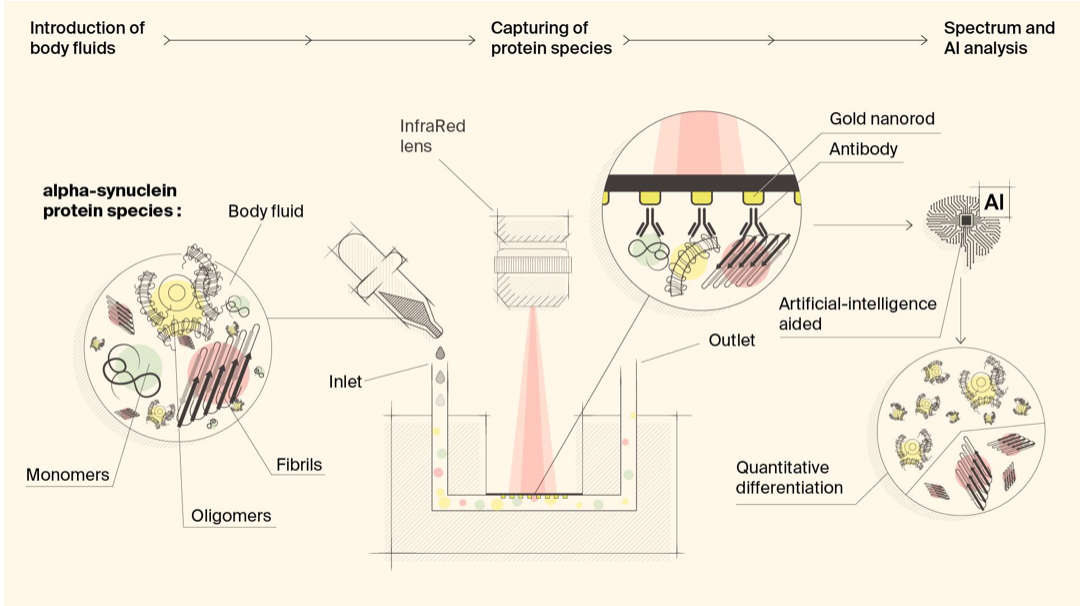

Biosensor detects misfolded proteins in Parkinson’s and Alzheimer’s disease

Hatice Altug, Hilal Lashuel, and EPFL colleagues have developed ImmunoSEIRA, an AI-enhanced biosensing tool for the detection of misfolded proteins linked to Parkinson’s and Alzheimer’s disease. The researchers also claim that neural networks can quantify disease stage and progression. The technology holds promise for early detection, monitoring, and assessing treatment options. Protein misfolding has been…

-

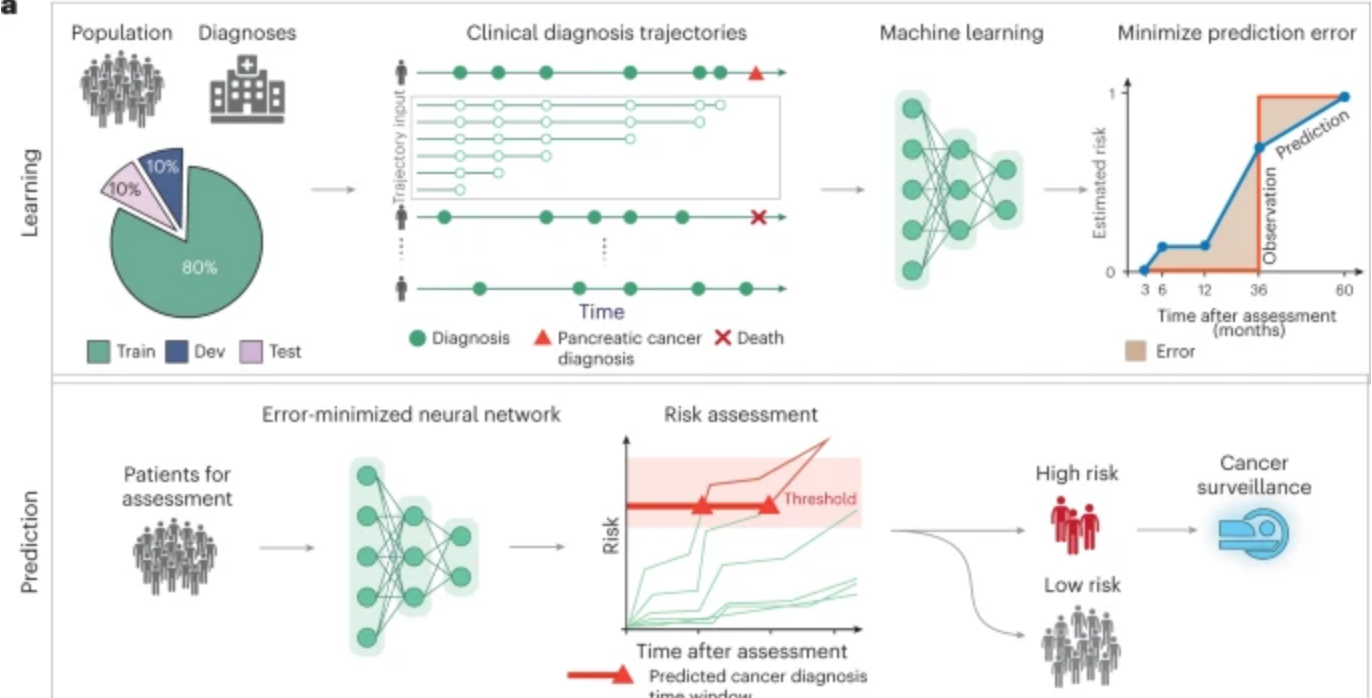

AI predicts pancreatic cancer

Harvard professor Chris Sander used clinical data from 6 million patients in Denmark’s national health system and 3 million in the U.S. VA system to train an AI model to predict the occurrence of pancreatic cancer within 3, 6, 12, and 36 months. This could allow wider screening for the aggressive disease, which is often…

-

Brain-spine interface allows paraplegic man to walk

EPFL professor Grégoire Courtine has created a “digital bridge” that has allowed a man whose spinal cord damage left him with paraplegia to walk. The brain–spine interface builds on previous work, which combined intensive training and a lower spine stimulation implant. Gert-Jan Oskam participated in this trial, but stopped improving after three years. The new…

-

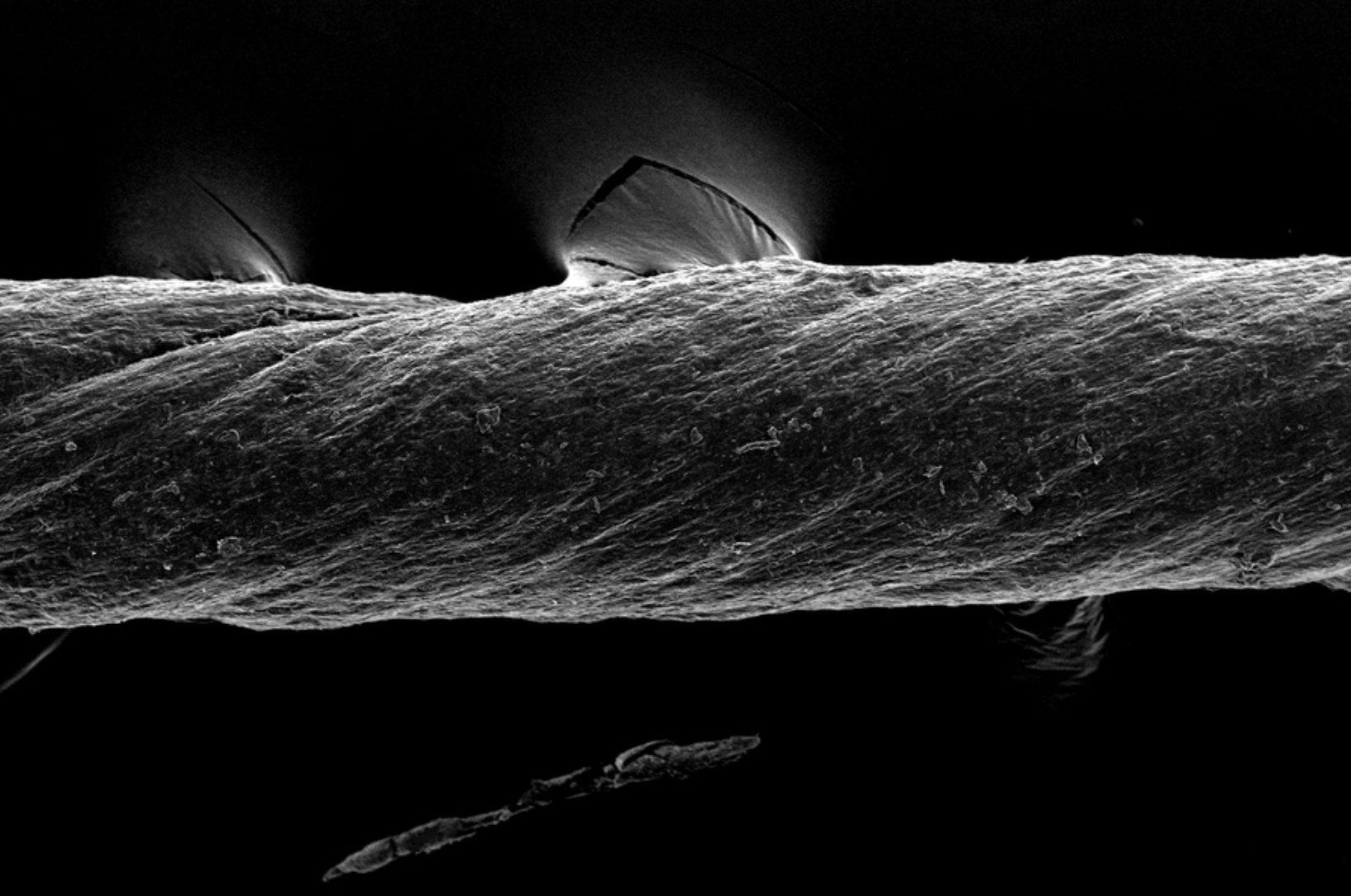

Hydrogel-coated sutures sense inflammation, can deliver drugs and stem cells

Giovanni Traverso has designed tough, absorbable, hydrogel-coated sutures which, in addition to holding post-surgery or wound-affected tissue in place, can sense inflammation and deliver drugs, including monoclonal antibodies. They could also be used to deliver stem cells. The sutures were created from pig tissue, “decellularized” with detergents, to reduce the chances of inducing inflammation in…

-

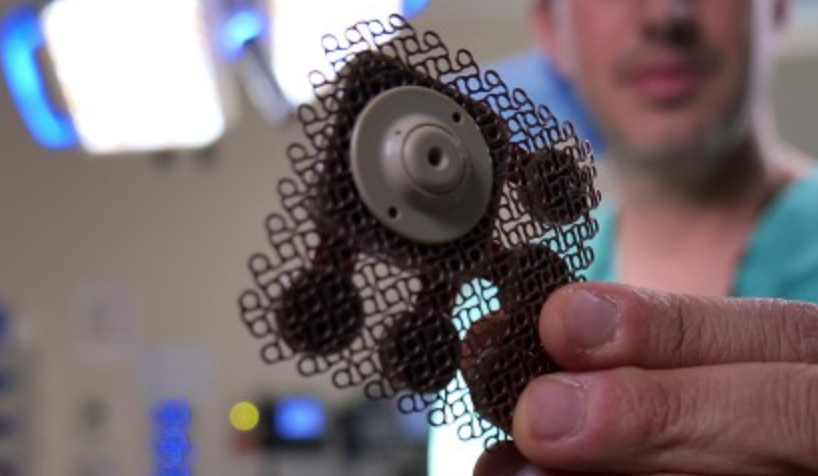

Implanted ultrasound allows powerful chemotherapy drugs to cross the blood-brain barrier

Adam Sonabend and Northwestern colleagues used a skull-implantable ultrasound device to open the blood-brain barrier and repeatedly permeate critical regions of the human brain, to deliver intravenous chemotherapy to glioblastoma patients. This is the first study to successfully quantify the effect of ultrasound-based blood-brain barrier opening on the concentrations of chemotherapy in the human brain.…

-

Study: Molecular mechanism of accelerated cognitive decline in women with Alzheimer’s

Hermona Soreq, Yonatan Loewenstein, and Hebrew University of Jerusalem colleagues have uncovered a sex-specific molecular mechanism leading to accelerated cognitive decline in women with Alzheimer’s disease. Current therapeutic protocols are based on structural changes in the brain and aim to delay symptom progression. Women typically experience more severe side effects from these drugs. This research…

-

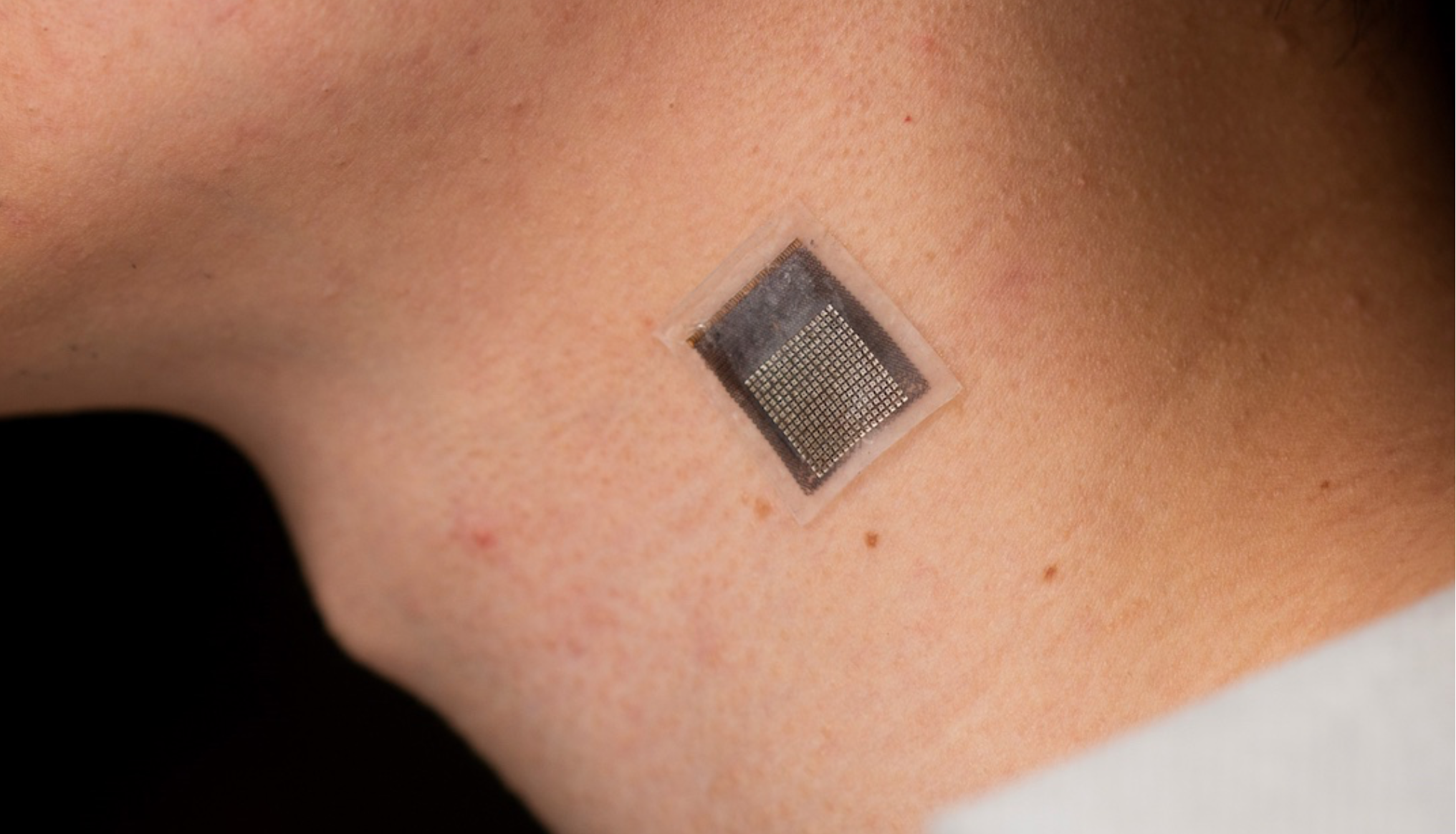

Wearable sensor evaluates human tissue stiffness

Sheng Xu and colleagues have developed a wearable, stretchable device that non-invasively evaluates the stiffness of human tissue at an improved penetration depth, and for a longer period, than existing methods. An ultrasonic array facilitates serial, non-invasive, three-dimensional imaging of tissues four centimeters below the surface of human skin, at a spatial resolution of 0.5…