Category: Prosthetics

-

Applied robot control theory for more natural prosthetic leg movement

Prosthetics are lighter and more flexible than they used to be, but they still fail to mimic the power of human muscle. The motors in powered prostheses generate force, but they cannot respond stably to disturbances or changing terrain. Robert Gregg and colleagues at the University of Texas have applied robot control theory so that powered prostheses can respond dynamically to the wearer's environment.…
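One idea from robot control theory that shows why this matters is to index a prosthesis's motion by a measured phase variable instead of by a clock, so the leg advances through its motion only as fast as the wearer does. The Python below is a minimal sketch of that idea under stated assumptions: the phase source, the cosine knee trajectory, and the gains are hypothetical illustrations, not Gregg's published controller.

```python
import math

def phase_variable(theta, theta_start, theta_end):
    # Normalize a measured quantity that increases steadily over a stride
    # (hypothetical choice, e.g. a thigh angle) into a gait phase in [0, 1].
    s = (theta - theta_start) / (theta_end - theta_start)
    return min(max(s, 0.0), 1.0)

def desired_knee_angle(s):
    # Hypothetical knee trajectory in radians, written as a function of phase
    # rather than time: 0 at the start of the stride, peaking at 1.0 at s = 0.5.
    return 0.5 * (1.0 - math.cos(2.0 * math.pi * s))

def knee_torque(theta, knee, knee_vel,
                theta_start=-0.3, theta_end=0.4, kp=50.0, kd=2.0):
    # PD feedback that tracks the phase-indexed target; all constants are made up.
    s = phase_variable(theta, theta_start, theta_end)
    error = desired_knee_angle(s) - knee
    return kp * error - kd * knee_vel

# If the wearer stops mid-stride, theta stops changing, so the target holds still.
print(knee_torque(theta=0.05, knee=0.8, knee_vel=0.0))
```

Because the target depends on where the wearer is in the stride rather than on elapsed time, a slower step or a stumble on uneven ground changes the commanded motion automatically; a time-indexed trajectory would keep playing regardless.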

-

NIH “Bionic Man” with 14 sensor- and brain-controlled functions

The National Institute of Biomedical Imaging and Bioengineering recently launched the “NIBIB Bionic Man,” an interactive Web tool detailing 14 sensor-based technologies that the institute supports. They include: 1. A robotic leg prosthesis that senses a person's next move and provides powered assistance for a more natural gait. 2. A light-sensitive biogel and…

-

Virtual reality movement training for amputees

CAREN, developed at the University of South Florida, helps people with limb loss who use prostheses improve basic function, symmetry, and walking efficiency. It is also a tool for researchers studying ways to improve mobility and balance. Wearing a safety harness and walking on a treadmill inside the room-sized system, participants in a recent study engaged in audio-visual balance…
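Walking symmetry is often quantified with a symmetry index that compares the same gait measure on the two sides of the body. The sketch below uses one common form, 100 × (intact minus prosthetic) / (half of intact plus prosthetic); it is an assumption for illustration, since the source does not say which measure CAREN reports, and the step lengths are made-up numbers.

```python
def symmetry_index(intact, prosthetic):
    # Percent difference between the intact-side and prosthetic-side value of
    # one gait measure (e.g. step length), relative to the mean of the two.
    # 0 means perfectly symmetric; the sign shows which side is larger.
    mean = 0.5 * (intact + prosthetic)
    if mean == 0:
        raise ValueError("measures must not both be zero")
    return 100.0 * (intact - prosthetic) / mean

# Hypothetical step lengths in meters, before and after training.
before = symmetry_index(intact=0.70, prosthetic=0.60)
after = symmetry_index(intact=0.68, prosthetic=0.66)
print(f"before: {before:.1f}%  after: {after:.1f}%")
```

Normalizing by the mean of the two sides keeps the index comparable across people of different heights and walking speeds.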

-

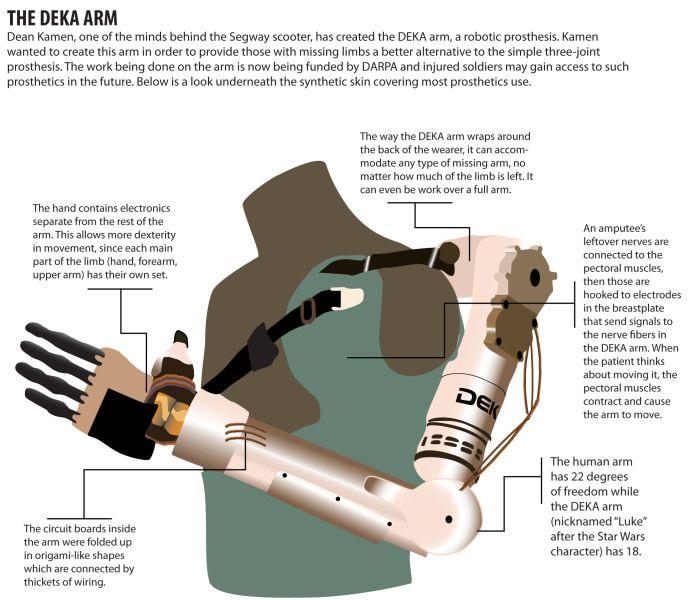

Prosthetic arm moves after muscle contraction detected

The DEKA arm is a robotic prosthetic arm that allows amputees to perform complex movements and tasks, and it has just received FDA approval. Electrodes attached to the wearer's arm detect muscle contractions near the prosthesis, and a computer translates them into movement. Six “grip patterns” allow wearers to drink a cup of water, hold a cordless…
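The source does not describe DEKA's signal processing, but a common textbook pipeline for turning muscle signals into commands is to smooth the raw electrode signal into an envelope, detect when it crosses a threshold, and map detected contractions to actions. The Python below is a minimal sketch under those assumptions; the window size, the threshold, and the rule that each contraction advances to the next of six placeholder grips are hypothetical, not DEKA's control scheme.

```python
import math

def rms_envelope(samples, window):
    # Moving root-mean-square of the raw electrode signal: turns a noisy,
    # sign-alternating signal into a smooth measure of contraction strength.
    env = []
    for i in range(len(samples)):
        chunk = samples[max(0, i - window + 1): i + 1]
        env.append(math.sqrt(sum(x * x for x in chunk) / len(chunk)))
    return env

def contraction_onsets(envelope, threshold):
    # Indices where the envelope first rises to or above the threshold.
    onsets, active = [], False
    for i, level in enumerate(envelope):
        if level >= threshold and not active:
            onsets.append(i)
            active = True
        elif level < threshold:
            active = False
    return onsets

# Six placeholder names standing in for the arm's six grip patterns.
GRIPS = [f"grip_{k}" for k in range(1, 7)]

def select_grip(n_contractions, current=0):
    # Hypothetical rule: each detected contraction advances to the next grip.
    return GRIPS[(current + n_contractions) % len(GRIPS)]

raw = [0, 0, 0, 1, -1, 1, -1, 0, 0, 0]  # made-up samples with one burst
onsets = contraction_onsets(rms_envelope(raw, window=2), threshold=0.5)
print(onsets, select_grip(len(onsets)))  # prints [3] grip_2
```

Tracking whether the signal is already above threshold means one sustained contraction counts once, rather than once per sample.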

-

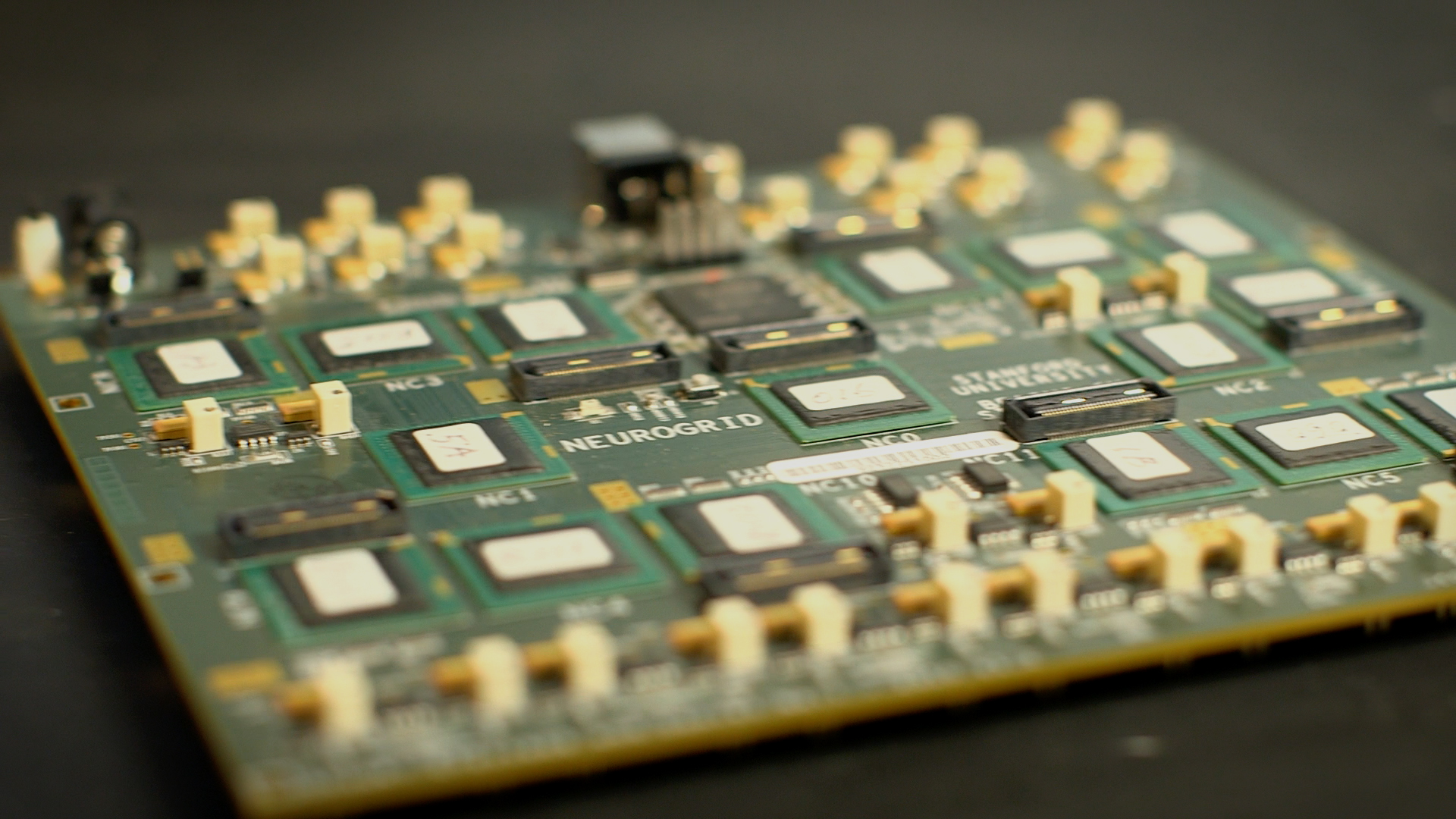

“Brain modeled” chip with prosthetic potential

Neurogrid is a “human brain based” microchip that is 9,000 times faster than a typical PC while requiring 1/40,000 of its power. It is being developed by Professor Kwabena Boahen at Stanford University. The circuit board consists of 16 custom-designed “Neurocore” chips that together can simulate 1 million neurons and billions of synaptic connections. Certain synapses were enabled…
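To show what simulating a single neuron involves, the sketch below steps the textbook leaky integrate-and-fire model forward in time: the membrane voltage leaks toward rest, integrates input current, and emits a spike and resets when it crosses a threshold. This generic model is an assumption chosen for illustration; it is not a description of the circuits on Neurogrid's Neurocore chips, and all parameter values are arbitrary.

```python
def simulate_lif(input_current, dt=1e-3, tau=0.02, v_rest=0.0,
                 v_thresh=1.0, v_reset=0.0, r=1.0):
    # Forward-Euler integration of tau * dv/dt = -(v - v_rest) + r * I(t).
    # Returns the time-step indices at which the neuron spiked.
    v = v_rest
    spikes = []
    for step, current in enumerate(input_current):
        v += dt * (-(v - v_rest) + r * current) / tau
        if v >= v_thresh:
            spikes.append(step)
            v = v_reset  # reset after each spike
    return spikes

# 100 ms of constant input, in arbitrary units.
weak = simulate_lif([0.5] * 100)    # steady-state voltage 0.5 never reaches threshold
strong = simulate_lif([2.0] * 100)  # steady-state voltage 2.0 drives regular firing
print(len(weak), len(strong))  # prints 0 7
```

A chip like Neurogrid is valuable because it carries out this kind of update for a million neurons at once, instead of looping over them one at a time as this code does.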

-

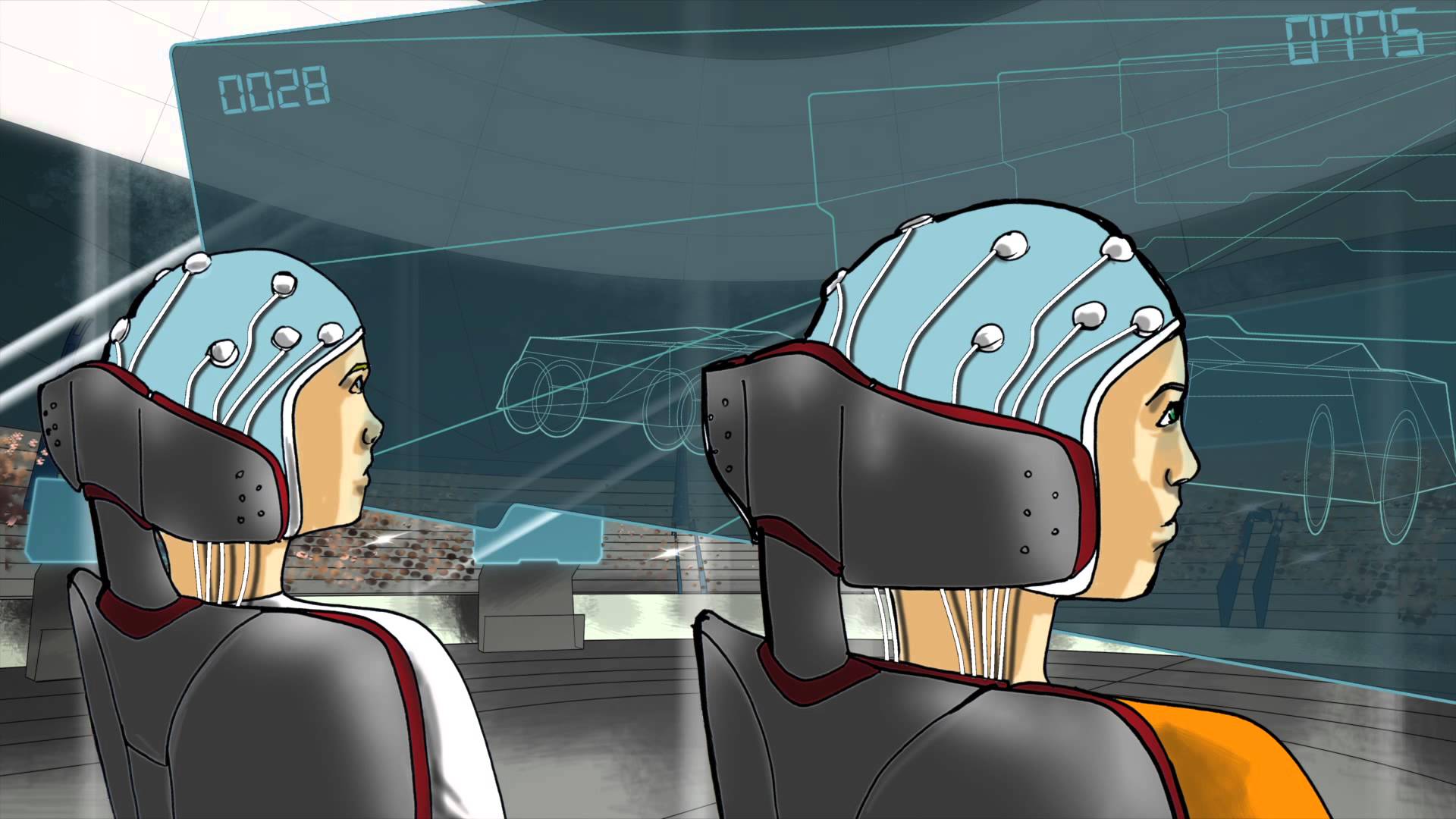

BCI and robotic prosthetic paralympic competition

Switzerland will host the world's first Cybathlon, an Olympic-style competition for parathletes using robotic assistive devices and brain-computer interfaces. It will include six events: a bike race, a leg prosthesis race, a wheelchair race, an exoskeleton race, an arm prosthesis race (including electrical muscle stimulation), and a brain-computer interface race for competitors with full paralysis. Unlike the Olympics, where athletes…

-

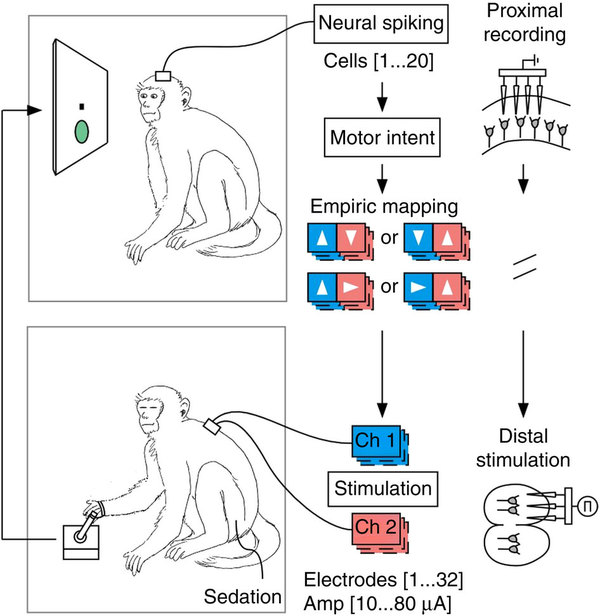

Cortical-spinal prosthesis directs “targeted movement” in paralyzed limbs

Source: http://www.nature.com/ncomms/2014/140218/ncomms4237/full/ncomms4237.html

Cornell's Maryam Shanechi, Harvard's Ziv Williams and colleagues developed a cortical-spinal prosthesis that directs “targeted movement” in paralyzed limbs. The prosthesis they tested links two subjects: neural activity recorded from one subject controls limb movements in a second, temporarily sedated subject. The brain-machine interface (BMI) is based on a set of real-time…
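The teaser does not say how the real-time BMI decodes intent, so the following only illustrates one standard approach, swapped in as an assumption: a maximum-likelihood decoder that treats each recorded neuron as firing with Poisson statistics and picks the movement target whose expected firing rates best explain the observed spike counts. The target names, tuning rates, and counts are hypothetical.

```python
import math

def decode_target(spike_counts, tuning):
    # tuning maps each candidate target to the expected spike count of every
    # neuron in one time bin (all rates must be > 0). Under an independent
    # Poisson model, the log-likelihood of the counts is, up to a constant
    # that is the same for every target, sum(n * log(rate) - rate).
    best_target, best_ll = None, -math.inf
    for target, rates in tuning.items():
        ll = sum(n * math.log(lam) - lam for n, lam in zip(spike_counts, rates))
        if ll > best_ll:
            best_target, best_ll = target, ll
    return best_target

# Hypothetical tuning for three neurons and two targets.
tuning = {"left": [10.0, 2.0, 5.0], "right": [2.0, 10.0, 5.0]}
print(decode_target([9, 1, 5], tuning))  # prints left
```

In a prosthesis of this kind, the decoded target would then be used to choose the stimulation delivered to the second subject; that mapping is device-specific and is not sketched here.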

-

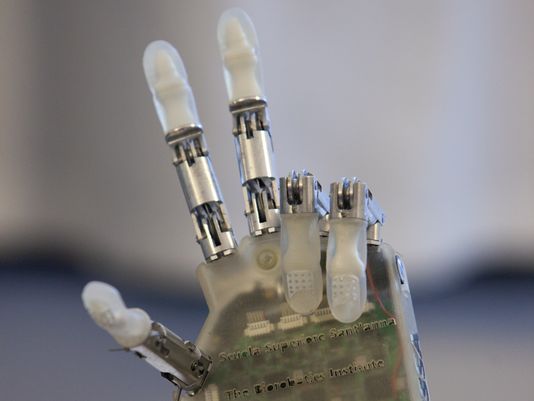

Trial: Improved sense of touch and control in prosthetic hand

Source: http://stm.sciencemag.org/content/6/222/222ra19

In the ongoing effort to improve the dexterity of prosthetics, a recent trial showed an improved sense of touch and control over a prosthetic hand. EPFL professor Silvestro Micera and colleagues surgically connected electrodes from a robotic hand to a volunteer's median and ulnar nerves. Those nerves carry sensations that correspond to the volunteer's index finger and…
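The teaser does not specify how sensor readings become nerve stimulation. A minimal sketch of one plausible encoding, assumed here for illustration, scales a fingertip force reading linearly into a stimulation amplitude between a minimum and a maximum level, and routes each fingertip sensor to the nerve that serves that finger (the median nerve for the index finger, the ulnar nerve for the little finger). The sensor names, units, and every numeric limit are hypothetical.

```python
def force_to_amplitude(force, f_max, amp_min, amp_max):
    # No contact means no stimulation; otherwise scale linearly with force
    # between amp_min and amp_max, saturating at f_max.
    if force <= 0:
        return 0.0
    fraction = min(force / f_max, 1.0)
    return amp_min + fraction * (amp_max - amp_min)

# Hypothetical routing from fingertip sensors to the nerve serving that finger.
SENSOR_TO_NERVE = {"index": "median", "little": "ulnar"}

def encode(readings, f_max=10.0, amp_min=20.0, amp_max=80.0):
    # readings: sensor name -> force (hypothetical units: newtons).
    # Returns nerve name -> stimulation amplitude (hypothetical units: microamps).
    return {SENSOR_TO_NERVE[name]: force_to_amplitude(f, f_max, amp_min, amp_max)
            for name, f in readings.items()}

print(encode({"index": 5.0, "little": 0.0}))  # prints {'median': 50.0, 'ulnar': 0.0}
```

Starting the scale at a nonzero minimum reflects the idea that the lightest touch should still produce a stimulus strong enough to feel, while the cap keeps stimulation within a safe range.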