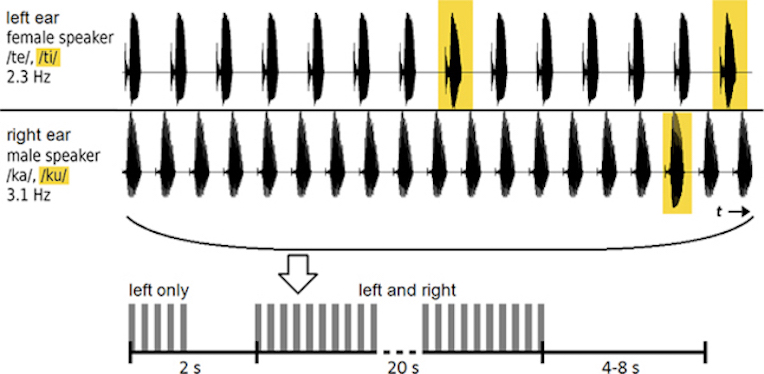

University of Oldenburg student Carlos Filipe da Silva Souto is in the early stages of developing a brain-computer interface that can tell a user whom he or she is listening to in a noisy room. Wearers could focus on specific conversations and tune out background noise.

Most BCI studies have focused on visual stimuli. Systems based on visual stimuli typically outperform those based on auditory stimuli, possibly because of the larger cortical surface of the visual system. As researchers further optimize classification methods for auditory systems, their performance is expected to improve.

The goal is for visually impaired or paralyzed people to be able to use natural speech features to control hearing devices.
