Scientist-led conferences at Harvard, Stanford and MIT

-

Verily developing low-power health wearable

While visiting Verily last week, an MIT Technology Review journalist saw and described the company's wearable vital-signs tracker, which CTO Brian Otis called the "Cardiac and Activity Monitor." Its novelty is a low-power e-paper display, intended to address the common wearable problem of short battery life. Only with reliable continuous measurement can meaningful data be gathered and health analyzed. The…

-

Monkeys type Shakespeare with thoughts; human trials underway

Stanford professor Krishna Shenoy has developed technology that reads signals from electrodes implanted in monkeys' brains and uses them to control a cursor moving over a keyboard. A clinical trial will begin soon, with the goal of creating brain-computer interfaces to help paralyzed people communicate. This could overcome the limitations of eye-controlled keyboards, which do not…

-

Robotic hand exoskeleton for stroke patients

ETH professor Roger Gassert has developed a robotic exoskeleton that allows stroke patients to perform daily activities by supporting motor and somatosensory functions. His vision is that “instead of performing exercises in an abstract situation at the clinic, patients will be able to integrate them into their daily life at home, supported by a robot.”…

-

Mobile brain health management

Brown and Tel Aviv University professor Nathan Intrator scanned the brains of patients with ALS, epilepsy, schizophrenia, or memory impairment, minimally conscious patients, and healthy subjects to monitor brain health and treatment effectiveness. He has since commercialized his algorithms and launched Neurosteer. At ApplySci's recent NeuroTech NYC conference, Professor Intrator discussed dramatic advances in BCI, monitoring, and neurofeedback, in his…

-

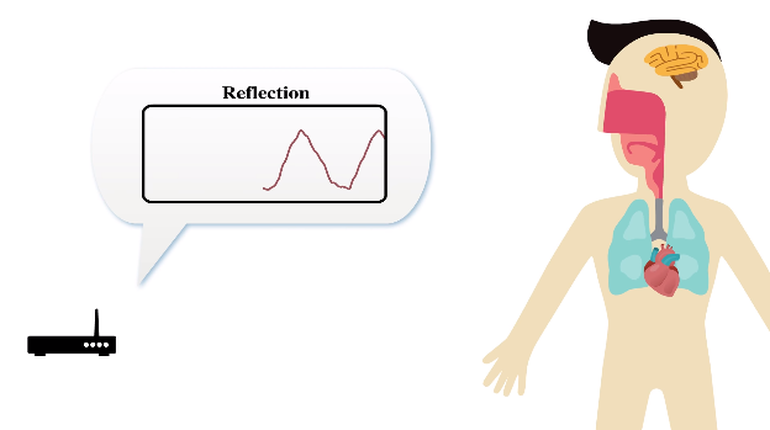

Wireless signals detect emotions

Mingmin Zhao and MIT colleagues have developed a device that detects emotions remotely, emitting wireless signals to measure heartbeat and breathing. EQ-Radio detects whether a person is excited, happy, angry, or sad without wearable sensors or facial recognition technology. Radio signals are reflected off a person's body and back to the device. Algorithms detect…

-

Continuously generated “small data” analysis to improve health

The discussion of "big data" analysis in healthcare continues unabated. At ApplySci's recent Wearable Tech + Digital Health + NeuroTech NYC conference, Cornell professor Deborah Estrin, who is also a founder of Open mHealth, described how "small data" (our digital patterns and interactions) can help physicians by creating a more meaningful representation of our…

-

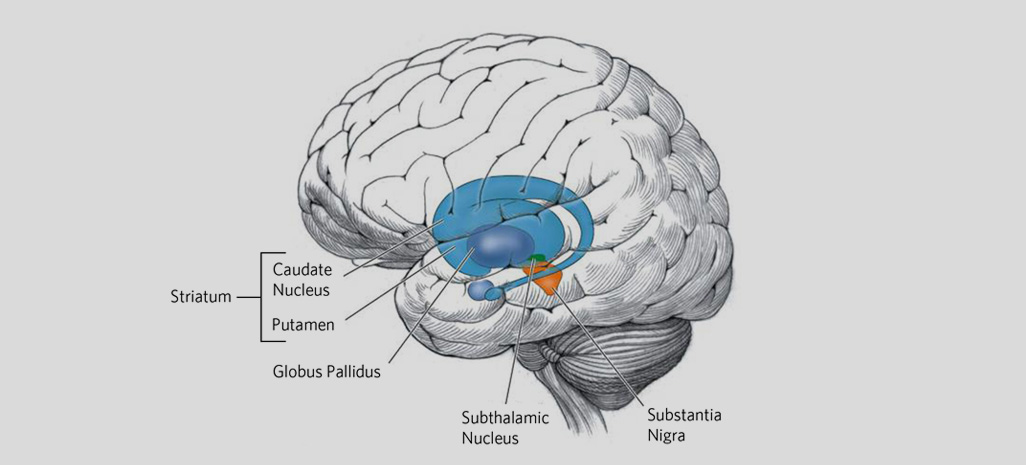

Wearable + cloud analysis track Huntington’s disease progression

In the latest pharma/tech partnership, Teva and Intel are developing a wearable platform to track the progression of Huntington’s disease. There is no cure for the disease, which causes a breakdown of nerve cells in the brain, resulting in a decline in motor control, cognition and mental stability. The technology can be used to assess the effectiveness…

-

Sanofi/Verily joint venture to fight diabetes

Big pharma + big tech/data partnerships continue to proliferate. Onduo is a Sanofi/Verily joint venture that will combine each company's expertise to help manage diabetes: Sanofi's drugs plus Verily's software, data analysis, and devices. CEO Josh Riff has not announced a project pipeline, as the company is taking "a thoughtful approach to finding lasting…

-

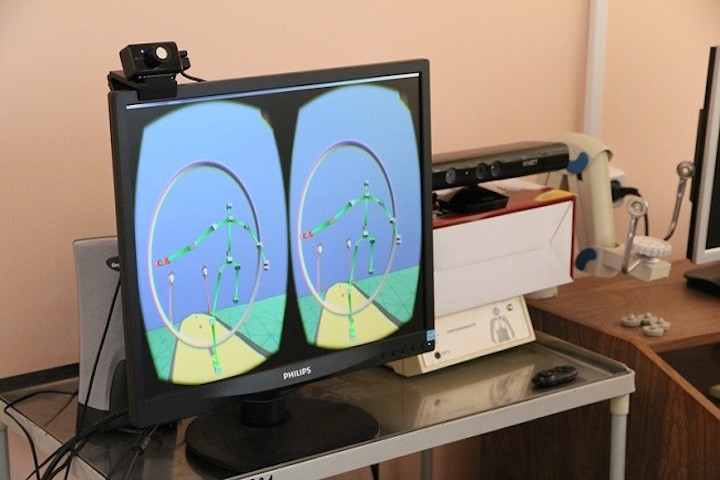

VR for early neurodegenerative disease detection, personalized rehabilitation

Tomsk Polytechnic and Siberian State University scientists David Khachaturyan and Ivan Tolmachov have developed a VR-based system for diagnosing neurodegenerative disorders. The goal is the early detection and treatment of diseases, including MS and Parkinson's. The next step is the use of systems like Glass and Kinect for personalized rehabilitation. Fifty subjects, both healthy…

-

Takeda’s digital transformation

Pharmaceutical giant Takeda is in the midst of a digital transformation, with a patient-centric approach to drug development, wearable device adoption, and sophisticated data analysis. Leading this effort is Bruno Villetelle, Takeda's Chief Digital Officer. Bruno was a keynote speaker at ApplySci's recent Wearable Tech + Digital Health + NeuroTech San Francisco conference, where he discussed digital pharma…

-

Phone sensors detect anemia, irregular breathing, jaundice

University of Washington's Shwetak Patel and his UbiComp Lab colleagues develop non-invasive, smartphone-based tests meant to bring diagnostics to the masses. HemaApp, a method that detects anemia using a smartphone and a light source, could be especially useful in areas lacking access to care, since anemia is extremely common in poor countries. In a recent study, a phone camera was used…

-

“Data, not drugs” for elite sport performance

With equal parts modesty, enthusiasm, and wearable tech expertise, Olympic cyclist Sky Christopherson came to ApplySci’s recent Wearable Tech + Digital Health + NeuroTech NYC conference to “thank this community for helping the US Olympic team before the last Olympics with a lot of the same technology to help athletes prepare, using data and not drugs.”…
