Category: Brain

-

Neural network assesses sleep patterns for passive Parkinson’s diagnosis

MIT’s Dina Katabi has developed a non-contact, neural network-based system that detects Parkinson’s disease while a person is sleeping. By assessing nocturnal breathing patterns, the system’s algorithms detect the disease and track its progression every night, at home. A device in the bedroom emits radio signals, analyzes their reflections off the surrounding…

-

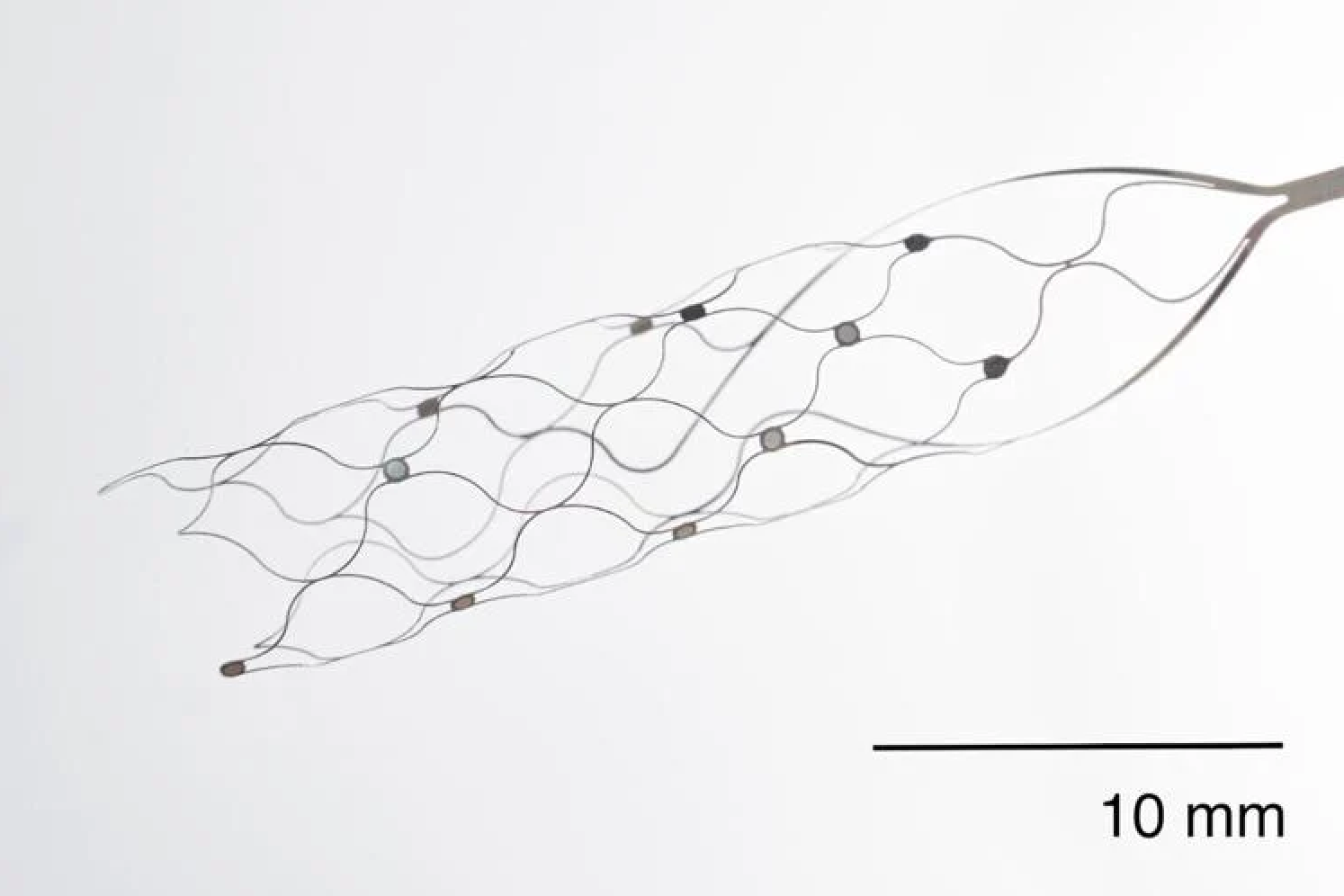

First US patient receives Synchron endovascular BCI implant

On July 6, 2022, Mount Sinai’s Shahram Majidi threaded Synchron’s 1.5-inch-long wire-and-electrode implant into a blood vessel in the brain of a patient with ALS. The goal is for the patient, who cannot speak or move, to browse the web and communicate via email and text using only his thoughts. Four…

-

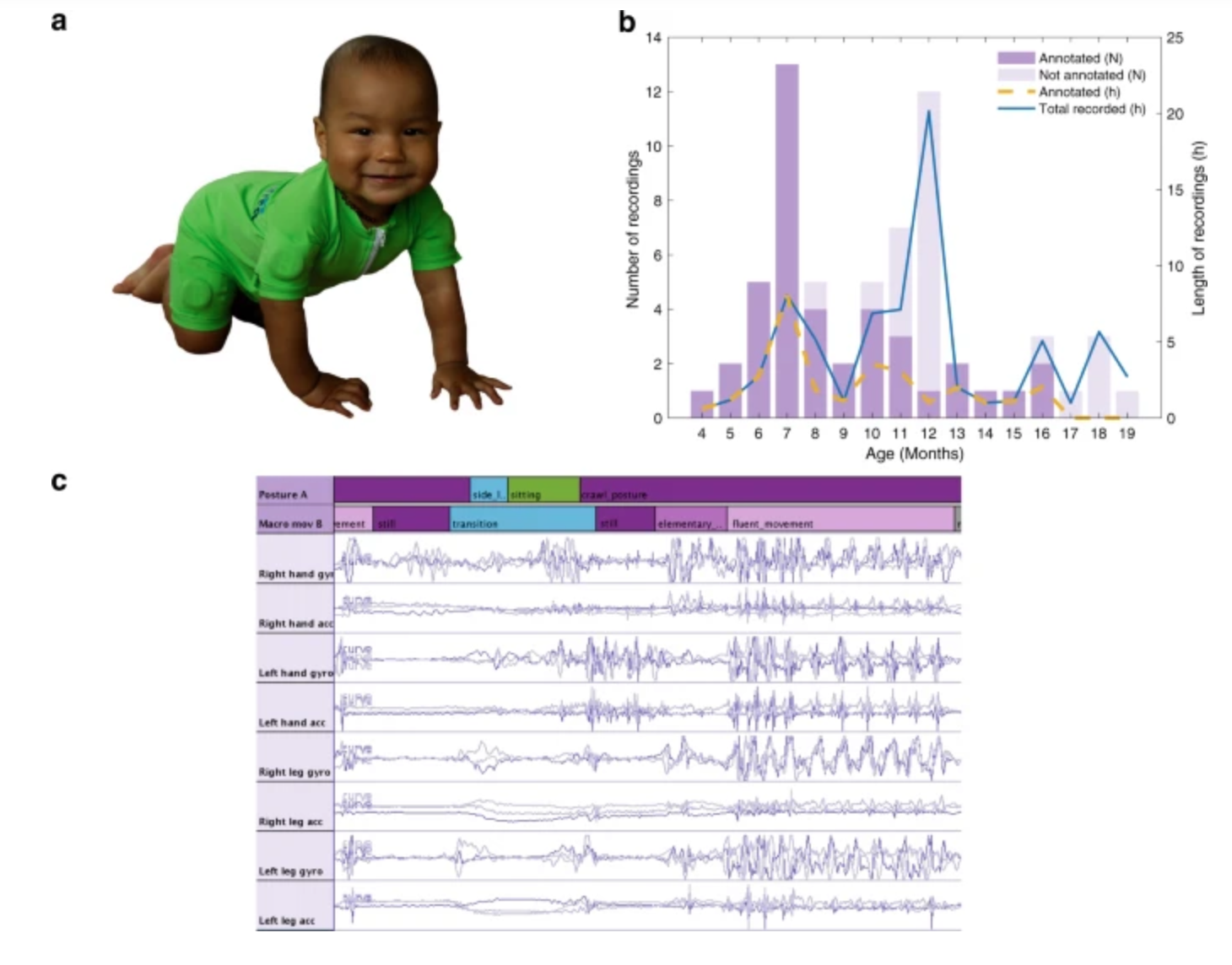

Sensor jumpsuit monitors infant motor abilities

Sampsa Vanhatalo, Manu Airaksinen and University of Helsinki colleagues have developed MAIJU (Motor Assessment of Infants with a Jumpsuit), a wearable onesie with multiple movement sensors that they believe can predict a child’s neurological development. In a recent study, 5- to 19-month-old infants were monitored using MAIJU during spontaneous playtime. Initially, infant…

-

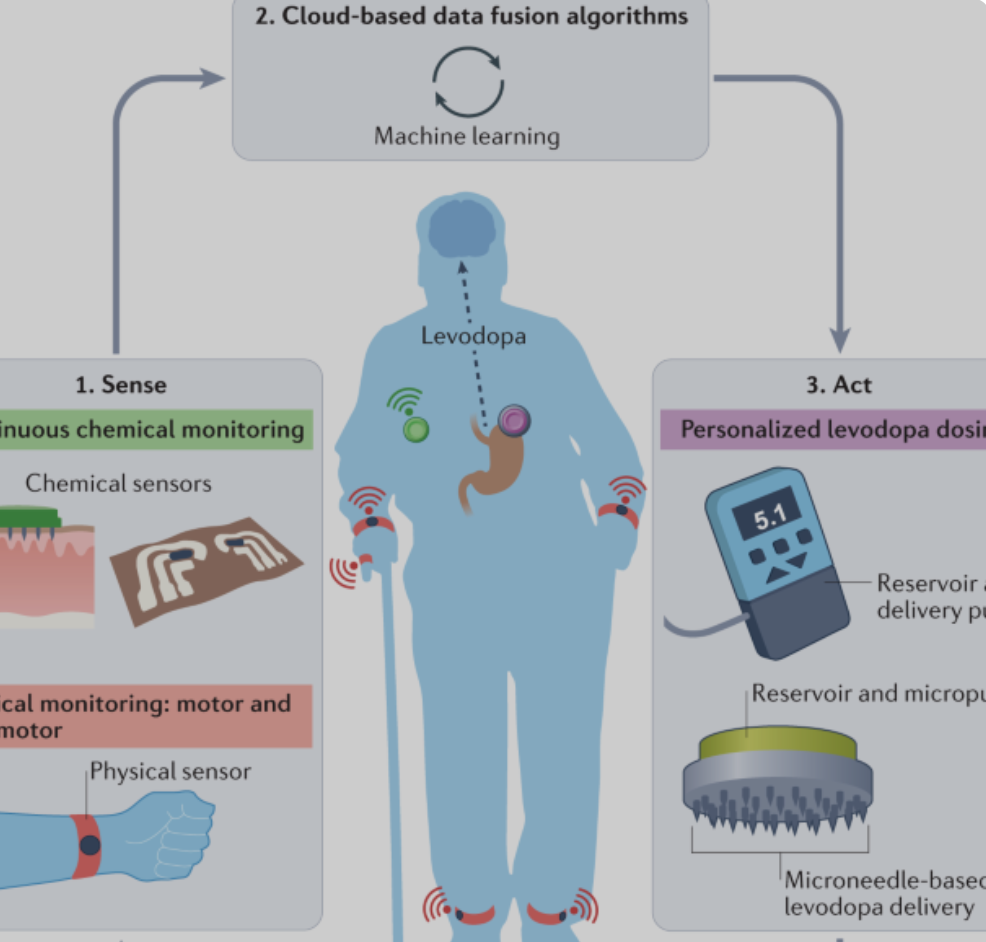

Joe Wang develops closed-loop levodopa delivery and monitoring system for Parkinson’s disease

Patients with early Parkinson’s disease benefit significantly from levodopa, which replaces dopamine and restores normal motor function. As PD progresses, the brain loses more dopamine-producing cells, which causes motor complications and unpredictable responses to levodopa. Doses must be increased over time and given at shorter intervals. Regimens are different for each person and may vary from…

-

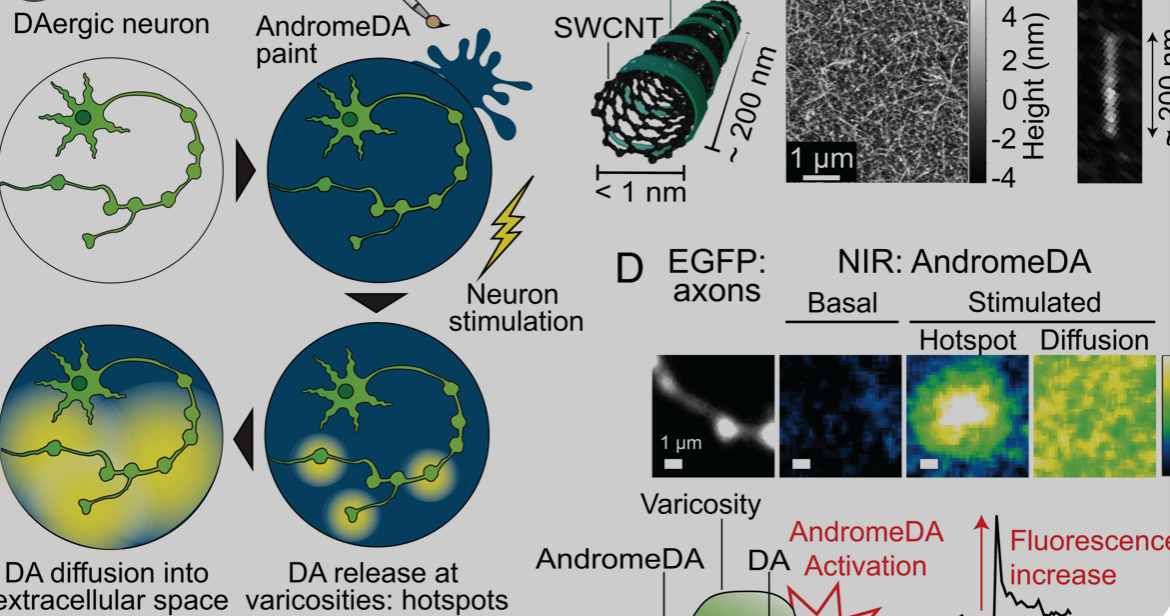

Carbon nanotube sensor precisely measures dopamine

Ruhr University professor Sebastian Kruss, with Max Planck researchers Sofia Elizarova and James Daniel, has developed a sensor that can visualize the release of dopamine from nerve cells with unprecedented resolution. The team used modified carbon nanotubes that glow brighter in the presence of the messenger substance dopamine. Elizarova said that the sensor “provides new…

-

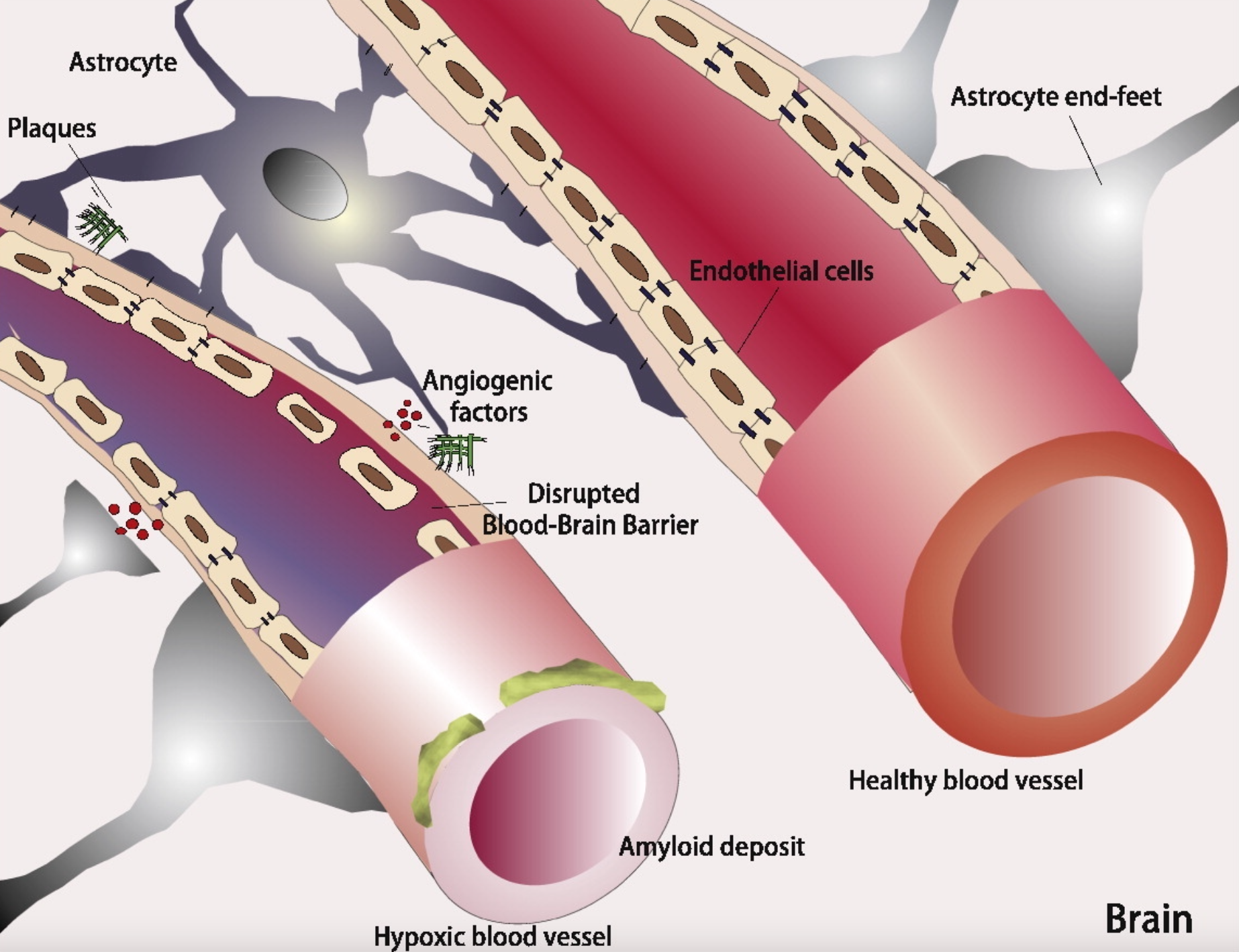

Univ. of Reading study links Alzheimer’s disease to blood-brain barrier damage

The “Lipid Invasion Model” argues that lipids entering the brain through a damaged blood-brain barrier are the determining cause of Alzheimer’s disease. Excess lipids in the brain cells of Alzheimer’s patients featured in Alois Alzheimer’s 1906 research, but little has been published about this connection since. The hypothesis,…

-

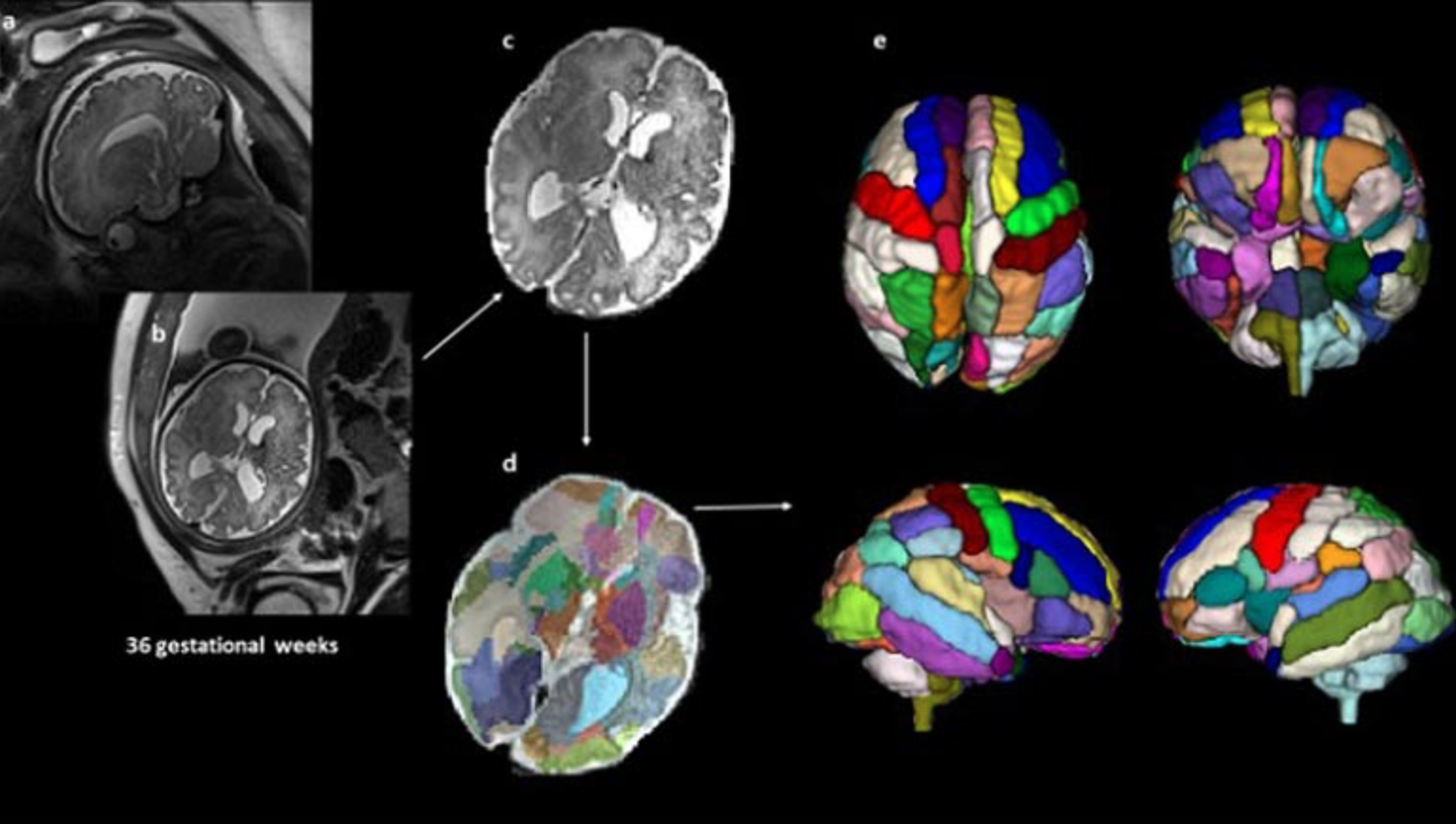

Prenatal MRI study suggests autism differences may begin in the womb

A small Boston Children’s Hospital study led by Assistant Professor Emi Takahashi and postdoc Alpen Ortug identified increased insular lobe volume as a potentially strong prenatal MRI biomarker that could predict the later emergence of ASD. It revealed significant differences in brain structures at 25 weeks’ gestation between children who were…

-

Stanford study: High-dose magnetic stimulation eases severe depression

Nolan Williams, Alan Schatzberg, and Stanford colleagues have published a small, double-blind study showing that high-dose, noninvasive magnetic brain stimulation alleviated depression symptoms in 80% of participants. Stanford Accelerated Intelligent Neuromodulation Therapy (SAINT) is an intensive, individualized form of transcranial magnetic stimulation. Effects were seen within days and lasted months. Side effects included…

-

Passive EEG assessment detects cognitive decline early

George Stothart and University of Bath colleagues have developed a new memory assessment technique combining EEG with a game, which could enable earlier diagnosis of Alzheimer’s disease, the underlying cause of around 60% of dementia cases. The need for early diagnosis tools to help doctors prescribe lifestyle interventions to slow the rate of cognitive decline…

-

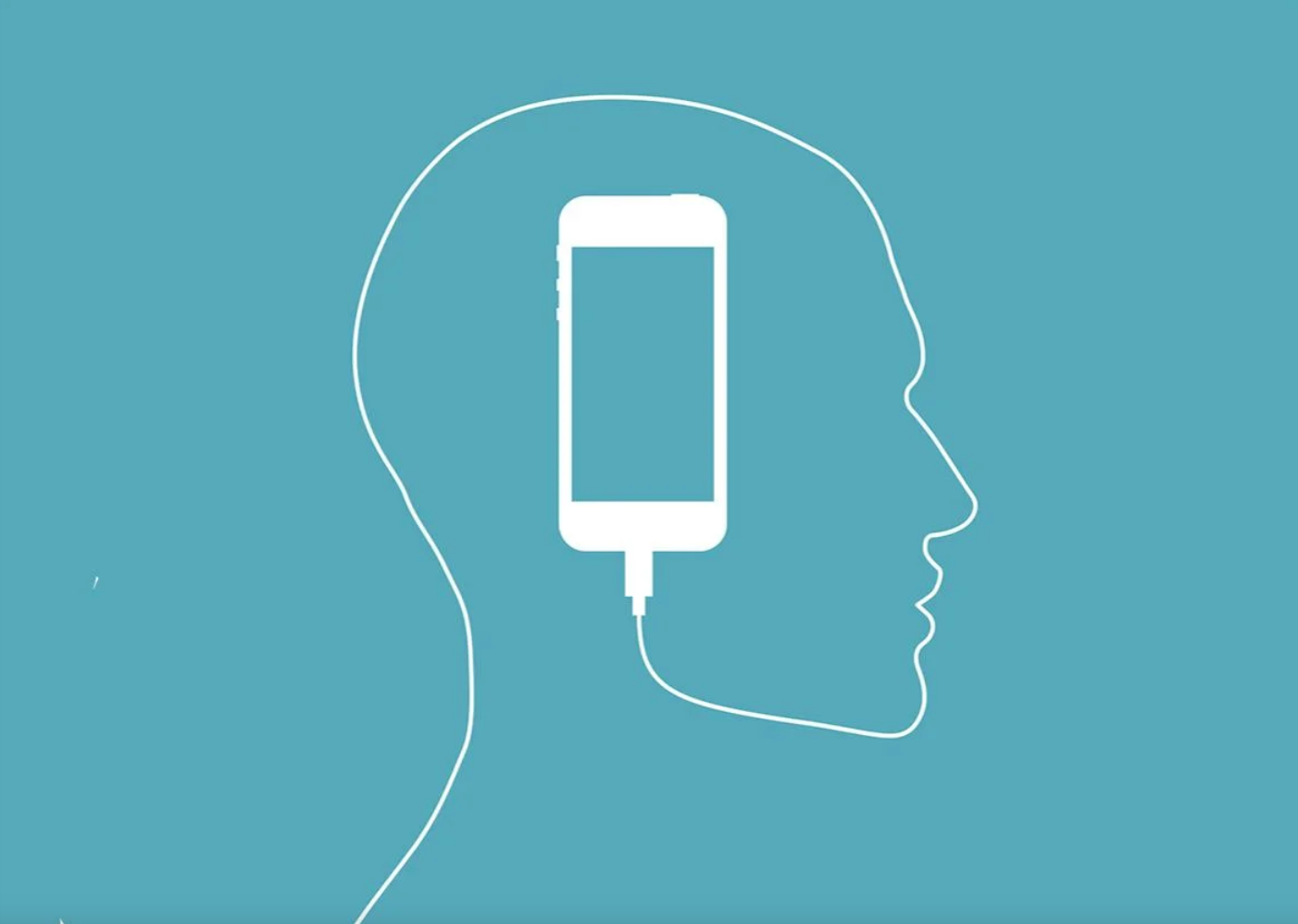

Apple partners with UCLA, Biogen for iPhone mental/cognitive health monitoring

The Apple/UCLA project “Seabreeze” and the Apple/Biogen project “Pi” represent a further move into iPhone health monitoring. According to the Wall Street Journal, Apple is attempting to develop an algorithm to identify depression and cognitive decline from sleep patterns, mobility, and how people use their phones, for example, how often they look at the clock.…

-

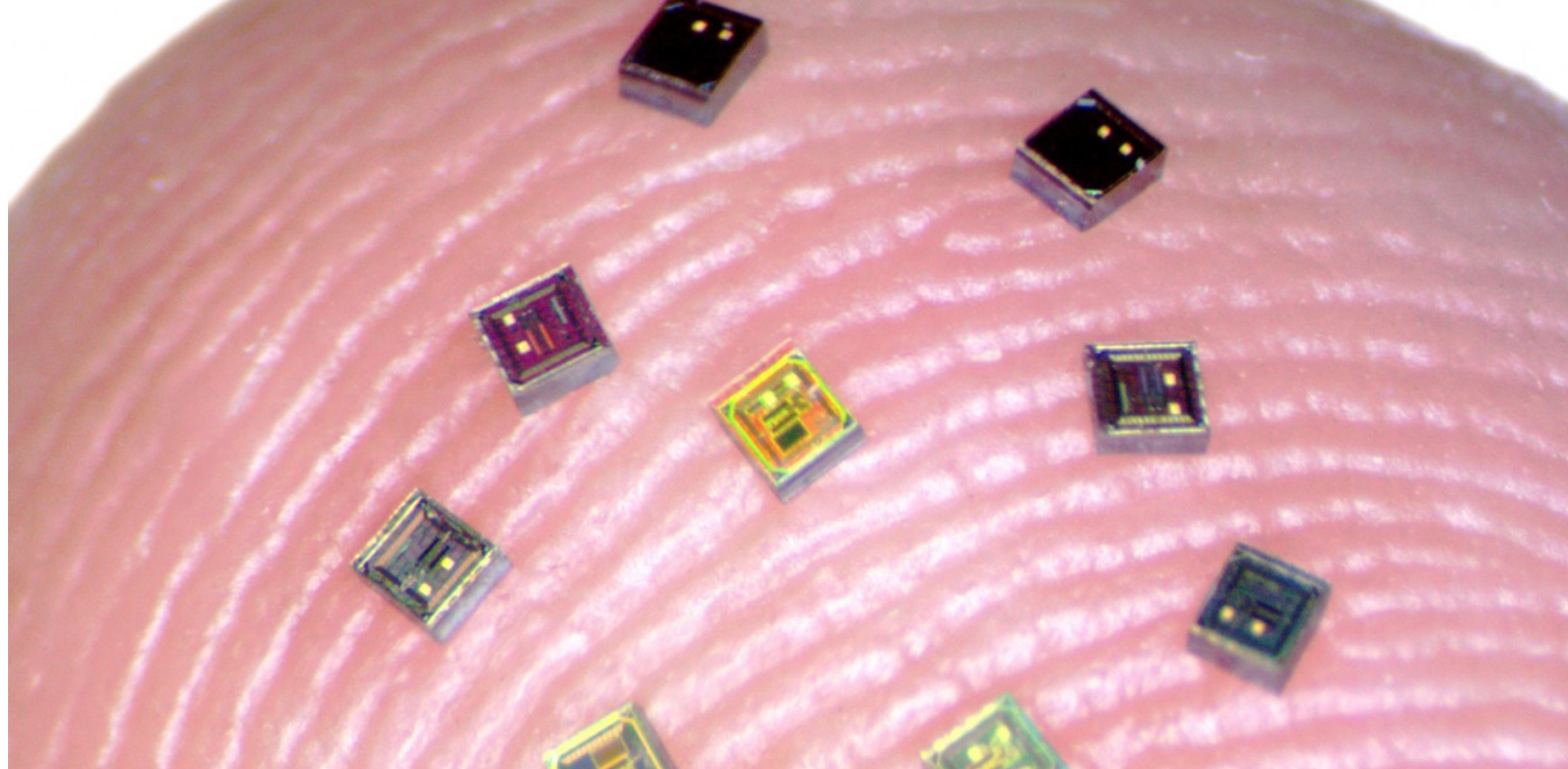

Nurmikko’s Neurograins could enable unprecedented detail in brain signal recording, and new therapies

Arto Nurmikko and Brown colleagues have developed a BCI system that employs a coordinated network of independent, wireless microscale neural sensors to record and stimulate brain activity. “Neurograins” independently record electrical pulses made by firing neurons and send the signals wirelessly to a central hub, which coordinates and processes the signals. In a recent Nature paper,…

-

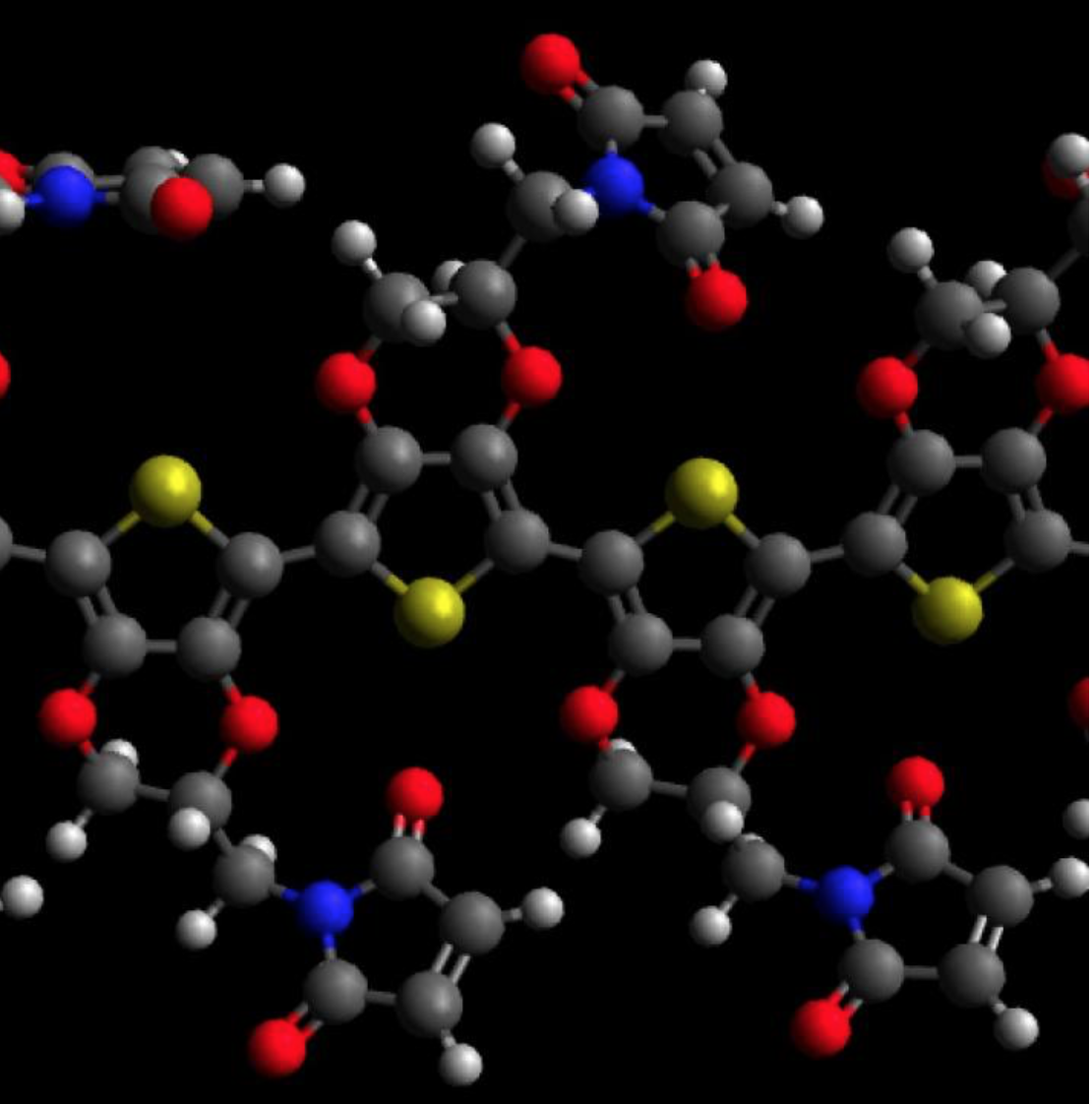

Polymer improves medical implants, could enable brain-computer interface

David Martin and University of Delaware colleagues have developed a bio-synthetic coating for electronic components that could avoid the scarring (and signal disruption) caused by traditional microelectronic materials. The PEDOT polymer improved the performance of medical implants by lowering their impedance, that is, their opposition to an electric current. PEDOT film was used with an antibody to stimulate blood…