Tag: BCI

-

Brain-spine interface allows paraplegic man to walk

EPFL professor Grégoire Courtine has created a “digital bridge” that has allowed a man with paraplegia caused by spinal cord damage to walk. The brain–spine interface builds on previous work that combined intensive training with a lower spine stimulation implant. Gert-Jan Oskam participated in that earlier trial but stopped improving after three years. The new…

-

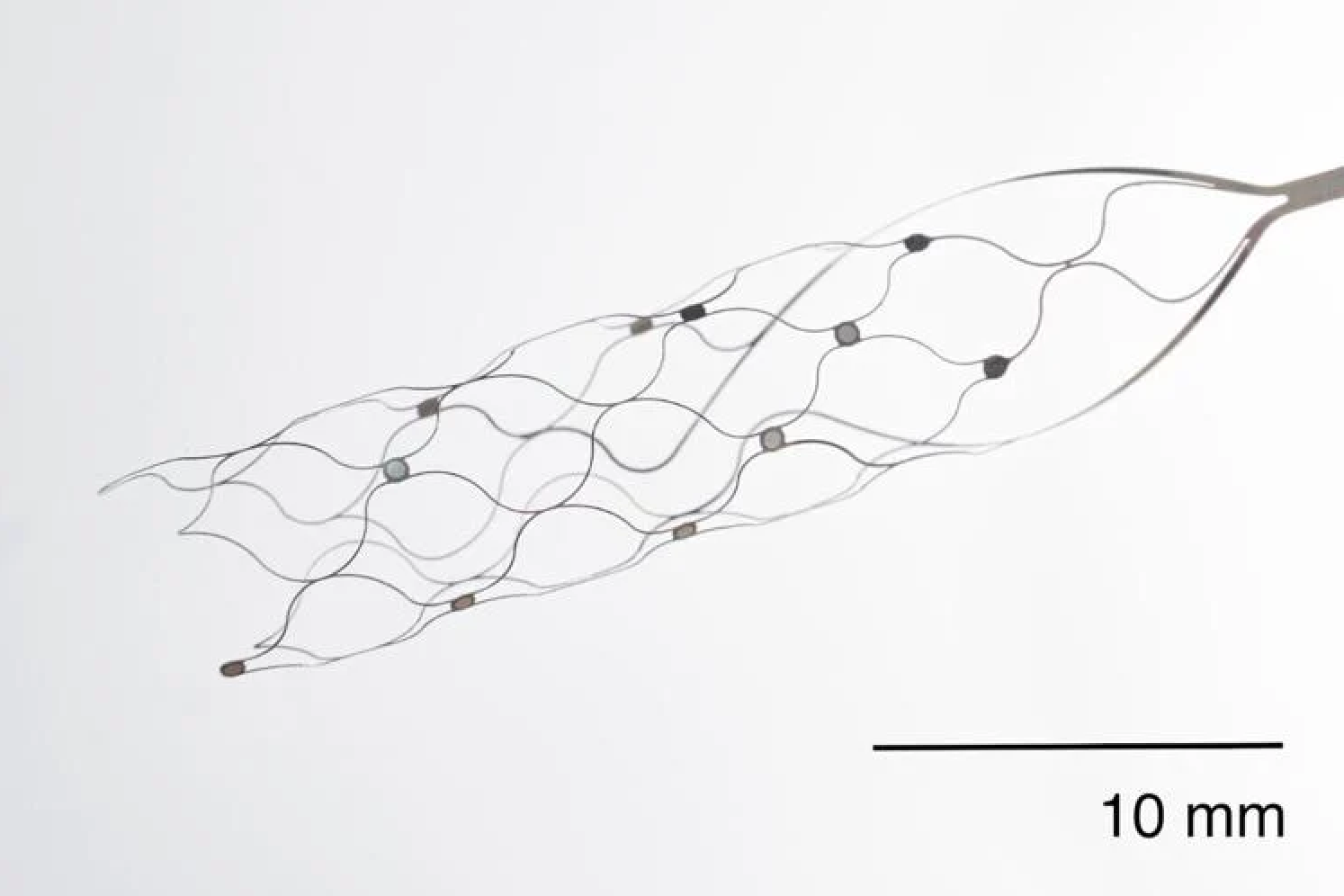

First US patient receives Synchron endovascular BCI implant

On July 6, 2022, Mount Sinai’s Shahram Majidi threaded Synchron’s 1.5-inch-long wire-and-electrode implant into a blood vessel in the brain of a patient with ALS. The goal is for the patient, who cannot speak or move, to be able to surf the web and communicate via email and text using his thoughts. Four…

-

Shallow implant plus precise stimulation startup aims to treat depression

Inner Cosmos has developed a shallowly implanted brain stimulation system meant to address depression. The company calls its system a “digital pill,” but it still requires a procedure to place electronics under the skin on the head. Chief Medical Officer Eric Leuthardt is a top neurosurgeon from Washington University in St. Louis and the CEO…

-

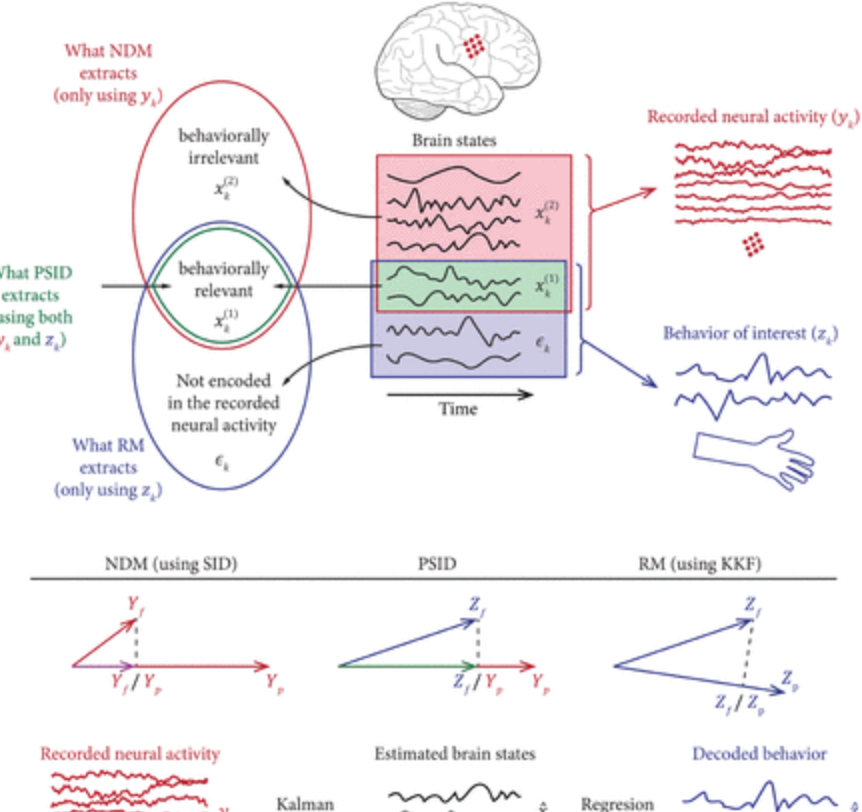

Algorithm isolates specific brain signals, provides feedback

The US Army and USC Prof. Maryam Shanechi have developed an algorithm that can determine which brain signals correspond to specific behaviors, such as walking and breathing. Segmenting brain signals has been notoriously difficult, as the signals associated with different tasks mix together. Shanechi and her team used the algorithm to separate behaviorally relevant brain signals from behaviorally…

-

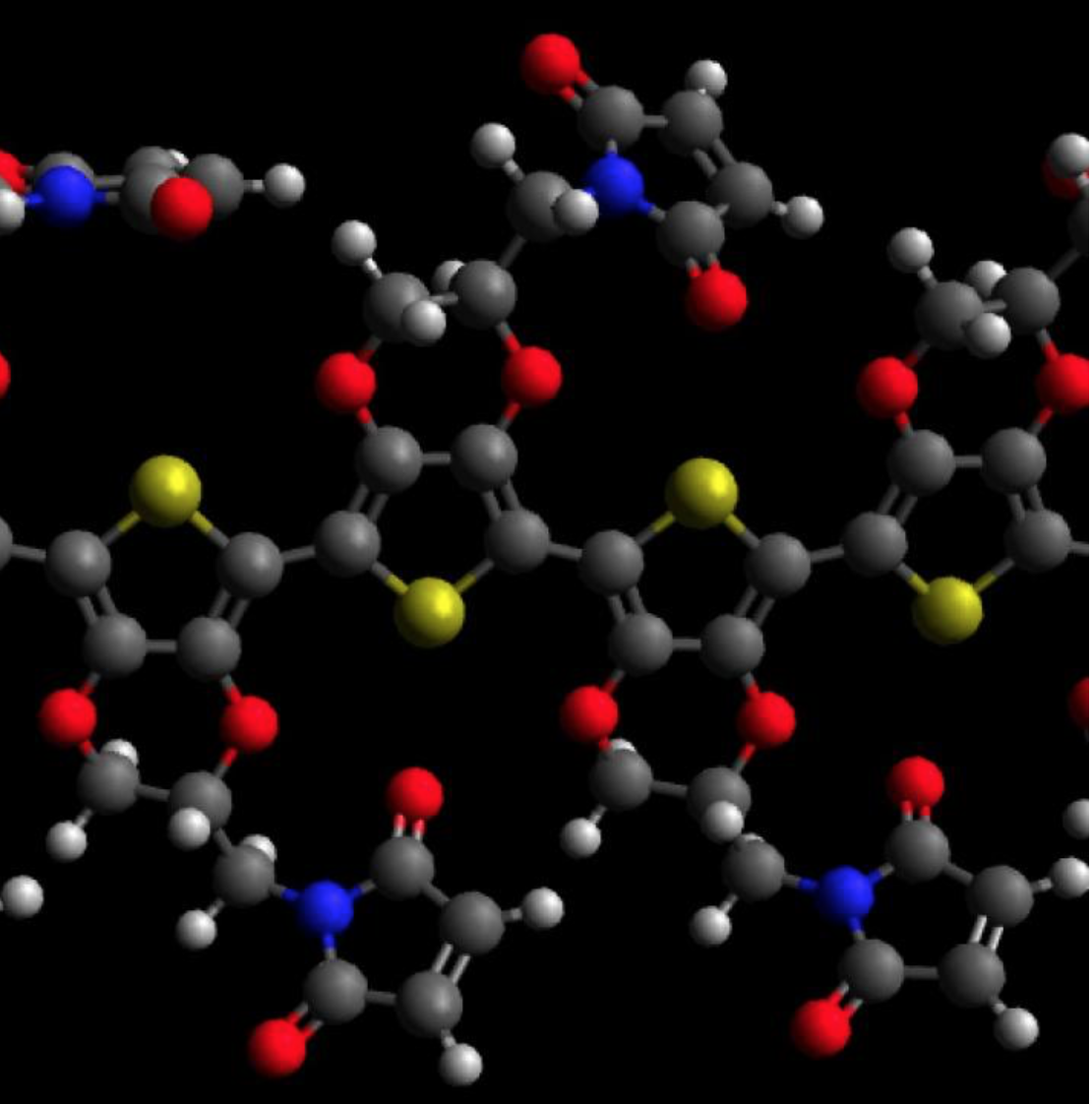

Polymer improves medical implants, could enable brain-computer interface

David Martin and University of Delaware colleagues have developed a bio-synthetic coating for electronic components that could avoid the scarring (and signal disruption) caused by traditional microelectronic materials. The PEDOT polymer improved the performance of medical implants by reducing their opposition to electric current. PEDOT film was used with an antibody to stimulate blood…

-

Facebook’s Mark Chevillet on Brain-Computer Interfaces

Mark Chevillet’s recent talk at the ApplySci Silicon Valley conference, called “Imagining a new Interface: Hands-free Communication Without Saying a Word,” is now live on the ApplySci YouTube Channel. Join ApplySci at Deep Tech Health + Neurotech Boston on September 24, 2020 at MIT.

-

Study: Noninvasive BCI improves function in paraplegia

Miguel Nicolelis has developed a non-invasive system for lower-limb neurorehabilitation. Study subjects wore an EEG headset to record brain activity and detect movement intention. Eight electrodes were attached to each leg, stimulating muscles involved in walking. After training, patients used their own brain activity to send electric impulses to their leg muscles, imposing a physiological gait. With…

-

Thought-generated speech

Edward Chang and UCSF colleagues are developing technology that will translate signals from the brain into synthetic speech. The research team believes that the sounds would be nearly as sharp and normal as a real person’s voice. Sounds made by the human lips, jaw, tongue and larynx would be simulated. The goal is a communication method for…

-

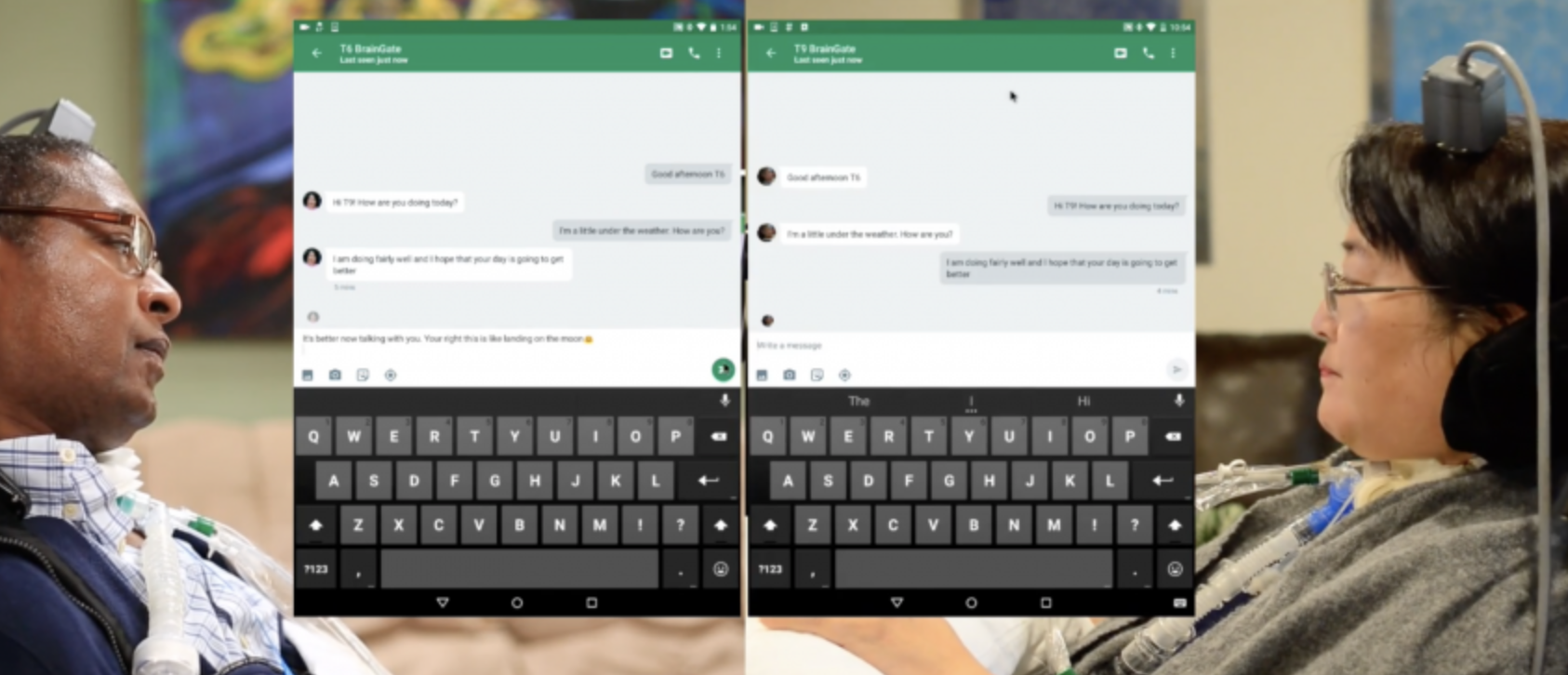

Thought-controlled tablets

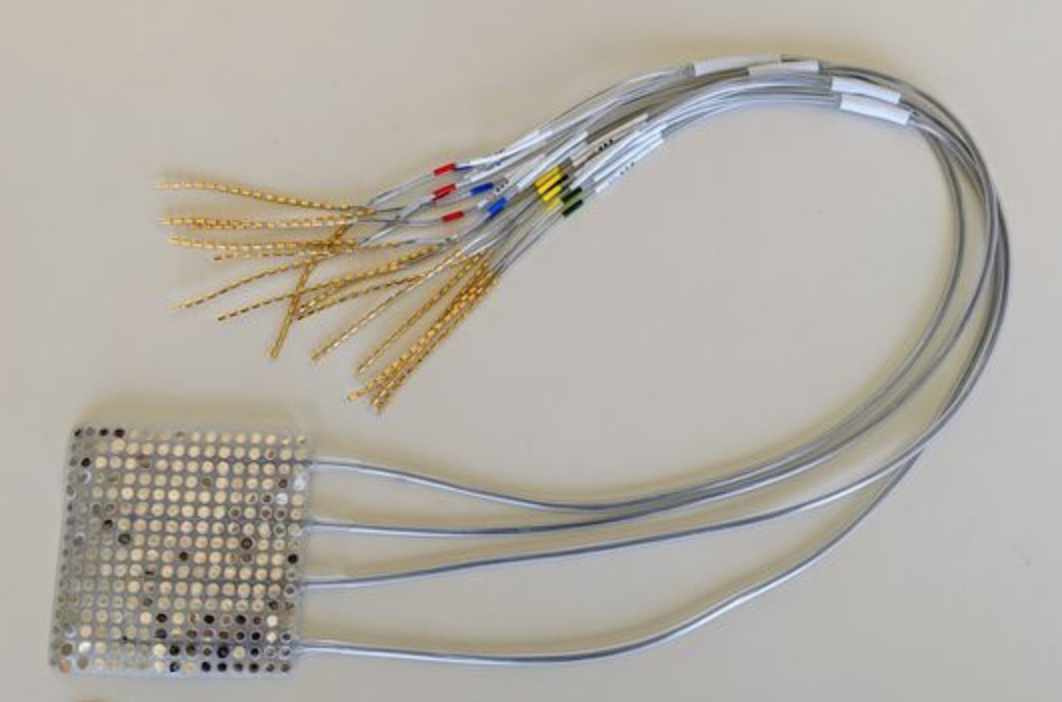

The BrainGate/Brown/Stanford/MGH/VA consortium has published a study describing three tetraplegic patients who were able to control an off-the-shelf tablet with their thoughts. They surfed the web, checked the weather and shopped online. A musician played part of Beethoven’s “Ode to Joy” on a digital piano interface. The BrainGate BCI included a small implant that…

-

Thought-controlled television

Samsung and EPFL researchers, including Ricardo Chavarriaga, are developing Project Pontis, a BCI system meant to allow people with disabilities to control a TV with their thoughts. The prototype uses a 64-sensor headset plus eye tracking to determine when a user has selected a particular movie. Machine learning is used to build a profile of…

-

Brain-to-brain communication interface

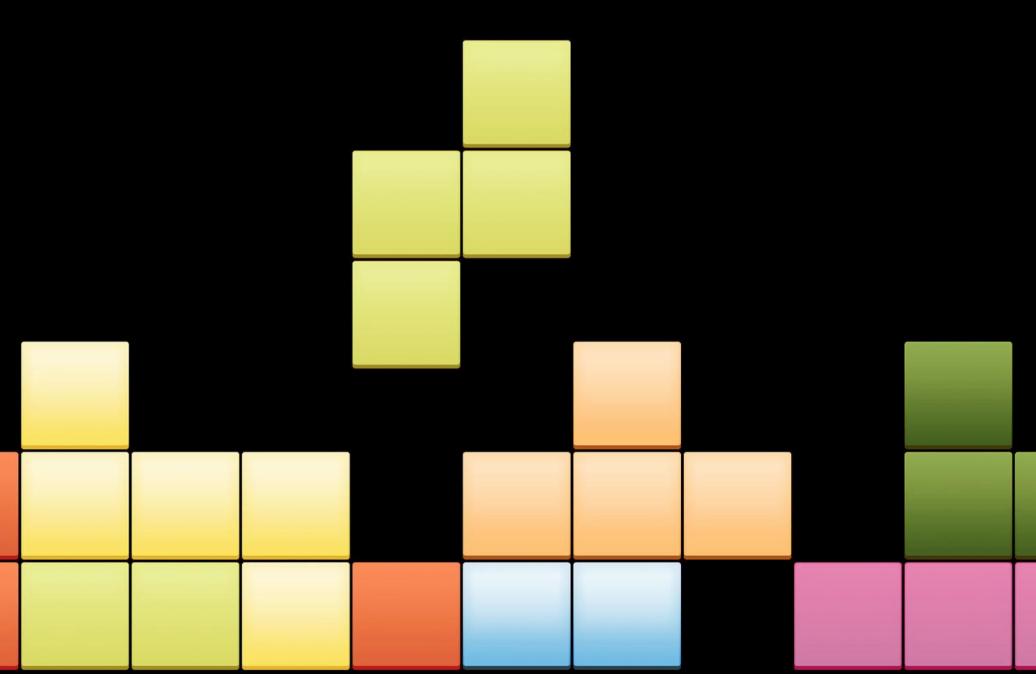

Rajesh Rao and University of Washington colleagues have developed BrainNet, a non-invasive direct brain-to-brain interface for multiple people. The goal is a social network of human brains for problem solving. The interface combines EEG to record brain signals with TMS to deliver information to the brain, enabling three people to collaborate via direct brain-to-brain communication.…

-

DARPA: Three aircraft virtually controlled with brain chip

Building on 2015 research that enabled a paralyzed person to virtually control an F-35 jet, DARPA’s Justin Sanchez has announced that the brain can be used to command and control three types of aircraft simultaneously. Click to view Justin Sanchez’s talk at ApplySci’s 2018 conference at Stanford University. Join ApplySci at the 9th Wearable Tech…