Category: BCI

-

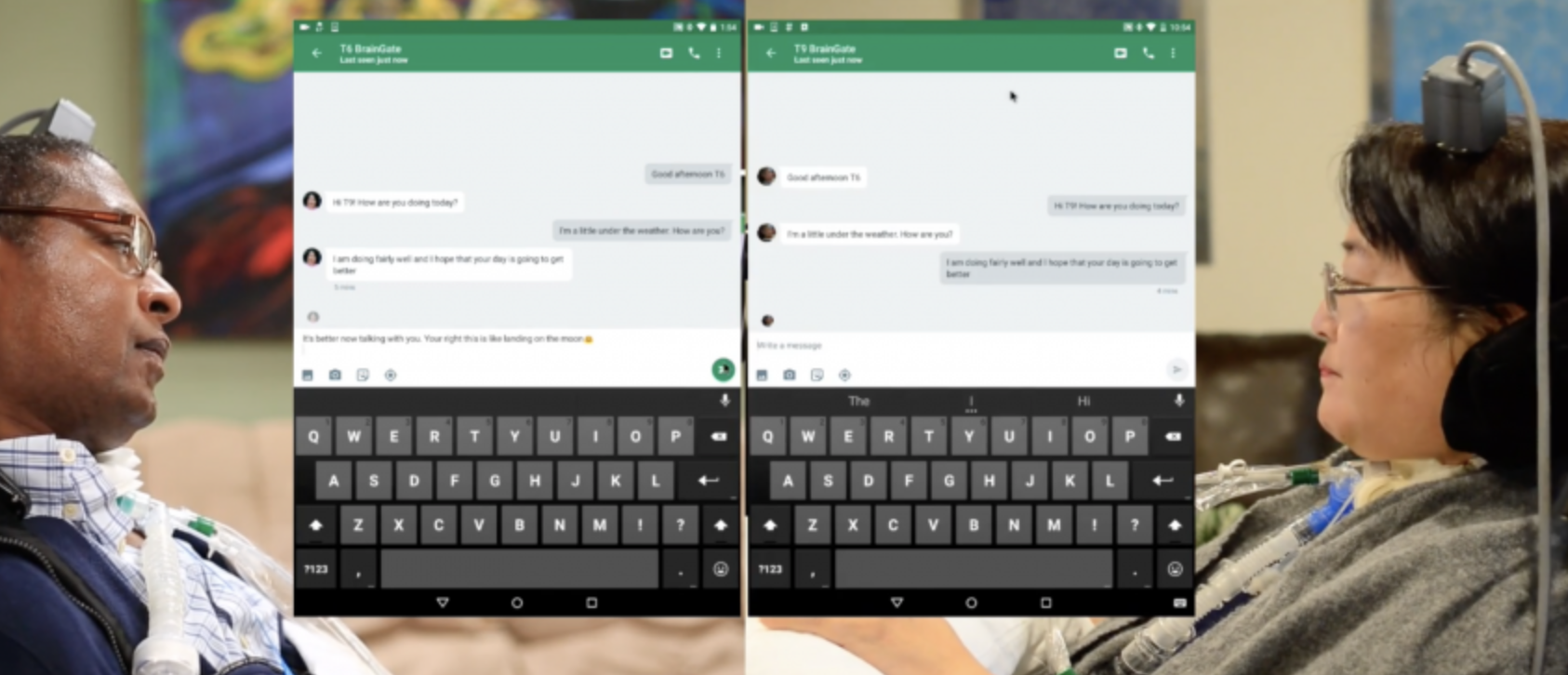

Thought controlled tablets

The BrainGate/Brown/Stanford/MGH/VA consortium has published a study describing three tetraplegic patients who were able to control an off-the-shelf tablet with their thoughts. They surfed the web, checked the weather and shopped online. A musician played part of Beethoven’s “Ode to Joy” on a digital piano interface. The BrainGate BCI included a small implant that…

-

Thought controlled television

Samsung and EPFL researchers, including Ricardo Chavarriaga, are developing Project Pontis, a BCI system meant to allow people with disabilities to control a TV with their thoughts. The prototype uses a 64-sensor headset plus eye tracking to determine when a user has selected a particular movie. Machine learning is used to build a profile of…

-

Brain-to-brain communication interface

Rajesh Rao and University of Washington colleagues have developed BrainNet, a non-invasive direct brain-to-brain interface for multiple people. The goal is a social network of human brains for problem solving. The interface combines EEG to record brain signals with TMS to deliver information to the brain, enabling three people to collaborate via direct brain-to-brain communication.…

-

DARPA: Three aircraft virtually controlled with brain chip

Building on 2015 research that enabled a paralyzed person to virtually control an F-35 jet, DARPA’s Justin Sanchez has announced that the brain can be used to command and control three types of aircraft simultaneously. Click to view Justin Sanchez’s talk at ApplySci’s 2018 conference at Stanford University. Join ApplySci at the 9th Wearable Tech…

-

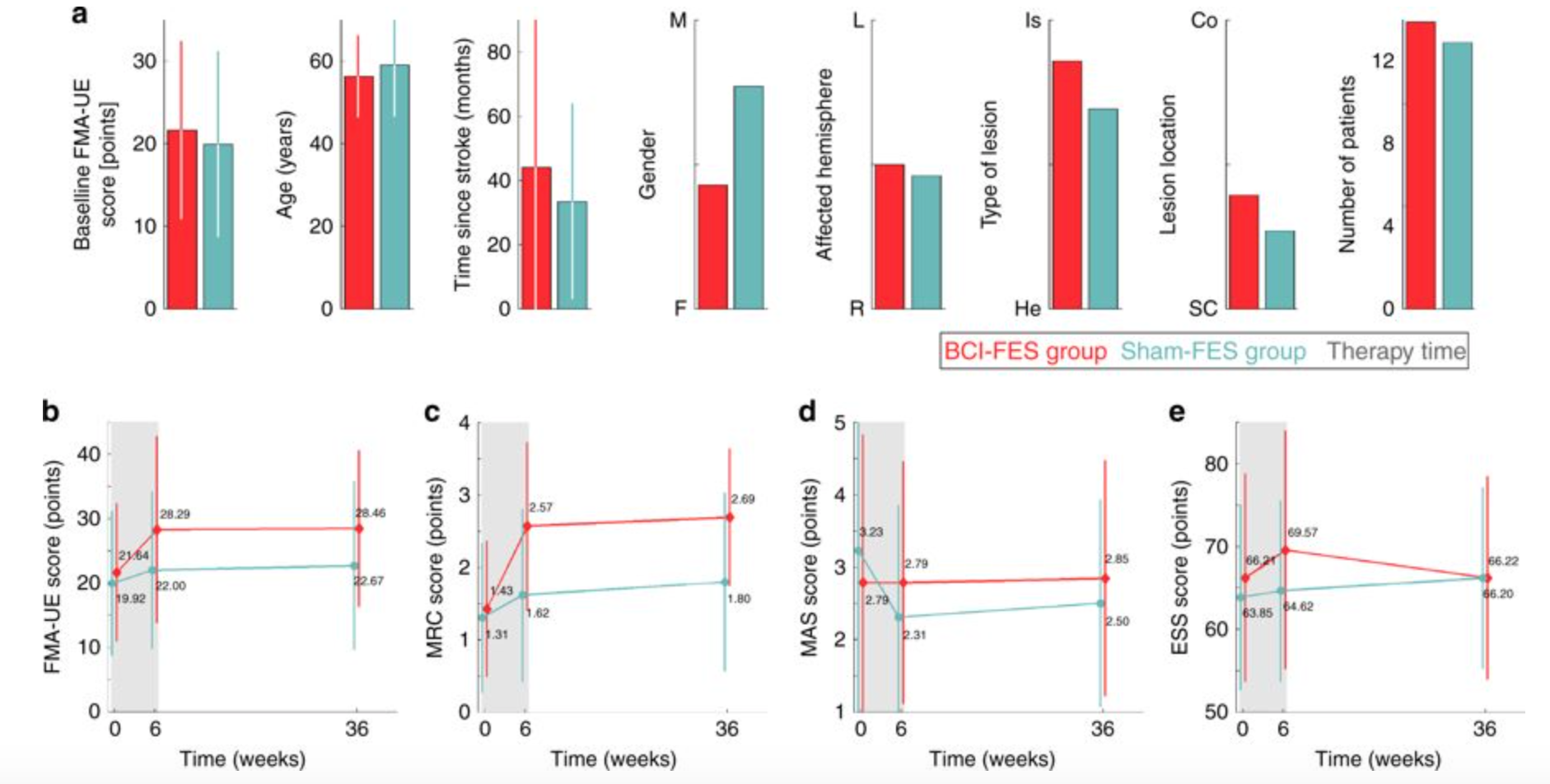

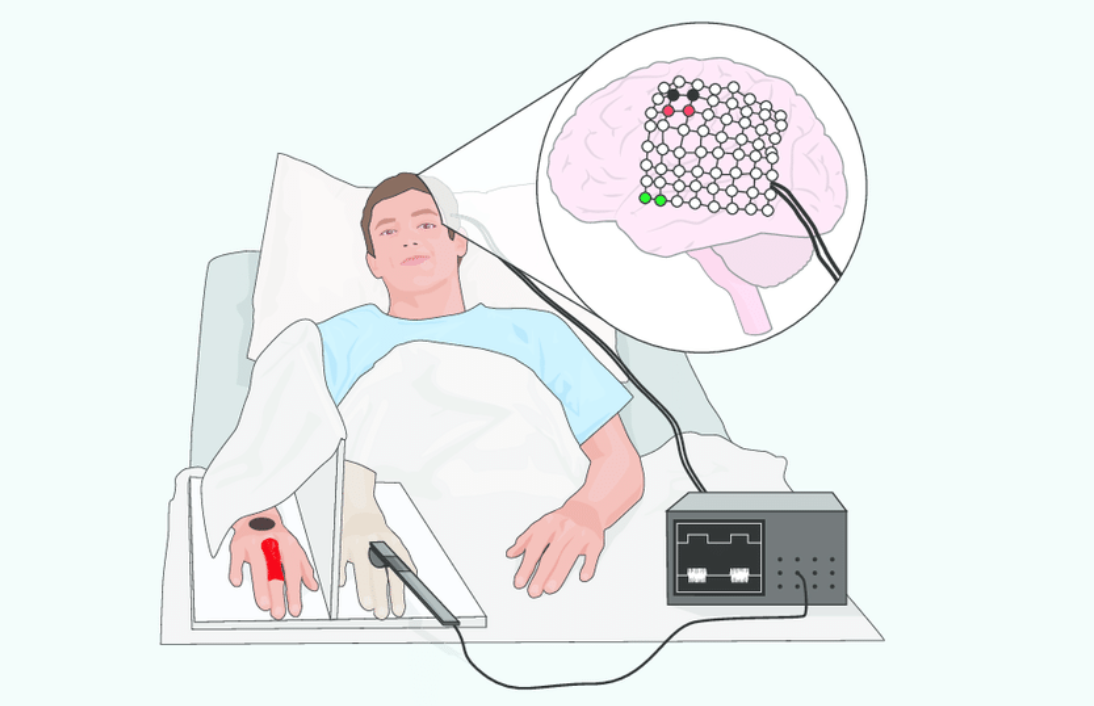

Combined BCI + FES system could improve stroke recovery

Jose Millan and EPFL colleagues have combined a brain-computer interface with functional electrical stimulation in a system that, in a study, showed the ability to enhance the restoration of limb use after a stroke. According to Millan: “The key is to stimulate the nerves of the paralyzed arm precisely when the stroke-affected part of…

-

Bob Knight on decoding language from direct brain recordings | ApplySci @ Stanford

Berkeley’s Bob Knight discussed (and demonstrated) decoding language from direct brain recordings at ApplySci’s recent Wearable Tech + Digital Health + Neurotech Silicon Valley conference at Stanford. Join ApplySci at the 9th Wearable Tech + Digital Health + Neurotech Boston conference on September 24, 2018 at the MIT Media Lab. Speakers include: Rudy Tanzi – Mary Lou Jepsen – George…

-

Nathan Intrator on epilepsy, AI, and digital signal processing | ApplySci @ Stanford

Nathan Intrator discussed epilepsy, AI and digital signal processing at ApplySci’s Wearable Tech + Digital Health + Neurotech Silicon Valley conference on February 26-27, 2018 at Stanford University. Join ApplySci at the 9th Wearable Tech + Digital Health + Neurotech Boston conference on September 24, 2018 at the MIT Media Lab. Speakers include: Mary Lou…

-

Ed Boyden on tools for mapping, repairing brain circuitry | ApplySci @ Stanford

Ed Boyden discussed tools for mapping and repairing brain circuitry at ApplySci’s Wearable Tech + Digital Health + Neurotech Silicon Valley conference. Recorded on February 26-27, 2018 at Stanford University. Join ApplySci at the 9th Wearable Tech + Digital Health + Neurotech Boston conference on September 24, 2018 at the MIT Media Lab.

-

DARPA’s Justin Sanchez on driving and reshaping biotechnology | ApplySci @ Stanford

DARPA Biological Technologies Office Director Dr. Justin Sanchez discussed driving and reshaping biotechnology. Recorded at ApplySci’s Wearable Tech + Digital Health + Neurotech Silicon Valley conference on February 26-27, 2018 at Stanford University. Join ApplySci at the 9th Wearable Tech + Digital Health + Neurotech Boston conference on September 25, 2018 at the MIT Media…

-

TMS + VR for sensory, motor skill recovery after stroke

EPFL’s Michela Bassolino has used transcranial magnetic stimulation to create hand sensations when combined with VR. By stimulating the motor cortex, subjects’ hand muscles were activated, and involuntary short movements were induced. In a recent study, when subjects observed a virtual hand moving at the same time and in a similar manner to their own…

-

Mary Lou Jepsen on wearable MRI + telepathy | ApplySci @ Stanford

Mary Lou Jepsen discusses wearable MRI + holography-based telepathy at ApplySci’s Wearable Tech + Digital Health + Neurotech Silicon Valley conference – February 26-27, 2018 at Stanford University. Join ApplySci at the 9th Wearable Tech + Digital Health + Neurotech Boston conference – September 25, 2018 at the MIT Media Lab.

-

Bone-conduction headset for voice-free communication

MIT’s Arnav Kapur has created a device that senses and interprets neuromuscular signals created when we subvocalize. AlterEgo rests on the ear and extends across the jaw. A pad sticks beneath the lower lip, and another below the chin. It senses jaw and facial tissue bone-conduction, undetectable by humans. Two bone-conduction headphones pick up inner ear…